
The Gemini 2.5 Pro model, developed by Google, is a state-of-the-art generative AI model built for advanced reasoning over multimodal input, including text, images, audio, and video.

In this article, we will explore three APIs that allow free access to Gemini 2.5 Pro, complete with example code and a breakdown of the key features each API offers.

1. Google AI Studio

Google AI Studio provides direct access to Gemini 2.5 Pro through its API, enabling developers to generate responses, perform multimodal tasks, and more.

Key Features:

- Multimodal Capabilities: Supports audio, images, videos, and text.

- Enhanced Reasoning: Advanced thinking, reasoning, and multimodal understanding.

- Scalability: Easily deploy large-scale applications with Vertex AI.

- Ease of Use: The SDK simplifies integration and usage.

Here’s how you can get started:

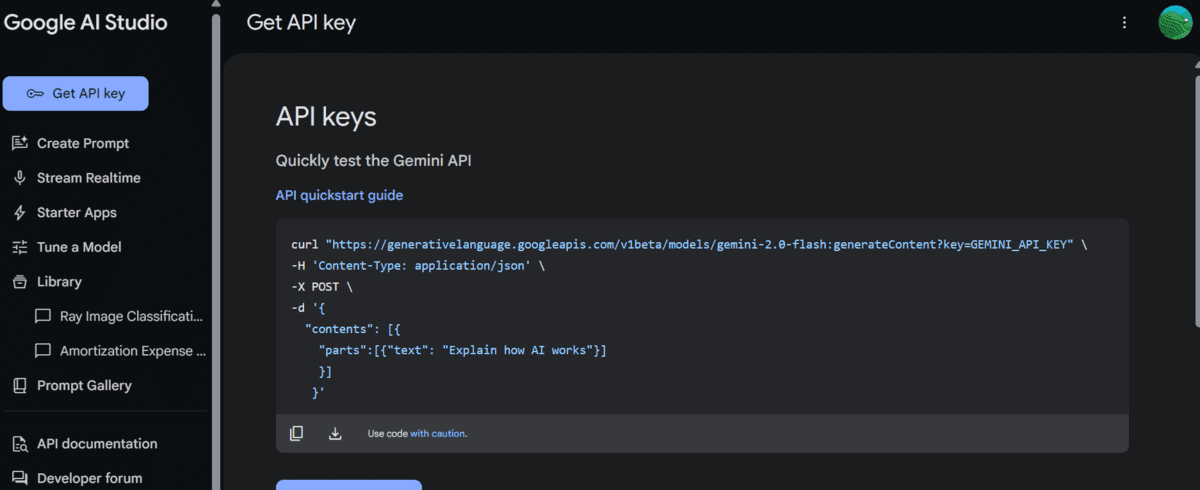

- Visit Google AI Studio, sign in with your Google account, and generate the API key.

- Copy the API key and save it as an environment variable in your system.

Screenshot from Google AI Studio
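The code later in this section reads the key with `os.environ["GEMINI_API_KEY"]`, which raises a bare `KeyError` if the variable was never exported. As a minimal sketch, a small lookup helper can fail with a clearer message instead. The name `require_env` is hypothetical and not part of any SDK.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set. "
            "Export it in your shell before running the script."
        )
    return value


if __name__ == "__main__":
    # GEMINI_API_KEY is the variable name used by the client code in this section.
    try:
        key = require_env("GEMINI_API_KEY")
        print(f"Found a key of length {len(key)}")
    except RuntimeError as err:
        print(err)
```

The same helper works for the `OPENROUTER` and `REQUESTY` variables used in the later sections.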

- Install the Google GenAI Python client:

pip install -q -U google-genai

- Initialize the client using the API key.

- Use the client to generate content by specifying the model name and prompt.

from google import genai

import os

client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])

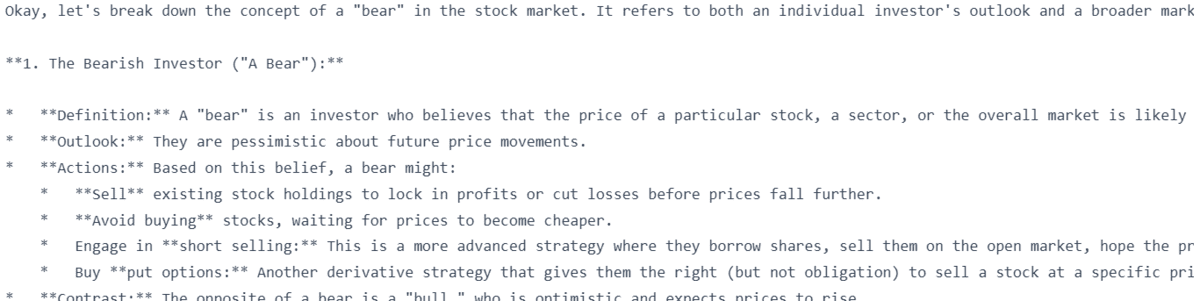

response = client.models.generate_content(

model="gemini-2.5-pro-exp-03-25", contents="Explain the concept of bear in the stock market."

)

print(response.text)

In return, you will receive a detailed explanation of the term as plain text in response.text.

2. OpenRouter

OpenRouter is an API gateway that supports multiple AI models, primarily large language models from providers such as Anthropic, Google, and Meta. It exposes them through one unified interface, so a single client and API key are enough to reach any supported provider or model. You select the model by name in each request.

Key Features:

- Model Routing: Seamlessly switch between models.

- Provider Routing: Access models from multiple providers.

- Multimodal Access: Upload images, audio, files, and text, and generate text or images in return.

- Flexible Pricing: Access both free and paid models.
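Because every model sits behind the same chat-completions call, switching models comes down to changing one string. The sketch below assumes `client` is an OpenAI-compatible client such as the one created later in this section. The helpers `ask` and `compare_models` are hypothetical names introduced for illustration.

```python
from typing import Any


def ask(client: Any, model: str, prompt: str) -> str:
    """Send one user prompt to `model` and return the reply text."""
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return completion.choices[0].message.content


def compare_models(client: Any, models: list[str], prompt: str) -> dict[str, str]:
    """Ask the same question of several models through one client."""
    return {model: ask(client, model, prompt) for model in models}
```

A call such as `compare_models(client, ["google/gemini-2.5-pro-exp-03-25:free", "<another-model-id>"], "Summarize what a bear market is.")` would return one answer per model ID, where the second ID is a placeholder to be replaced with a real model name from the OpenRouter catalog.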

Here’s how you can get started:

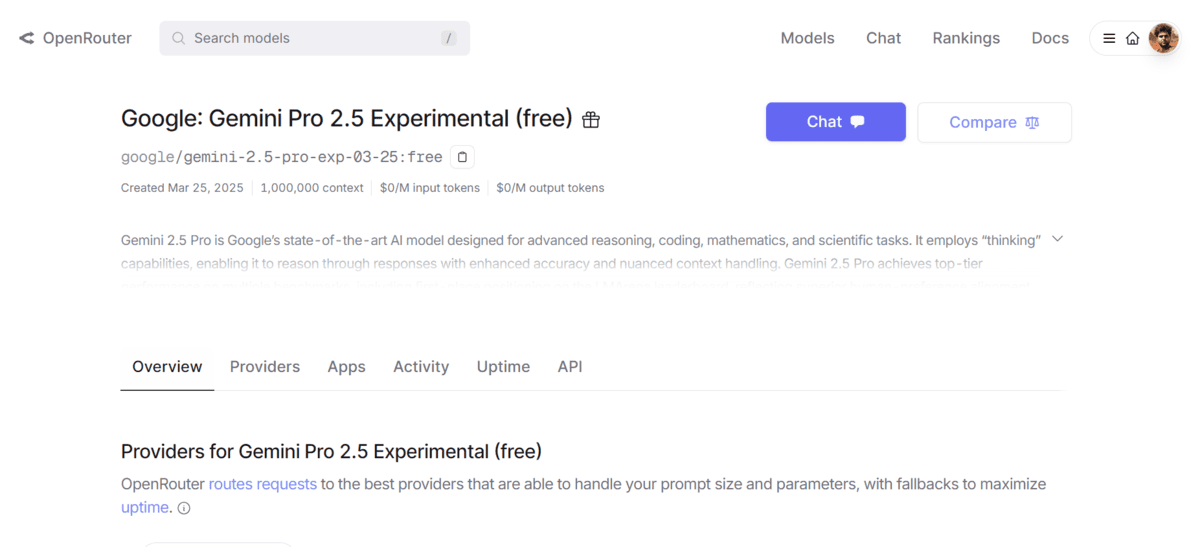

- Visit the OpenRouter website and create an account.

- Generate the API key and save it as an environment variable in your working environment.

Screenshot from OpenRouter

You can access the API using the Python requests package, the OpenAI client, or a cURL command.
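As a rough illustration of the plain-HTTP route, the sketch below builds a text-only request without the OpenAI client. It uses the standard library's `urllib` in place of the requests package to stay dependency-free, and it assumes the OpenAI-compatible `/chat/completions` path under the OpenRouter base URL used in this section. The function names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint: the section's base URL plus the OpenAI-style completions path.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str):
    """Return (url, headers, payload) for a single-turn chat completion."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return OPENROUTER_URL, headers, payload


def chat(prompt: str, model: str = "google/gemini-2.5-pro-exp-03-25:free") -> str:
    """Send the request; needs the OPENROUTER variable and network access."""
    url, headers, payload = build_chat_request(os.environ["OPENROUTER"], model, prompt)
    request = urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        data = json.load(response)
    return data["choices"][0]["message"]["content"]
```

Keeping the request construction separate from the network call makes the payload easy to inspect before anything is sent.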

- Install the OpenAI Python client (the openai package).

- Initialize the client using the base URL and API key.

- Generate a response by providing the completion function with the model name and the message, as shown below.

from openai import OpenAI

client = OpenAI(

base_url="https://openrouter.ai/api/v1",

api_key=os.environ["OPENROUTER"],

)

completion = client.chat.completions.create(

model="google/gemini-2.5-pro-exp-03-25:free",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

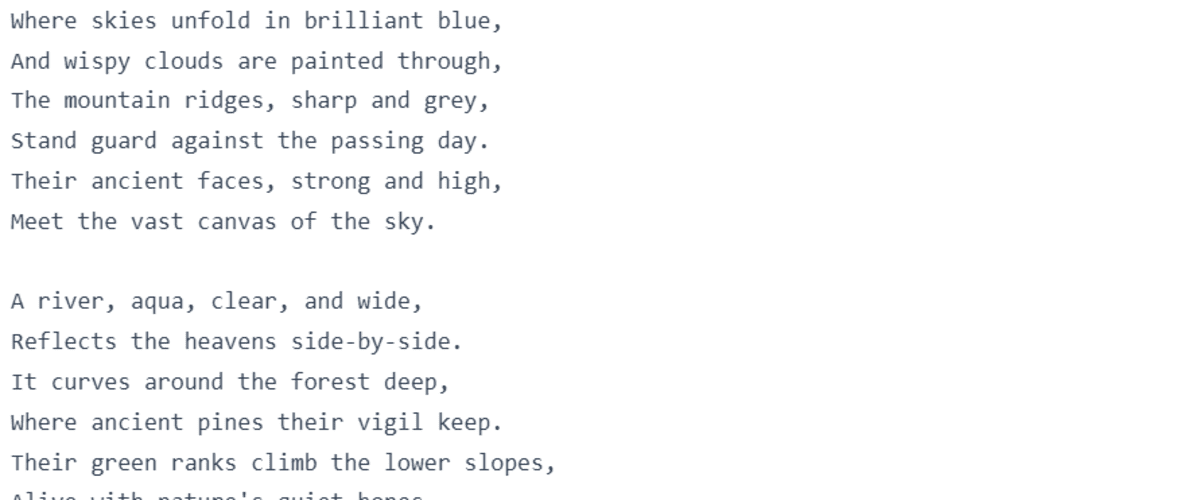

"text": "Write the poem about the image."

},

{

"type": "image_url",

"image_url": {

"url": "https://www.thewowstyle.com/wp-content/uploads/2015/02/beautiful_mountain-wide.jpg"

}

}

]

}

]

)

print(completion.choices[0].message.content)

As you can see, the model understood the image and wrote a poem about it.

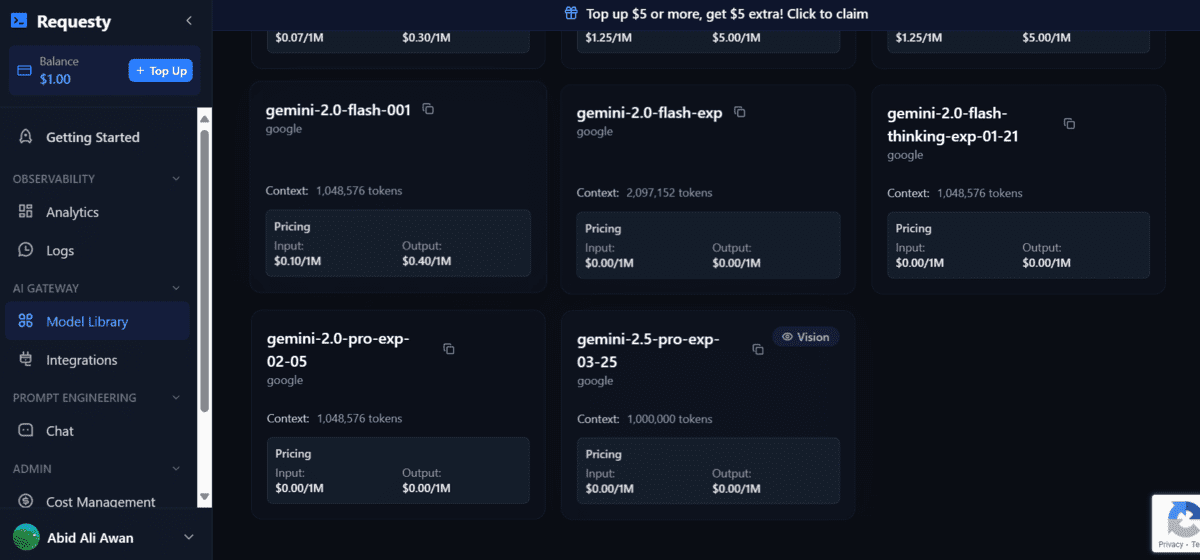
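The nested message in the OpenRouter example follows a fixed shape, so it can be produced by a helper. The sketch below also shows one way to send a local file by encoding it as a base64 data URL. That part assumes the endpoint accepts data URLs in `image_url`, which is common for OpenAI-compatible vision APIs but worth confirming in the OpenRouter documentation. Both function names are hypothetical.

```python
import base64
import mimetypes
from pathlib import Path


def image_message(text: str, image_url: str) -> dict:
    """Build a user message that pairs a text instruction with one image."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": text},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


def file_to_data_url(path: str) -> str:
    """Encode a local image file as a base64 data URL."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None:
        raise ValueError(f"Cannot determine the MIME type of {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"
```

With these in place, the request body shrinks to `messages=[image_message("Write a poem about the image.", url)]`, where `url` is either a public link or the result of `file_to_data_url` on a local file.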

3. Requesty

Requesty is a development platform designed for seamless integration with large language models like Gemini 2.5 Pro. It features intelligent LLM routing, allowing it to switch between models for better performance based on the request.

Key Features:

- Developer-Friendly: Simplifies integration with Gemini 2.5 Pro.

- Smart Routing: Automatically selects the best model for your task.

- Interactive Dashboard: Monitor and manage your API usage.

- Scalability: Designed for production-grade applications.

Here’s how you can get started:

- Go to the Requesty website and create an account.

- While creating the account, you will be guided to generate your first API key. Save that key as an environment variable for later use.

Screenshot from Requesty

- Using the OpenAI Python client, create the client with the base URL and API key.

- Provide the chat completion function with the model name and the prompt.

    import os

    from openai import OpenAI

    # Point the OpenAI client at Requesty's router endpoint.
    client = OpenAI(
        base_url="https://router.requesty.ai/v1",
        api_key=os.environ["REQUESTY"],
    )

    # Send a single text-only user message.
    completion = client.chat.completions.create(
        model="google/gemini-2.5-pro-exp-03-25",
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Help me understand life in general."},
                ],
            }
        ],
    )

    print(completion.choices[0].message.content)

As a result, you will receive the model's reply as text, which the final line prints.
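Since OpenRouter and Requesty are both reached through the OpenAI client, the two setups differ only in base URL, key variable, and model ID. A small sketch that gathers those differences in one table, using the values from this article, might look like this. The `GATEWAYS` dictionary and the `client_settings` helper are hypothetical names.

```python
import os

# Values copied from the OpenRouter and Requesty examples in this article.
GATEWAYS = {
    "openrouter": {
        "base_url": "https://openrouter.ai/api/v1",
        "key_env": "OPENROUTER",
        "model": "google/gemini-2.5-pro-exp-03-25:free",
    },
    "requesty": {
        "base_url": "https://router.requesty.ai/v1",
        "key_env": "REQUESTY",
        "model": "google/gemini-2.5-pro-exp-03-25",
    },
}


def client_settings(gateway: str, env=os.environ) -> dict:
    """Return the keyword arguments for OpenAI(...) for the chosen gateway."""
    config = GATEWAYS[gateway]
    return {"base_url": config["base_url"], "api_key": env[config["key_env"]]}
```

A client for either gateway can then be created with `OpenAI(**client_settings("requesty"))`, passing `GATEWAYS["requesty"]["model"]` as the model name in the completion call.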

Summary

We have looked at three services that offer free API access to the new Gemini 2.5 Pro. Here is the summary:

- Google AI Studio: Best for direct access to Gemini 2.5 Pro with robust SDK support and Vertex AI integration.

- OpenRouter: Ideal for developers needing a unified API gateway for multiple models and multimodal tasks.

- Requesty: A developer-centric platform with smart routing and production-grade scalability.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.