Large language models (LLMs) have become indispensable tools that change the way we work. Many companies and individuals already rely on them, and a vibrant community has produced many open-source approaches.

One challenge in the current open-source landscape is the lack of highly performant multilingual models that can compete with monolingual ones. That’s why a dedicated team at CohereAI developed Aya Expanse, a family of models designed to perform well across multiple languages.

This article will explore CohereAI’s Aya Expanse and how to apply it in your work.

Aya Expanse In Brief

As mentioned above, Aya Expanse is a family of multilingual models developed by CohereAI’s research lab, Cohere For AI (C4AI). It is the result of several years of research aimed at producing a model that performs well across 23 languages and rivals many current state-of-the-art (SOTA) models.

The currently released models are Aya Expanse 8B and Aya Expanse 32B. Both show excellent multilingual performance, with the 32B model offering the stronger, SOTA-level capabilities.

Cohere also released the Aya Expanse training dataset, called the Aya Collection, along with its critical evaluation sets (covering multilingual performance and safety) for the community. These datasets can serve as a reference when you train your own multilingual models.

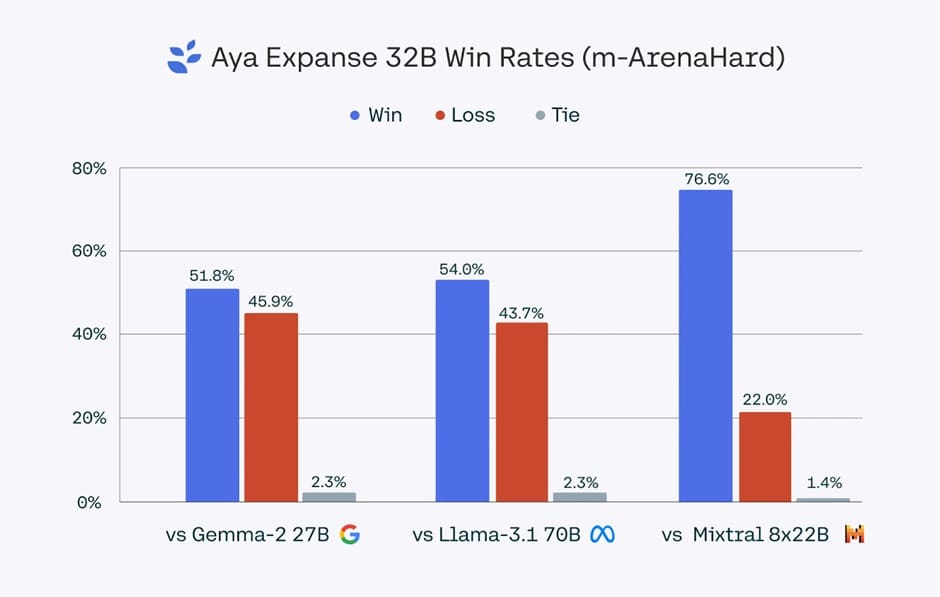

Let’s look at how Aya Expanse performs against other models on the market. Aya Expanse achieves better win rates on the m-ArenaHard evaluation set, as shown in the win-rate chart.

Aya Expanse 32B Win Rates | CohereAI

We can see that Aya Expanse 32B has a better overall win rate across 23 languages against strong models such as Gemma 2 27B, Mixtral 8x22B, and Llama 3.1 70B. This suggests that Aya Expanse is a strong candidate for business cases involving multilingual problems.

That’s a brief introduction to Aya Expanse. Next, let’s try out the model to see how it handles multilingual use cases.

Trying Out Aya Expanse

We will try out the Aya Expanse HuggingFace Space first and then use the model via HuggingFace Transformers.

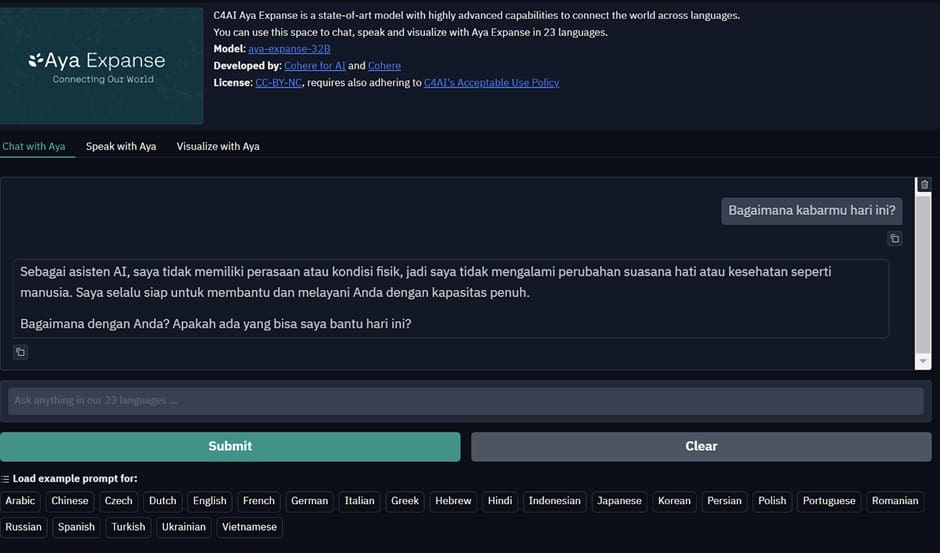

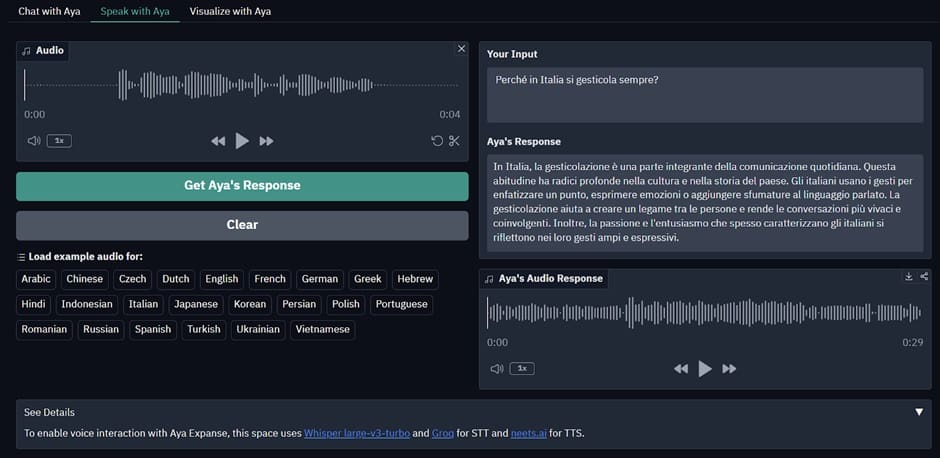

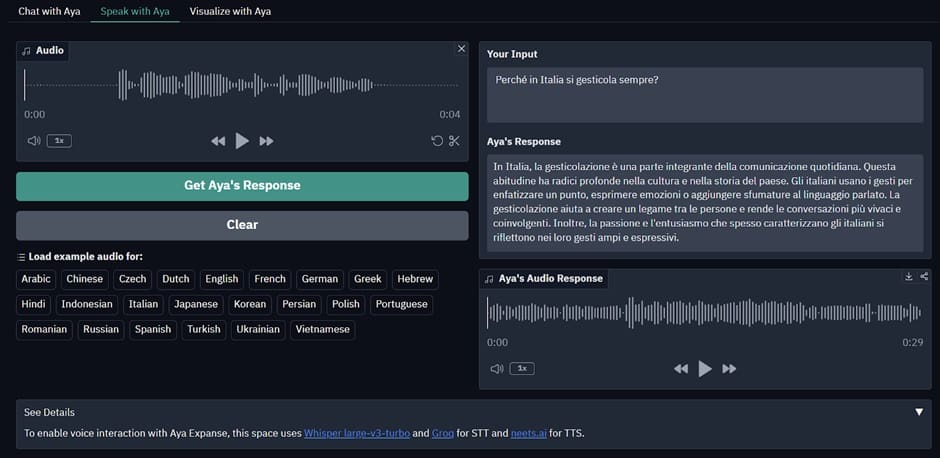

If you only want to test the model without writing Python code, you can visit the Aya Expanse HuggingFace Space. It’s a UI-based space where you can chat with the model, speak to it, or generate images with it.

For example, we can explore the chat feature with various languages, as shown in the chat screenshot.

You can also speak with Aya by passing your voice input and acquiring results from the model.

Lastly, you can generate images within the HuggingFace space.

We used a different language for each of the three features we tried out. It shows how well Aya Expanse models understand other languages without needing specific retraining.

Let’s try out the Python implementation of Aya Expanse with HuggingFace.

We will use the standard HuggingFace Transformers library for our tutorial and access the model from HuggingFace. To do that, install the following libraries (PyTorch is included because the code below requests PyTorch tensors).

pip install transformers huggingface_hub torch

We will use Aya Expanse 8B in this tutorial to test the multilingual capability. As the model is gated, you must request access and have a HuggingFace API key (access token) to download it. Secure both before moving on.

Next, log in to the HuggingFace hub to access the Aya Expanse model.

from huggingface_hub import login

login('hf_api_key')
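Hard-coding the key in a script makes it easy to leak. As a minimal sketch, assuming the key is stored in an environment variable named `HF_TOKEN` (the variable name and the helpers `read_hf_token` and `login_from_env` are illustrative choices, not part of the original tutorial), the key can be read at runtime and then passed to `login`:

```python
import os


def read_hf_token(env_var="HF_TOKEN"):
    """Return the HuggingFace key stored in an environment variable.

    The variable name is an assumption for this sketch; use whatever name
    you exported the key under.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return token


def login_from_env(env_var="HF_TOKEN"):
    """Log in to the HuggingFace hub with the key read from the environment."""
    # Imported here so the helper above stays usable without the library.
    from huggingface_hub import login

    login(token=read_hf_token(env_var))
```

With the variable exported in your shell, calling `login_from_env()` could take the place of `login('hf_api_key')`.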

Download the Aya Expanse model and tokenizer once you have logged in to the platform.

from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "CohereForAI/aya-expanse-8b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(model_id)
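Holding an 8B-parameter model at full 32-bit precision needs a lot of memory. The sketch below is a back-of-the-envelope estimate of the memory taken by the weights alone, assuming a round 8 billion parameters (inferred from the model name, not an exact count). It is followed by a hypothetical `load_half_precision` helper that asks Transformers for 16-bit weights; note that `device_map="auto"` additionally needs the `accelerate` package, which this tutorial does not install.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for the model weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: a round 8 billion parameters, inferred from the model name.
NUM_PARAMS = 8_000_000_000

print(weight_memory_gb(NUM_PARAMS, 4))  # 32-bit floats
print(weight_memory_gb(NUM_PARAMS, 2))  # 16-bit floats


def load_half_precision(model_id="CohereForAI/aya-expanse-8b"):
    """Hypothetical helper: load the model with 16-bit weights."""
    # Imported lazily so the estimate above runs without these libraries.
    import torch
    from transformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # halves the weight memory versus float32
        device_map="auto",          # needs the `accelerate` package installed
    )
```

Under that assumption, the weights take roughly 32 GB at 32-bit precision and 16 GB at 16-bit precision, before counting any memory used during generation.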

Let’s run several experiments to test the multilingual capability of Aya Expanse. First, we will write a function to generate text quickly with the model.

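def generate_text(messages):
    # Format the chat messages with the model's template and tokenize them
    input_ids = tokenizer.apply_chat_template(
        messages, tokenize=True, add_generation_prompt=True, return_tensors="pt"
    )
    # Sample up to 100 new tokens at a low temperature
    gen_tokens = model.generate(
        input_ids,
        max_new_tokens=100,
        do_sample=True,
        temperature=0.3,
    )
    # Decode the full generated sequence and print it
    gen_text = tokenizer.decode(gen_tokens[0])
    print(gen_text)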
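`tokenizer.decode(gen_tokens[0])` decodes the whole sequence, which includes the prompt and the chat template's special tokens. A possible variant, sketched here with the hypothetical names `new_token_ids` and `generate_reply`, slices off the prompt tokens and skips special tokens so that only the model's reply is returned. It takes the model and tokenizer as arguments and is an illustration under those assumptions, not the function used in the rest of this article.

```python
def new_token_ids(sequence, prompt_length):
    """Return only the token ids generated after the prompt."""
    return sequence[prompt_length:]


def generate_reply(model, tokenizer, messages, max_new_tokens=100):
    """Generate a reply and return it without the prompt or special tokens."""
    inputs = tokenizer.apply_chat_template(
        messages,
        tokenize=True,
        add_generation_prompt=True,
        return_dict=True,  # ask for a dict with input_ids and attention_mask
        return_tensors="pt",
    ).to(model.device)  # keep the inputs on the same device as the model
    prompt_length = inputs["input_ids"].shape[-1]
    gen_tokens = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,
        temperature=0.3,
    )
    # Drop the prompt tokens, then decode only the newly generated ones
    reply_ids = new_token_ids(gen_tokens[0], prompt_length)
    return tokenizer.decode(reply_ids, skip_special_tokens=True)
```

Returning the text instead of printing it also makes the reply easier to reuse, for example to append it to the conversation as the next assistant turn.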

Using the function above, let’s ask the Aya Expanse model to do some creative writing in Turkish.

# Creative writing
messages = [{"role": "user", "content": "Bir yaz gecesi yıldızlar altında geçen bir aşk hikayesi yaz"}]
generate_text(messages)

Output:

Gecenin serin havası, yazın sıcaklığını yumuşatırken, gökyüzü sayısız yıldızla parlıyordu. Bu büyüleyici manzaraya tanık olan genç çift, Elif ve Can, bir yaz gecesinin büyüsüne kapılmışlardı.

Elif, uzun, dalgalı saçları gece rüzgarıyla dans ederken, gözleri yıldızları izliyordu. Yüzündeki masum gülümseme, onun

The model understood the prompt and produced the kind of story we expected; the reply stops mid-sentence because generation is capped at 100 new tokens. Let’s try another example: a prompt written in French that asks for an English translation of an Italian proverb.

# Translation
messages = [{"role": "user", "content": "Traduisez cette phrase en anglais: 'Non tutte le ciambelle riescono col buco'"}]
generate_text(messages)

Output:

La traduction de la phrase italienne « Non tutte le ciambelle riescono col buco » en anglais est : « Not all donuts have a hole. »

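The model handles all three languages: it answers in French, the language of the instruction, and gives a grammatical English rendering of the Italian sentence. Note that the translation is literal; the proverb is idiomatic (roughly, "not everything turns out as planned"), so the figurative meaning is not conveyed.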

Let’s see what happens if the assistant role is in a different language than the user role.

messages = [
    {"role": "user", "content": "Weather in Jakarta is raining. How about in Paris?"},
    {"role": "assistant", "content": "Di Paris, cuaca saat ini cukup sejuk dan menyenangkan. Suhu biasanya berkisar antara 20-25 derajat Celcius."},
]
generate_text(messages)

Output:

Karena Anda bertanya tentang cuaca di Paris, dan informasi terakhir saya adalah hingga tahun 2023, saya tidak memiliki akses ke data cuaca real-time. Namun, secara umum, cuaca di Paris pada musim ini (apabila Anda merujuk pada musim gugur atau awal musim dingin) cenderung bervariasi.

Pada bulan-bulan seperti September, Oktober, dan November, Paris dapat mengalami cuaca yang berubah-ubah. Ini bisa mencakup hari-hari yang cerah dan hangat

The response is in Indonesian, the language of the earlier assistant turn, even though the user wrote the prompt in English.

Lastly, let’s see how the model handles multiple languages in a single prompt.
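This behaviour suggests that earlier assistant turns influence the language of the next reply. As a small sketch of how such a conversation can be assembled (the helper `build_messages` and its `primer_reply` argument are hypothetical names for this illustration), the function below builds the list of role/content dictionaries that `apply_chat_template` expects, optionally appending an assistant turn:

```python
def build_messages(user_prompt, primer_reply=None):
    """Build a chat history as a list of {"role", "content"} dictionaries."""
    messages = [{"role": "user", "content": user_prompt}]
    if primer_reply is not None:
        # An earlier assistant turn, possibly in a different language
        messages.append({"role": "assistant", "content": primer_reply})
    return messages


history = build_messages(
    "Weather in Jakarta is raining. How about in Paris?",
    primer_reply="Di Paris, cuaca saat ini cukup sejuk dan menyenangkan.",
)
for turn in history:
    print(turn["role"], "->", turn["content"])
```

Passing `history` to `generate_text` reproduces the shape of the experiment above. Whether the model follows the assistant turn's language in other cases is something to check empirically rather than assume.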

messages = [{"role": "user", "content": "¿Cómo se dice 'I love learning languages' en español y cuál es el mejor método para aprenderlos?"}]
generate_text(messages)

Output:

La frase 'I love learning languages' se traduce al español como 'Me encanta aprender idiomas'.

En cuanto al mejor método para aprender idiomas, no existe una respuesta única ya que la efectividad puede variar según las preferencias y el estilo de aprendizaje de cada persona. Sin embargo, aquí hay algunos enfoques y técnicas ampliamente recomendados que pueden facilitar el proceso de aprendizaje:

1. **Establecer objetivos claros:** Define tus metas de aprendizaje. ¿Quieres alcanzar fluidez,

The model understands our intention well even when multiple languages appear in the same prompt.

That concludes our exploration of Aya Expanse. You can try it on any multilingual use case you have.

Conclusion

Aya Expanse is a family of multilingual models developed by CohereAI that performs well across 23 languages and rivals many current SOTA models. This article has explored how to use Aya Expanse through several examples.

I hope this has helped!

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.