Image created by Author using Midjourney

Introduction

Deploying machine learning models is an important part of bringing AI technologies and systems to the real world. Unfortunately, the road to model deployment can be a tough one. Deployment means taking a trained model, the culmination of a lengthy data-preparation and model-training process, to the world at large, where it must continue to perform well. The challenges arise because the model must endure while the environment in which it exists changes, often dramatically.

To help bring awareness to some of the problems (and solutions) associated with deploying models, a series of tips, meant to guide beginners and practitioners alike in best practices, is set out below. These tips cover a range of topics, from optimization and containerization, to deployment processes such as CI/CD, to monitoring and security best practices, all aimed at making your deployments ready for primetime.

1. Optimize Your Models for Production

In the lab, machine learning models can sacrifice production readiness in the name of development speed. In the real world, however, models require optimization if they are to realize their design benefits. Without optimization, a model likely cannot meet the latency demands placed upon it by inference applications, perhaps using too many valuable CPU cycles or hogging too much memory to be useful in realistic conditions.

Techniques such as quantization, pruning, and knowledge distillation can help optimize models. Quantization reduces the number of bits used to represent weights, resulting in a smaller model and faster inference. Pruning eliminates weights that contribute little to the output, resulting in a streamlined model. Knowledge distillation transfers knowledge from a larger model to a smaller one, so that the smaller model can serve predictions at lower cost.
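To make quantization and pruning concrete, here is a minimal sketch in plain Python. It assumes symmetric 8-bit quantization and simple magnitude-based pruning over a flat list of weights; the function names and the sample weights are hypothetical, and production toolkits use more sophisticated schemes.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * fraction)
    # Indices of the n_prune weights closest to zero
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights from a single layer
weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

pruned = prune_by_magnitude(weights, fraction=0.5)
print("int8 codes:", codes)
print("max round-trip error:", max_error)
print("pruned weights:", pruned)
```

Each weight now fits in one byte instead of four or eight, at the cost of a round-trip error of at most half the scale factor. Whether that error is acceptable depends on the model and should be checked against a validation set.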

Tools such as TensorFlow Lite and ONNX can contribute to model optimization. Because they can optimize a model during conversion, these tools reduce the effort required to make it ready for inference in various settings, including embedded and mobile.

2. Containerize Your Application

Containerization packages your machine learning model together with its dependencies and requirements, creating a single deployable artifact that can run in essentially any environment. You can then run your model on any system that supports the container runtime, independent of the exact installation or configuration of the host system.

Docker is a popular tool for performing containerization. Docker helps create encapsulated and portable software packages. Kubernetes can effectively manage the lifecycle of containers. Using a host of development and operational best practices, you could prepare, for example, a containerized Django application using a custom API and accessing a machine learning model for Kubernetes deployment.

Containerization is an essential skill for machine learning engineers these days. It may be tempting to overlook this seemingly tangential set of tools in favor of acquiring more machine learning knowledge, but doing so would be a mistake. Containerization can become an important early step in the process of widening your audience and being able to easily manage your project in production.

3. Implement Continuous Integration and Continuous Deployment

Continuous integration and continuous deployment (CI/CD) can improve the reliability of your model deployment. CI/CD techniques and tools automate the testing, deployment, and updating of models.

A CI/CD approach helps make error handling during system testing, deployment, and the rollout of reloads and updates seamless. Of note, the issues that can arise when preparing for and using CI/CD for machine learning are no more troublesome than those in conventional software deployment. This has helped make its adoption in machine learning model deployment and management widespread.

The CI and CD processes can be carried out using tools like Jenkins and GitHub Actions. Jenkins is an open-source automation server that enables developers to build, test, and deploy their code reliably. GitHub Actions provides flexible CI/CD workflows directly integrated with GitHub repositories. Both offer value in automating the entire software development and machine learning model deployment and management lifecycles, with pipelines defined in convenient configuration files.
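One kind of automated test a pipeline might run is a model quality gate. The sketch below is an illustration only: it assumes a classification model evaluated by accuracy on a held-out set, and the names `quality_gate` and `min_accuracy`, the threshold value, and the sample data are all hypothetical. The function returns 0 or 1 so that a CI job could pass the result to `sys.exit` and fail the build when the model falls short.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def quality_gate(predictions, labels, min_accuracy):
    """Return 0 if the model meets the threshold, 1 otherwise (exit-code convention)."""
    score = accuracy(predictions, labels)
    passed = score >= min_accuracy
    print(f"accuracy={score:.3f} threshold={min_accuracy:.3f} passed={passed}")
    return 0 if passed else 1


# Hypothetical held-out labels and predictions from a candidate model
labels = [1, 0, 1, 1, 0, 1, 0, 0]
predictions = [1, 0, 1, 0, 0, 1, 0, 0]

# In a real CI job this value would be handed to sys.exit()
exit_code = quality_gate(predictions, labels, min_accuracy=0.85)
```

A Jenkins stage or GitHub Actions step that runs such a script treats a nonzero exit code as a failure, which stops an underperforming model from being deployed.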

4. Monitor Model Performance in Production

Model performance needs to be kept under careful observation once the model is in production so that it continues to deliver the desired value to the organization. Various tools help us monitor performance, but there is more to monitoring than merely watching numbers flash by in a terminal window. For instance, while various tools and methods can be used for monitoring model accuracy, response time, and resource usage, one should also adopt a solid logging package and strategy. Tools in the Kubernetes ecosystem offer dashboards that can show response times, the outcome of key transactions, and request rate.
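As a small illustration of a logging strategy, the following sketch wraps a prediction call so that every request records its output and latency through Python's standard `logging` module. The `predict` function is a hypothetical stand-in for a real model, and the logger name and message format are assumptions rather than a recommended standard.

```python
import logging
import time

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
logger = logging.getLogger("model_serving")


def predict(features):
    """Stand-in for a real model: a hypothetical fixed linear scorer."""
    return 0.4 * features[0] + 0.6 * features[1]


def timed_predict(features):
    """Call the model and log the prediction and latency of the request."""
    start = time.perf_counter()
    result = predict(features)
    latency_ms = (time.perf_counter() - start) * 1000
    logger.info(
        "prediction=%.4f latency_ms=%.3f n_features=%d",
        result, latency_ms, len(features),
    )
    return result, latency_ms


result, latency_ms = timed_predict([1.0, 2.0])
```

Writing the fields as `key=value` pairs keeps the log lines easy to parse, so a log aggregator can later chart latency or prediction distributions without custom tooling.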

In addition, it’s crucial to establish automated alerting systems that notify the machine learning operations engineers, and other stakeholders, of any significant deviations in model performance. This proactive approach allows for immediate investigation and remediation, rather than waiting until problems are noticed during routine log inspections, and so minimizes potential disruptions. Is your model experiencing drift? It would be better to know about it now.
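A drift check that could feed such an alert might look like the sketch below. It is deliberately simple: it assumes a single numeric input feature, measures how many standard errors the mean of a recent window sits from the training-time baseline, and flags drift past a threshold. The function names, the threshold of 3.0, and the sample values are hypothetical; real systems often use richer tests over whole distributions.

```python
import math
import statistics


def mean_shift_zscore(baseline, recent):
    """How many standard errors the recent mean sits from the baseline mean."""
    base_mean = statistics.mean(baseline)
    base_std = statistics.stdev(baseline)
    if base_std == 0:
        raise ValueError("baseline has no variance; z-score is undefined")
    standard_error = base_std / math.sqrt(len(recent))
    return (statistics.mean(recent) - base_mean) / standard_error


def check_drift(baseline, recent, threshold=3.0):
    """Return the z-score and whether it exceeds the alert threshold."""
    z = mean_shift_zscore(baseline, recent)
    return {"z_score": z, "drifted": abs(z) > threshold}


# Hypothetical feature values seen at training time and in two recent windows
baseline = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2, 9.7, 10.0]
stable_window = [10.0, 10.1, 9.9, 10.2]
shifted_window = [11.5, 11.8, 11.2, 11.6]

stable_report = check_drift(baseline, stable_window)
shifted_report = check_drift(baseline, shifted_window)

for name, report in [("stable", stable_report), ("shifted", shifted_report)]:
    if report["drifted"]:
        # A real deployment would notify an on-call channel here
        print(f"ALERT: {name} window drifted (z={report['z_score']:.2f})")
```

Only the shifted window triggers the alert here. Run on a schedule, a check like this surfaces drift as it happens instead of leaving it to be found in the logs later.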

Lastly, the integration of this assortment of monitoring solutions with a centralized dashboard can provide a holistic view of all deployed models, enabling more effective management and optimization of machine learning operations. Don’t overlook the value of “all in one place.”

5. Ensure Model Security and Compliance

We will conclude with something that is widely understood from an application perspective, but often overlooked from a model point of view: security is of the utmost importance. Explicitly stated: don’t neglect compliance and security when deploying models. Security breaches can take many forms, and the implications of not protecting data are tremendous.

To safeguard machine learning models, there are a few simple steps you can take that can have a huge impact. Start by safeguarding models while they are at rest and during transit, and by limiting who has access to models and their inputs. Fewer potential points of access means fewer potential breach opportunities. The model’s architecture can also play a role in defense, so be aware of your model’s internal structure. Finally, regulatory compliance is another important issue and must be considered when deploying models for a whole host of reasons, from being a responsible player to avoiding sanction from authorities.
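One small piece of protecting a model at rest and in transit is verifying that the artifact you load is the one you released. The sketch below assumes the model is stored as a single file and that a SHA-256 digest was recorded at release time; the function names and the throwaway file are hypothetical. A checksum covers integrity only, so encryption and access control still need to be handled separately.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_hex):
    """True only if the file's digest matches the one recorded at release time."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex)


# Throwaway file standing in for a serialized model
with tempfile.NamedTemporaryFile(delete=False, suffix=".bin") as tmp:
    tmp.write(b"pretend these bytes are model weights")
    model_path = tmp.name

recorded_digest = sha256_of_file(model_path)  # would be stored at release time
ok_before = verify_artifact(model_path, recorded_digest)

with open(model_path, "ab") as f:  # simulate tampering or corruption
    f.write(b"!")
ok_after = verify_artifact(model_path, recorded_digest)

os.remove(model_path)
print("intact file verified:", ok_before)
print("modified file verified:", ok_after)
```

A serving process could refuse to load any artifact for which `verify_artifact` returns `False`, which closes one avenue for a tampered model to reach production.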

Summary

Deploying machine learning models involves several key practices. Optimization helps models run faster and with fewer resources. Containerization makes models portable across systems, both now and at scale. Continuous integration and continuous deployment help keep model updates reliable and quick to ship. And monitoring, together with security and compliance, helps keep models accurate, protected, and available to their users.

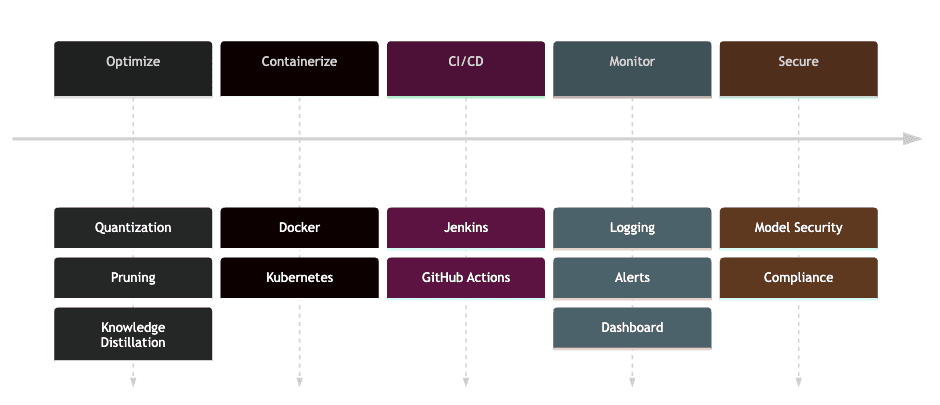

Development to Production: A timeline with example tasks and tools

Visualization created by Author

If you keep these practices at the forefront of your mind before deployment, your machine learning models will stand their best chance of achieving the results necessary for success.