Three.js has been gradually rolling out the new WebGPURenderer, built on WebGPU, while also introducing its node system and the Three Shading Language (TSL). It’s a lot to unpack, and although it’s not fully production-ready yet, it shows great promise.

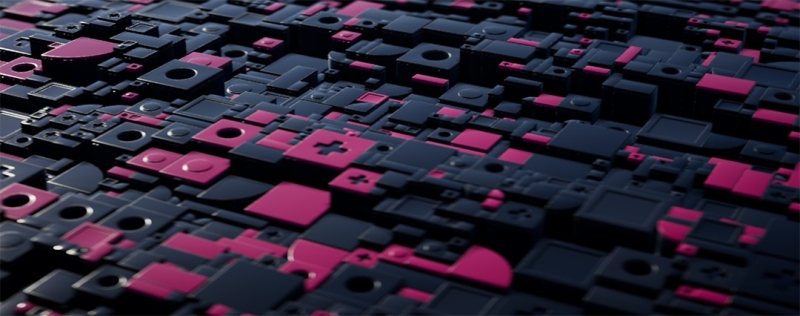

In this article, I’ll walk you through an experimental Three.js demo using the new WebGPURenderer, BatchedMesh, and some playful post-processing effects to push the limits of real-time 3D on the web.

The Demo

This demo highlights the potential of Three.js’s latest features by showcasing:

- The BatchedMesh object, which renders a fixed maximum number of objects that share one material but can use different geometries

- Ambient occlusion and depth of field post-processing passes

- Some light use of TSL

In the demo, the BatchedMesh is randomly composed and then animated along fractal noise with interactive pointer controls, plus a light-to-dark mode switch with additional animations.

WebGPURenderer

WebGPURenderer is the evolution of the WebGLRenderer in Three.js. It aims to be a unified interface for both WebGL 2 and WebGPU back-ends within a streamlined package. Many features from the existing renderer have already been ported, with appropriate fallbacks depending on the user’s device.

The BatchedMesh works with both WebGLRenderer and WebGPURenderer, but I encountered some fallback issues on certain browsers. Additionally, I wanted to use the new post-processing tools, so this demo runs exclusively on WebGPU.

It’s initialized exactly the same as the WebGLRenderer:

Demo.ts line 52

```typescript
import { WebGPURenderer, ACESFilmicToneMapping } from 'three/webgpu';

// inside the Demo class
this.renderer = new WebGPURenderer({ canvas: this.canvas, antialias: true });
this.renderer.setPixelRatio(1);
this.renderer.setSize(window.innerWidth, window.innerHeight);
this.renderer.toneMapping = ACESFilmicToneMapping;
this.renderer.toneMappingExposure = 0.9;
```

When working with WebGPU in Three.js, be sure to import from “three/webgpu”, as it is a separate build entry point rather than the regular “three” module.

BatchedMesh

The BatchedMesh is a recent addition to the Three.js toolkit, offering automatic optimization for rendering a large number of objects with the same material but different world transformations and geometries. Its interface and usage are very similar to that of InstancedMesh, with the key difference being that you need to know the total number of vertices and indices required for the mesh.
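Since those totals are fixed when the BatchedMesh is created, they have to be computed up front. Here is a minimal sketch, assuming every geometry is indexed and is registered once with addGeometry (instances added later reuse its storage), so the totals are summed over the distinct geometries, not over instances. `GeometryLike` and `computeBatchBudget` are hypothetical names; the type only mirrors the two BufferGeometry fields the helper reads, `attributes.position.count` and `index.count`.

```typescript
// Hypothetical type mirroring the BufferGeometry fields this helper reads.
interface GeometryLike {
  attributes: { position: { count: number } };
  index: { count: number } | null;
}

interface BatchBudget {
  vertexCount: number;
  indexCount: number;
}

// Sum vertex and index counts over the distinct geometries that will be
// passed to addGeometry(); instances added afterwards reuse that storage.
function computeBatchBudget(geoms: GeometryLike[]): BatchBudget {
  let vertexCount = 0;
  let indexCount = 0;
  for (const g of geoms) {
    if (g.index === null) {
      // This sketch assumes indexed geometry throughout.
      throw new Error("computeBatchBudget: expected indexed geometry");
    }
    vertexCount += g.attributes.position.count;
    indexCount += g.index.count;
  }
  return { vertexCount, indexCount };
}

// Plain objects standing in for two geometries.
const budget = computeBatchBudget([
  { attributes: { position: { count: 24 } }, index: { count: 36 } },
  { attributes: { position: { count: 10 } }, index: { count: 48 } },
]);
console.log(budget); // { vertexCount: 34, indexCount: 84 }
```

With real geometries, the two totals would play the role of totalV and totalI in the constructor call that follows.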

Demo.ts line 269

```typescript
import { BatchedMesh } from 'three/webgpu';

// totalV / totalI: total vertex and index counts across all geometries
const maxBlocks: number = this.blocks.length * 2; // top and bottom
this.blockMesh = new BatchedMesh(maxBlocks, totalV, totalI, mat);
this.blockMesh.sortObjects = false; // depends on your use case; here I've had better performance without sorting
```

There are two important options to balance for performance, both dependent on your specific use case:

- perObjectFrustumCulled: defaults to true, meaning each individual object is frustum culled

- sortObjects: also defaults to true; sorts the objects in the BatchedMesh to reduce overdraw (shading the same pixel several times when objects cover each other)
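To see what sorting buys and what it costs, here is a minimal sketch of the idea behind sorting opaque objects: draw the nearest ones first so the depth test can reject fragments they hide. It illustrates the concept only and is not the BatchedMesh implementation; `DrawItem` and `sortFrontToBack` are hypothetical names.

```typescript
interface DrawItem {
  id: number;
  // Distance from the camera along its view direction (larger = farther).
  depth: number;
}

// Returns a new draw order, nearest first. Drawing near objects first lets
// the depth test discard fragments of farther objects they cover, which is
// the overdraw reduction sorting aims for. The price is a CPU-side sort
// every frame in which the camera or the objects move.
function sortFrontToBack(items: readonly DrawItem[]): DrawItem[] {
  return [...items].sort((a, b) => a.depth - b.depth);
}

const order = sortFrontToBack([
  { id: 0, depth: 12 },
  { id: 1, depth: 3 },
  { id: 2, depth: 7 },
]).map((item) => item.id);
console.log(order); // [ 1, 2, 0 ]
```

When objects rarely overlap on screen, that per-frame sort can cost more than the fragment work it saves, which would be one plausible reason disabling it performed better here.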

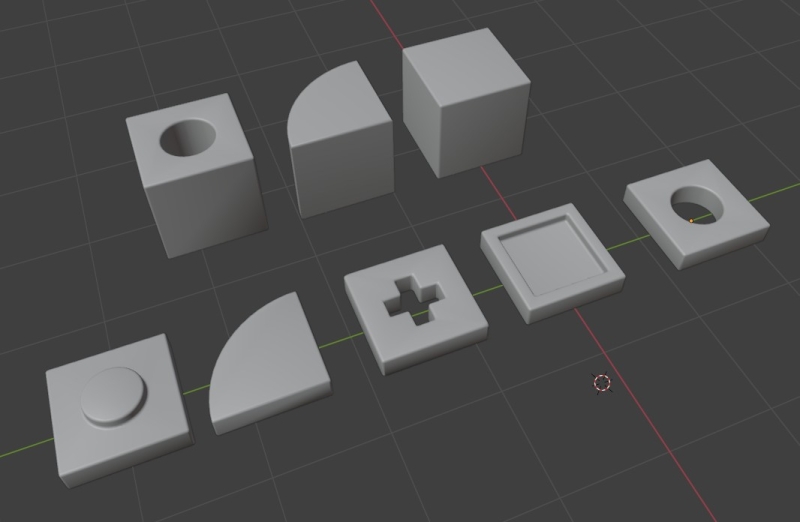

Once the object is created, we need to define what it contains. In the demo, I have a total of nine different geometries to display—three bottom parts and six top parts. These geometries need to be passed to the BatchedMesh so it knows what it is working with:

Demo.ts line 277

```typescript
const geomIds: number[] = [];
for (let i: number = 0; i < geoms.length; i++) {
  // all our geometries, each registered once; addGeometry returns its ID
  geomIds.push(this.blockMesh.addGeometry(geoms[i]));
}
```

The addGeometry function returns a unique ID that you can use to associate an object instance in the BatchedMesh with its geometry.
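The block generation itself is not shown in this article. As an illustration only, assuming the `geoms` array lists the three bottom geometries first and the six top geometries after them, a block's `typeBottom` and `typeTop` indices into `geomIds` could be drawn like this; `randomBlockTypes`, `BOTTOM_COUNT` and `TOP_COUNT` are hypothetical, not the demo's code.

```typescript
const BOTTOM_COUNT = 3; // assumed: geoms[0..2] are bottom parts
const TOP_COUNT = 6;    // assumed: geoms[3..8] are top parts

// Pick one bottom and one top geometry index for a block.
// `rand` must return values in [0, 1), like Math.random.
function randomBlockTypes(
  rand: () => number = Math.random,
): { typeBottom: number; typeTop: number } {
  const typeBottom = Math.floor(rand() * BOTTOM_COUNT);
  const typeTop = BOTTOM_COUNT + Math.floor(rand() * TOP_COUNT);
  return { typeBottom, typeTop };
}

// Each block then maps to geomIds[typeBottom] and geomIds[typeTop].
console.log(randomBlockTypes());
```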

Next, we need to specify which instance uses which geometry by simply linking the geometry IDs to the instance IDs:

Demo.ts line 283

```typescript
// one top and one bottom for each block
for (let i: number = 0; i < this.blocks.length; i++) {
  const block: ABlock = this.blocks[i];
  this.blockMesh.addInstance(geomIds[block.typeBottom]); // instance ID i * 2
  this.blockMesh.addInstance(geomIds[block.typeTop]);    // instance ID i * 2 + 1
  this.blockMesh.setColorAt(i * 2, block.baseColor);
  this.blockMesh.setColorAt(i * 2 + 1, block.topColor);
}
```

Here, I use the block definitions I previously generated to match each block with two instances in the mesh: one for the bottom geometry and another for the top. If you’re going to use the color attribute, you’ll also need to initialize it before rendering, as done here with setColorAt.
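The `i * 2` / `i * 2 + 1` indexing only holds because instances are added in a fixed order, two per block. This sketch makes that bookkeeping explicit under the same assumption the demo relies on, namely that addInstance hands out consecutive IDs starting at 0 when no instance has been deleted. `FakeBatch`, `instanceIdsForBlock` and `blockForInstance` are hypothetical stand-ins, and geometry IDs are used directly instead of going through `geomIds`.

```typescript
// Hypothetical stand-in for BatchedMesh.addInstance ID allocation,
// assuming IDs are handed out consecutively from 0.
class FakeBatch {
  private next = 0;
  readonly geometryOf: number[] = [];

  addInstance(geometryId: number): number {
    this.geometryOf.push(geometryId);
    return this.next++;
  }
}

// Instance IDs owned by block i: the bottom first, then the top.
function instanceIdsForBlock(i: number): { baseI: number; topI: number } {
  return { baseI: i * 2, topI: i * 2 + 1 };
}

// Inverse mapping, e.g. to find which block a picked instance belongs to.
function blockForInstance(instanceId: number): { block: number; isTop: boolean } {
  return { block: Math.floor(instanceId / 2), isTop: instanceId % 2 === 1 };
}

const blocks = [
  { typeBottom: 0, typeTop: 4 },
  { typeBottom: 2, typeTop: 7 },
];
const batch = new FakeBatch();
blocks.forEach((block, i) => {
  const bottomId = batch.addInstance(block.typeBottom);
  const topId = batch.addInstance(block.typeTop);
  const expected = instanceIdsForBlock(i);
  console.log(bottomId === expected.baseI && topId === expected.topI); // true
});
```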

In the render loop, we pass transformation matrices and colors to the mesh following the same indexing we pre-established, which is in the same order as the blocks array. The matrices are created by manipulating a dummy object.
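Because each block's dummy object is only rotated around Y, the matrix that updateMatrix() produces can be written out by hand, which makes the transform easier to reason about. This sketch assumes the column-major element order of Three.js Matrix4 and the translation × rotation × scale order of Matrix4.compose; it also assumes, hypothetically, that the bottom geometry spans y from 0 to 1 so that a Y scale of `height` sets its height. `composeYRotation` and `transformPoint` are illustrative helpers, not Three.js functions.

```typescript
type Vec3 = [number, number, number];

// Column-major 4x4 (Matrix4.elements order) for M = T * Ry(angle) * S.
function composeYRotation(position: Vec3, angle: number, scale: Vec3): number[] {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  const [sx, sy, sz] = scale;
  const [px, py, pz] = position;
  return [
    c * sx, 0, -s * sx, 0, // column 0: rotated, scaled X axis
    0, sy, 0, 0,           // column 1: Y axis, only scaled
    s * sz, 0, c * sz, 0,  // column 2: rotated, scaled Z axis
    px, py, pz, 1,         // column 3: translation
  ];
}

// Apply a column-major matrix to a point (w = 1).
function transformPoint(m: number[], p: Vec3): Vec3 {
  const [x, y, z] = p;
  return [
    m[0] * x + m[4] * y + m[8] * z + m[12],
    m[1] * x + m[5] * y + m[9] * z + m[13],
    m[2] * x + m[6] * y + m[10] * z + m[14],
  ];
}

// One hypothetical block: center (5, -2), footprint 2 x 3, height 4, no rotation.
const center = { x: 5, z: -2 };
const footprint = { x: 2, z: 3 };
const height = 4;
const rotation = 0;

// Bottom: on the ground, stretched to the block height.
const bottom = composeYRotation([center.x, 0, center.z], rotation, [footprint.x, height, footprint.z]);
// Top: raised by the block height, unit Y scale.
const top = composeYRotation([center.x, height, center.z], rotation, [footprint.x, 1, footprint.z]);

console.log(transformPoint(bottom, [0.5, 1, 0.5])); // [ 6, 4, -0.5 ]
console.log(transformPoint(top, [0, 0, 0]));        // [ 5, 4, -2 ]
```

Writing it out shows why the top piece needs only `position.y += block.height` and a unit Y scale: its origin lands exactly on the upper face of the stretched bottom piece.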

Demo.ts from line 375

```typescript
block = blocks[i];

// our indices for this block in the batched mesh, a top and a bottom
baseI = i * 2;
topI = i * 2 + 1;

...

// update the block mesh with matrices and colors
// first the bottom, color changes on the first ripple
dummy.rotation.y = block.rotation;
dummy.position.set(blockCenter.x, 0, blockCenter.y);
dummy.scale.set(blockSize.x, block.height, blockSize.y);
dummy.updateMatrix();
blockMesh.setMatrixAt(baseI, dummy.matrix);
blockMesh.getColorAt(baseI, tempCol);
tempCol.lerp(this.baseTargetColor, ripple);
blockMesh.setColorAt(baseI, tempCol);

// then the top, color changes on the second ripple
dummy.position.y += block.height;
dummy.scale.set(blockSize.x, 1, blockSize.y);
dummy.updateMatrix();
blockMesh.setMatrixAt(topI, dummy.matrix);
blockMesh.getColorAt(topI, tempCol);
tempCol.lerp(this.topTargetColors[block.topColorIndex], echoRipple);
blockMesh.setColorAt(topI, tempCol);
```

Post-Processing / TSL / Nodes

Alongside the rollout of WebGPURenderer, Three.js has introduced TSL, the Three Shading Language: a node-based JavaScript API that is compiled to WGSL or GLSL depending on the back-end, and that you can use to write shaders, including compute shaders.

In this demo, TSL is used primarily to define the rendering and post-processing pipeline. However, there are also numerous interactions with the new node-based materials, which are equivalent to standard Three.js materials (for example, MeshStandardNodeMaterial instead of MeshStandardMaterial).

Here, I aimed to create a tilt-shift effect to emphasize the playfulness of the subject and colors, so I implemented a strong depth of field effect with dynamic parameters updated each frame using a simple auto-focus approximation. I also added extra volume with screen-space ambient occlusion, a vignette effect, and some anti-aliasing.
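A rough mental model of the depth of field pass helps when tuning it for a tilt-shift look: blur grows with the distance between a fragment's depth and the focus distance, scaled by the aperture and capped at a maximum. The sketch below is that simplified model, written in the spirit of the classic bokeh shader's `clamp(factor * aperture, -maxblur, maxblur)`; it is an assumption for illustration, not the DepthOfFieldNode source, and `blurAmount` plus the sample values are hypothetical.

```typescript
// Simplified depth-of-field model (assumed):
// blur = min(|depth - focus| * aperture, maxBlur)
function blurAmount(depth: number, focus: number, aperture: number, maxBlur: number): number {
  return Math.min(Math.abs(depth - focus) * aperture, maxBlur);
}

// Focus placed at 85% of a hypothetical camera distance of 40.
const camDist = 40;
const focus = camDist * 0.85;

// Sharp at the focus distance, increasingly blurred in front of and
// behind it, until the maxBlur cap is reached.
for (const depth of [20, 34, 50, 120]) {
  console.log(depth, blurAmount(depth, focus, 0.0005, 0.01));
}
```

The narrow sharp band around the focus distance, with everything nearer and farther softened, is what produces the miniature look.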

Everything is defined in a few lines of TSL.

Demo.ts line 114

```typescript
const scenePass = pass(this.scene, this.camera);

// multiple render targets: color output plus view-space normals for AO
scenePass.setMRT(mrt({
  output: output,
  normal: transformedNormalView
}));

const scenePassColor = scenePass.getTextureNode('output');
const scenePassNormal = scenePass.getTextureNode('normal');
const scenePassDepth = scenePass.getTextureNode('depth');

// ambient occlusion from depth and normals
const aoPass = ao(scenePassDepth, scenePassNormal, this.camera);

...

// darken the scene color by the AO factor
const blendPassAO = aoPass.getTextureNode().mul(scenePassColor);

// depth of field driven by view-space Z and the effectController uniforms
const scenePassViewZ = scenePass.getViewZNode();
const dofPass = dof(blendPassAO, scenePassViewZ, effectController.focus, effectController.aperture.mul(0.00001), effectController.maxblur);

// radial vignette: 1 at the center, darker toward the edges
const vignetteFactor = clamp(viewportUV.sub(0.5).length().mul(1.2), 0.0, 1.0).oneMinus().pow(0.5);

// apply the vignette, then FXAA as the final output
this.post.outputNode = fxaa(dofPass.mul(vignetteFactor));
```

Many of the functions used are nodes of various types (e.g., pass, transformedNormalView, ao, viewportUV), and the library already provides a lot of them. I encourage everyone to explore the Three.js source code, read the TSL documentation, and browse the provided examples.

Some notable points:

- viewportUV returns the normalized viewport coordinates, which is very handy.

- The properties of effectController are defined as uniform() nodes for use in the shader. When their .value is updated at runtime, the shader uses the new value on the next frame without being rebuilt.

- Most common GLSL operations have a TSL equivalent that you can chain with other nodes (e.g., clamp, mul, pow, etc.).
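To make the chained node expressions concrete, here is a CPU mirror of the per-pixel math for the AO blend and the vignette. It is a sketch for checking values by hand, not how TSL evaluates anything; `vignette`, `shade` and `clamp` are hypothetical helpers, and DOF and FXAA are left out. In the demo, AO is applied before DOF and the vignette after it; since both are plain multiplications, this sketch simply multiplies them.

```typescript
type RGB = [number, number, number];

const clamp = (x: number, lo: number, hi: number): number => Math.min(Math.max(x, lo), hi);

// CPU mirror of:
// clamp(viewportUV.sub(0.5).length().mul(1.2), 0.0, 1.0).oneMinus().pow(0.5)
function vignette(u: number, v: number): number {
  const dist = Math.hypot(u - 0.5, v - 0.5);
  return Math.pow(1 - clamp(dist * 1.2, 0, 1), 0.5);
}

// Scene color darkened by the AO factor, then by the vignette.
function shade(color: RGB, ao: number, u: number, v: number): RGB {
  const k = ao * vignette(u, v);
  return [color[0] * k, color[1] * k, color[2] * k];
}

console.log(vignette(0.5, 0.5));        // 1 at the center
console.log(vignette(0, 0).toFixed(3)); // "0.389" in the corners
```

Since the farthest point from the center in UV space is about 0.707 away, the 1.2 factor never reaches the clamp, so the corners darken to roughly 39% rather than to black.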

In the render loop, I adjust the focus and aperture values with simple damping (a per-frame lerp toward a target derived from the camera distance) to simulate an auto-focus effect.
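Each call to MathUtils.lerp(current, target, t) with a constant t closes the same fraction of the remaining gap every frame, so the value approaches its target exponentially. The sketch below shows that behavior and, as a swapped-in alternative that is not the demo's code, a frame-rate-independent factor of the form 1 − e^(−λ·dt); `lerp` and `dampFactor` are local helpers, and the camera distance is a hypothetical value.

```typescript
// Same formula as MathUtils.lerp(x, y, t): x + (y - x) * t.
const lerp = (x: number, y: number, t: number): number => x + (y - x) * t;

// Frame-rate-independent factor for elapsed time dt (seconds).
function dampFactor(lambda: number, dt: number): number {
  return 1 - Math.exp(-lambda * dt);
}

// Focus chasing camDist * 0.85 after a sudden camera move.
const camDist = 40;
const target = camDist * 0.85;
let focus = 0;
for (let frame = 0; frame < 60; frame++) {
  focus = lerp(focus, target, 0.05);
}

// After n frames the remaining gap is 0.95^n of the initial one.
console.log((target - focus) / target); // about 0.046 after 60 frames
```

At 60 fps, a per-frame factor of 0.05 corresponds to λ = −60 · ln(0.95) ≈ 3.08. Three.js also provides MathUtils.damp(x, y, lambda, dt), which applies the same exponential formula, if the effect should behave identically at other frame rates.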

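Demo.ts line 455

```typescript
// ease the focus toward 85% of the camera distance
this.effectController.focus.value = MathUtils.lerp(this.effectController.focus.value, this.camDist * .85, .05);
// widen the aperture (stronger blur) as the camera gets closer
this.effectController.aperture.value = MathUtils.lerp(this.effectController.aperture.value, 100 - this.camDist * .5, .025);
```

Conclusion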

When writing the original code for this demo, I was simply experimenting with the latest Three.js release, trying to imagine a web use case for the BatchedMesh—hence the slightly over-the-top light/dark mode toggle.

With this short article, I hope to encourage some of you to dive into Three.js and explore these new features. While the WebGPURenderer isn’t yet as compatible with all browsers and devices as the original, we’re getting there.

Thanks for reading!