The transformer is a machine learning model architecture that uses the attention mechanism to process data. Many models are based on this architecture, such as GPT, BERT, T5, and Llama, and many of them are similar to each other. While you can build your own models in Python using PyTorch or TensorFlow, Hugging Face released a library that makes it easier to create these models and provides many pre-trained models you can use. The library’s name is simply transformers. In this article, you will learn how to use this library.

Let’s get started.

A Gentle Introduction to Transformers Library

Photo by sdl sanjaya. Some rights reserved.

What is the transformers library?

The transformers library is a Python library that provides a unified interface for working with different transformer models. It is not exhaustive, but it defines many well-known open-source models, like GPT, BERT, T5, and Llama. While it is not the official library for many of these models, the architectures are the same. The beauty of this library is that it unifies the interface across models. For example, GPT and T5 can both generate text; you don’t need to know the architectural differences between the two to use them via the same function call.

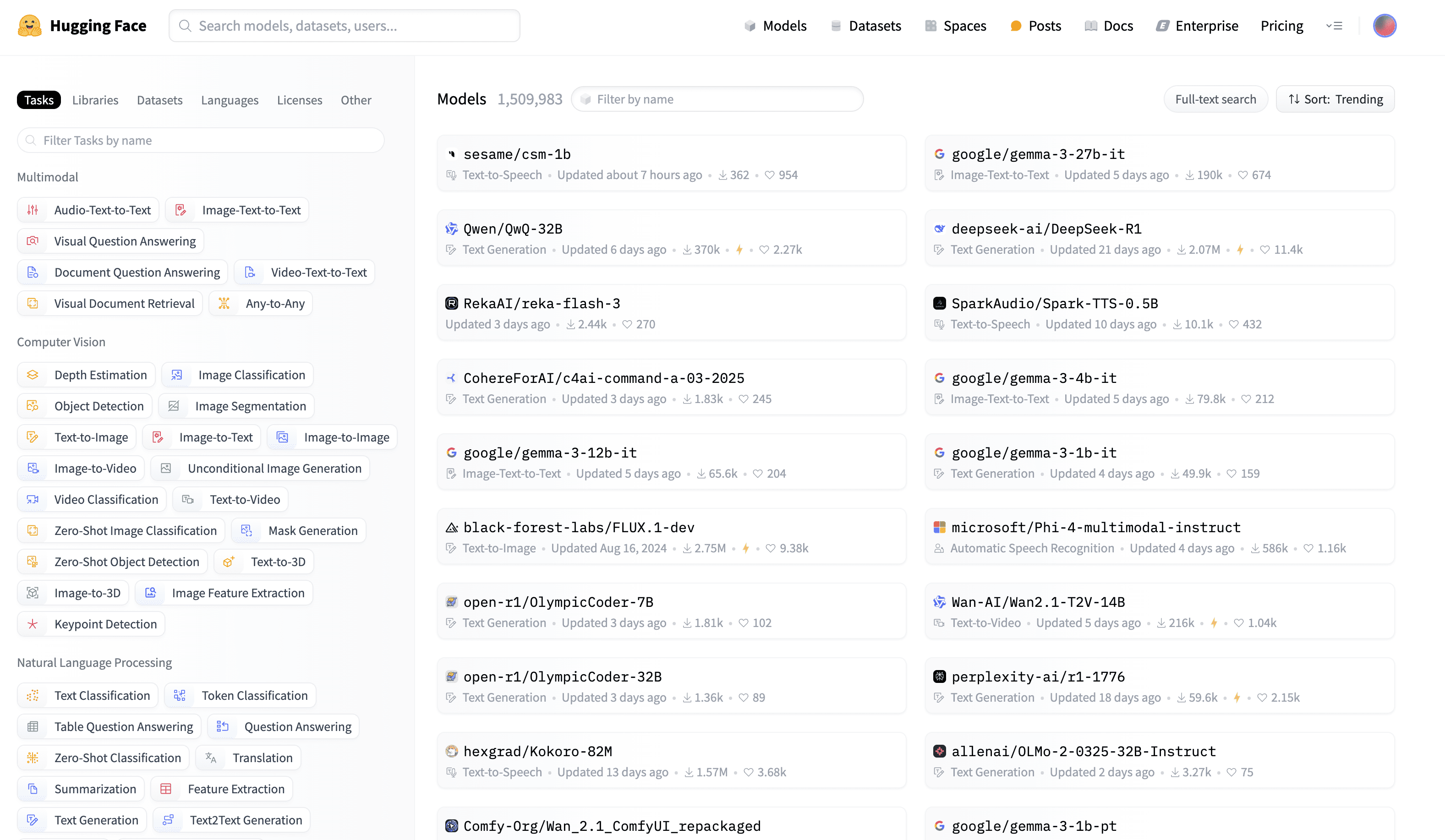

Hugging Face Hub is a repository of resources for machine learning, including the pre-trained models. As a user of the models, you can download and use them in your projects without knowing much about the mechanisms behind them. If you want to use a model, such as GPT, you can simply find the name of the model in the hub and use it in the transformers library. The library will download the model, figure out what architecture it is using, then create the model and load the weights for you, all in one line of code.

Hugging Face Hub

The transformers library makes it easy to use the transformer models in your projects without requiring you to become an expert in these machine learning models.
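To make the "figure out what architecture it is using" step more concrete, here is a minimal sketch of the idea using only the Python standard library. It is not the library's actual implementation: the classes ToyBertModel and ToyGPT2Model, the MODEL_REGISTRY dictionary, and the toy_auto_model function are all hypothetical names invented for illustration. The only detail borrowed from real models is that their config file carries a model_type field.

```python
import json

# Toy stand-ins for architecture-specific classes (hypothetical, not the real ones).
class ToyBertModel:
    def __init__(self, config):
        self.config = config

class ToyGPT2Model:
    def __init__(self, config):
        self.config = config

# Hypothetical registry: maps the "model_type" field of a config to a class.
MODEL_REGISTRY = {"bert": ToyBertModel, "gpt2": ToyGPT2Model}

def toy_auto_model(config_json):
    """Read the config, look up the architecture, and build the matching class."""
    config = json.loads(config_json)
    model_type = config["model_type"]
    if model_type not in MODEL_REGISTRY:
        raise ValueError(f"Unknown model type: {model_type}")
    return MODEL_REGISTRY[model_type](config)

# A made-up, heavily trimmed config of the kind stored alongside model weights.
model = toy_auto_model('{"model_type": "bert", "hidden_size": 768}')
print(type(model).__name__)  # ToyBertModel
```

The caller never names the concrete class. It is chosen from the metadata stored with the model, which is why one function call can serve many architectures.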

Installation

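The installation is straightforward. You can install the library using pip by running the command pip install transformers in your terminal.

This installs the library and its core dependencies. The supported models are usually built with one of three frameworks: PyTorch, TensorFlow, and JAX/Flax. The framework itself is a separate package, so install the one you plan to use (for example, PyTorch) as well. You can decide which one to use when calling the library. Most of the models default to PyTorch.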

This is enough to start a project, such as creating and training a new model on your own data. However, some of the pre-trained models on Hugging Face Hub would require you to have a Hugging Face account. You can sign up for one for free. Moreover, some models are “gated,” meaning you need to request access to use them. Using these models from the transformers library would require you to authenticate yourself with an access token.

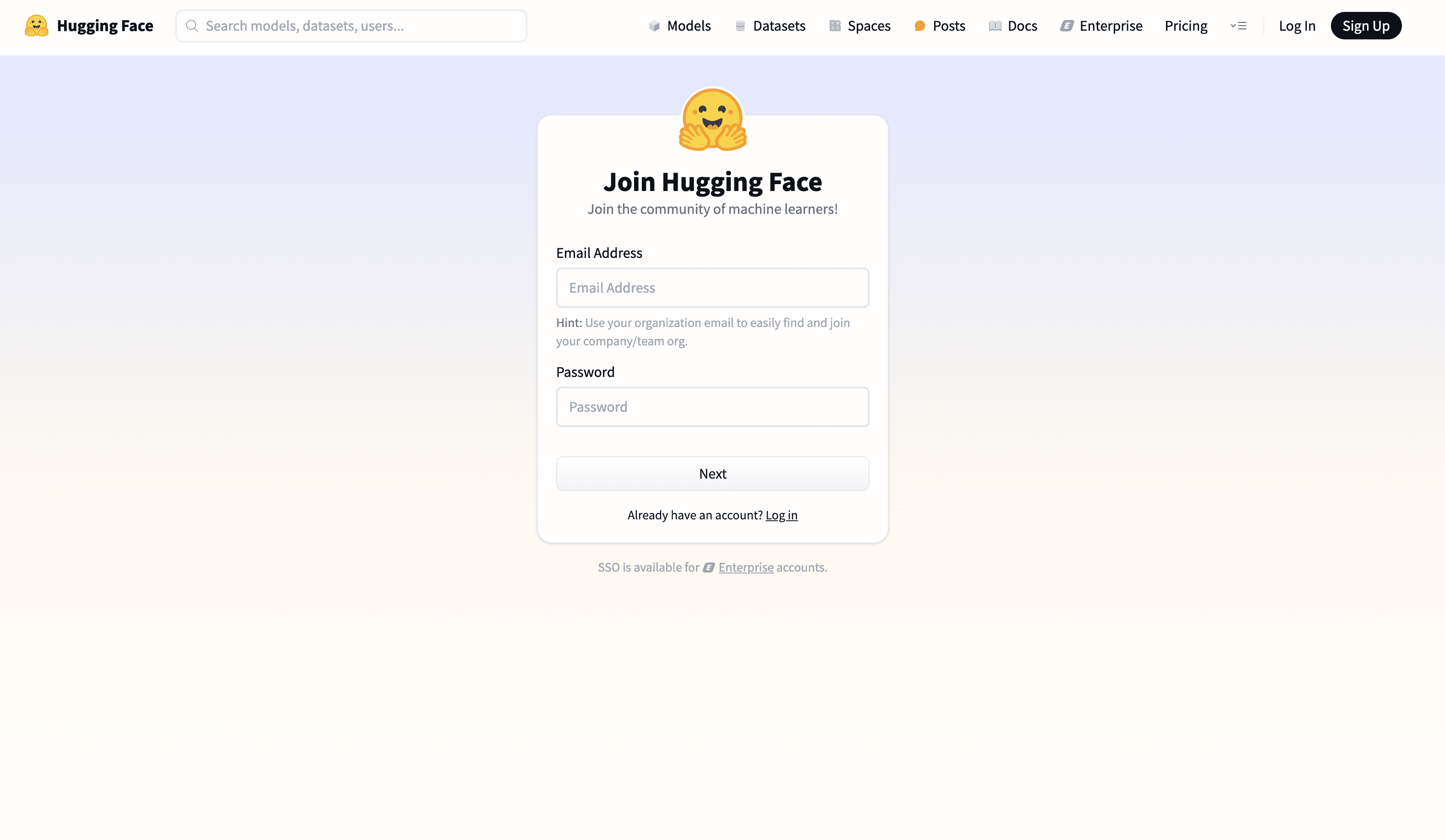

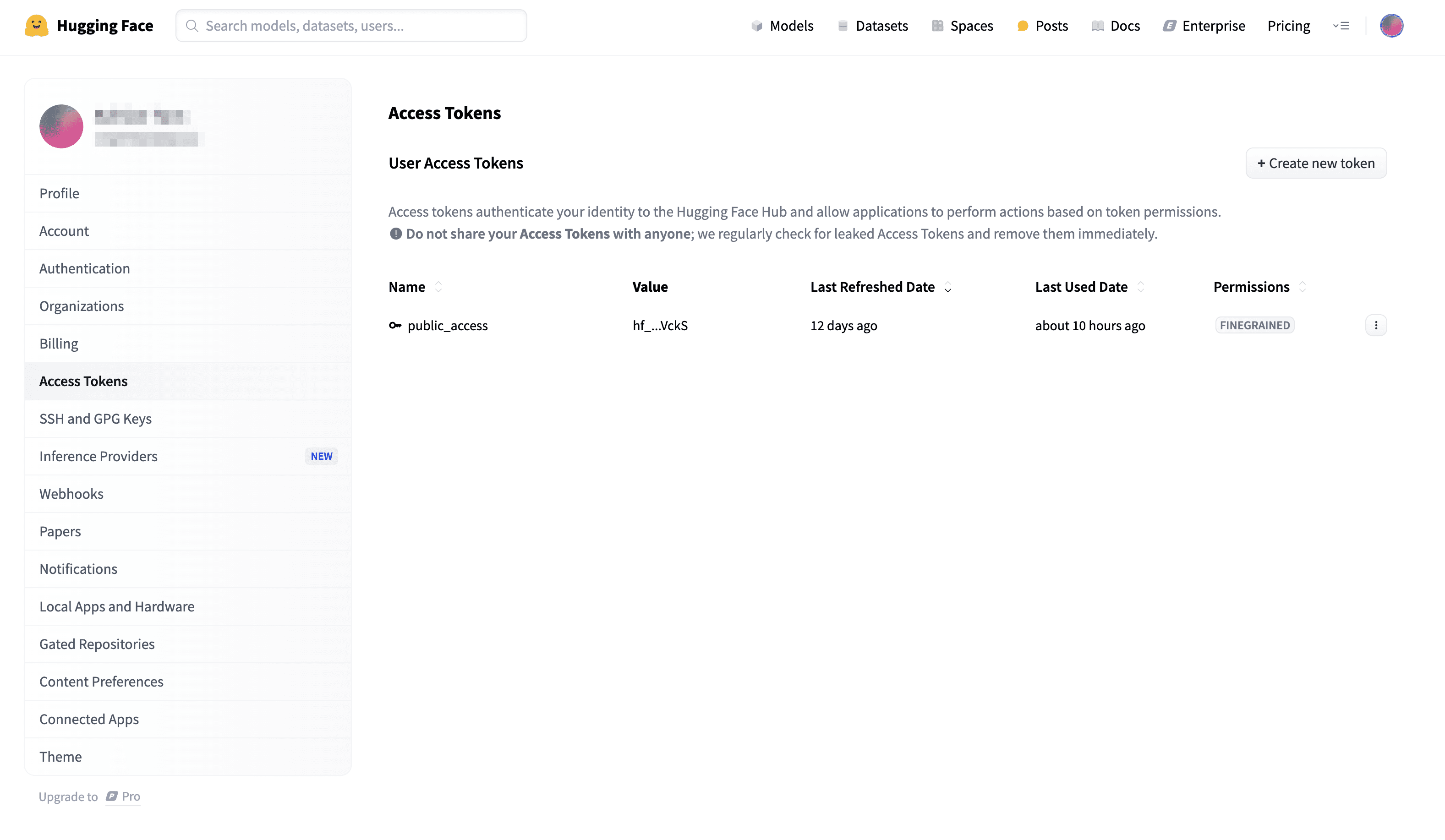

To sign up for a Hugging Face account, visit https://huggingface.co/join. Once you have an account, you can create an access token on the access tokens page. Save the token somewhere safe as soon as you create it, because it is displayed only once.

Signing up for an account

Creating an access token to use with the transformers library

Using the library

The library is very easy to use. Let’s see how to use it to load a pre-trained model.

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and the model that match this model ID
model_id = "bert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

# Convert the text into a dict of PyTorch tensors
input_ids = tokenizer("Hello, world!", return_tensors="pt")

# Run the model without tracking gradients
with torch.no_grad():
    outputs = model(**input_ids)

# Pick the highest-scoring token at each position and decode to text
output_tokens = outputs.logits.argmax(dim=-1)
output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)
print(output_text)
```

This is not the shortest code to use the transformers library, but it shows the main idea. It creates a tokenizer that converts the input text into integer tokens, then creates a model to process these tokens and return the output.

All pre-trained models are identified by a model ID. When you create a tokenizer for a pre-trained model, the library checks the model’s config to instantiate the correct tokenizer object. The same happens for the model. Therefore, you just need to use AutoTokenizer and AutoModel (or a task-specific variant such as AutoModelForCausalLM, as above) instead of the specific classes, such as BertTokenizer and BertModel. Knowing how a transformer model usually works, you should expect the core model to take the input tokens and output logit tensors, with one score per vocabulary entry at each position. Therefore, the code above uses argmax to convert the logits to token IDs and converts the IDs to a string using the tokenizer’s decode method.
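The argmax step can be illustrated without any model at all. The sketch below uses plain Python lists. The five-entry vocabulary and the logit values are made up for illustration, and a real model would produce a tensor with tens of thousands of scores per position.

```python
# Toy vocabulary (made up): the position in the list is the token ID.
vocab = ["[PAD]", "hello", "world", ",", "!"]

# Made-up logits for a sequence of 3 positions; each row scores every vocabulary entry.
logits = [
    [0.1, 2.5, 0.3, 0.0, -1.0],
    [0.0, 0.2, 0.1, 3.1, 0.4],
    [-0.5, 0.3, 4.2, 0.1, 0.9],
]

def argmax(row):
    """Return the index of the largest value in a list."""
    return max(range(len(row)), key=lambda i: row[i])

# One token ID per position, then map each ID back to its string.
token_ids = [argmax(row) for row in logits]
tokens = [vocab[i] for i in token_ids]
print(token_ids)  # [1, 3, 2]
print(tokens)     # ['hello', ',', 'world']
```

Taking the highest score at every position is the simplest possible decoding rule. It is enough to show how logits become text, which is all the example above needs.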

However, you must provide the access token if you want to use a gated model with the above code. The way to set up the access token is to use some environment variables. You can find all environment variables that matter to the transformers library in the documentation; the most important ones are:

- HF_TOKEN: The access token.
- HF_HOME: The directory to store the cached models.

You should either set these environment variables before you run your script or set them in the script as follows:

```python
import os

# Set these before importing transformers
os.environ["HF_TOKEN"] = "hf_YourTokenHere"
os.environ["HF_HOME"] = "~/.cache/huggingface"

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# A gated model: access must be granted to your account first
model_id = "meta-llama/Llama-3.2-1B"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

input_ids = tokenizer("Hello, world!", return_tensors="pt")
with torch.no_grad():
    outputs = model(**input_ids)

output_tokens = outputs.logits.argmax(dim=-1)
output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)
print(output_text)
```

Note that the environment variables should be set in os.environ before you import the transformers library. The model used above, meta-llama/Llama-3.2-1B, is an example of a gated model: you need to request access to it and authenticate yourself with an access token before using it.
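If you set these variables from Python, a small helper can keep the setup in one place and avoid overwriting values already exported in your shell. The following is a sketch under stated assumptions: configure_hf_env is a hypothetical helper name, not part of the transformers library, and the token string is the same placeholder used above. It is demonstrated on a plain dictionary so that running it does not touch your real environment.

```python
import os

def configure_hf_env(token=None, cache_dir=None, env=os.environ):
    """Set HF_TOKEN and HF_HOME in `env` without overwriting existing values."""
    if token is not None:
        env.setdefault("HF_TOKEN", token)
    if cache_dir is not None:
        # Expand "~" to the user's home directory before storing the path.
        env.setdefault("HF_HOME", os.path.expanduser(cache_dir))
    # Report which variables are present, never the token value itself.
    return {name: name in env for name in ("HF_TOKEN", "HF_HOME")}

# Demonstrated on a plain dict so the real environment is left untouched.
demo_env = {}
status = configure_hf_env(
    token="hf_YourTokenHere",
    cache_dir="~/.cache/huggingface",
    env=demo_env,
)
print(status)  # {'HF_TOKEN': True, 'HF_HOME': True}
```

In a real script, you would call configure_hf_env with the default env argument at the very top, before the line that imports transformers. Hard-coding a token in source code is risky if the file is ever shared, so exporting HF_TOKEN in your shell is often the safer choice.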

Pipeline and Tasks

If the pre-trained model can help you work on a text generation task, why would you care about the details, such as the tokenizer and the logit output? Therefore, the transformers library provides a pipeline function to perform different tasks with a pre-trained model, hiding all such details. Below is an example of using the pipeline function to perform a sentiment analysis task:

```python
from transformers import pipeline

model_id = "distilbert-base-uncased-finetuned-sst-2-english"
classifier = pipeline("sentiment-analysis", model=model_id)
result = classifier("Machine Learning Mastery is a great website for learning machine learning.")
print(result)
```

The pipeline function creates the model and tokenizer, loads the pre-trained weights, and runs the task you specified, all in one line of code. You don’t need to care about how the different components work together.

The first argument to the pipeline function is the task name. The library supports a fixed set of tasks, some of which are related to image and audio processing. The most common ones in the domain of text processing are:

- text-generation: To generate text in auto-complete style. This is probably the most commonly used task.
- sentiment-analysis: Sentiment analysis, usually to predict “positive” or “negative” sentiment for a given text. The task text-classification is the same as this task.
- zero-shot-classification: Zero-shot classification. That is, provide a sentence and a set of labels to the model and ask the model to predict the label that best describes the sentence.
- question-answering: Question answering. This is not to be confused with the text-generation task, which can generate answers to open-ended questions. This generates the answer for a given question based on the provided context.

You can launch a pipeline with just the task name. All tasks will have a default model. If you prefer to use a different model, you can specify the model ID, as in the example above.
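The four text tasks above expect slightly different inputs, which the sketch below collects in one place. The example sentences, labels, and the run_task helper are made up for illustration. The keyword arguments max_new_tokens, candidate_labels, question, and context are the ones these pipelines accept. The transformers import is placed inside the function so that nothing is downloaded until you actually call it.

```python
# Example call arguments for each text task; all texts are made up for illustration.
TASK_INPUTS = {
    "text-generation": {
        "args": ["The transformers library is"],
        "kwargs": {"max_new_tokens": 20},
    },
    "sentiment-analysis": {
        "args": ["I enjoyed this tutorial."],
        "kwargs": {},
    },
    "zero-shot-classification": {
        "args": ["The new GPU doubles the training speed."],
        "kwargs": {"candidate_labels": ["hardware", "cooking", "sports"]},
    },
    "question-answering": {
        "args": [],
        "kwargs": {
            "question": "Who released the transformers library?",
            "context": "Hugging Face released a library called transformers.",
        },
    },
}

def run_task(task):
    """Hypothetical helper: build a pipeline with its default model and run it."""
    from transformers import pipeline  # imported lazily; needs the library installed

    pipe = pipeline(task)  # the default model is downloaded on first use
    spec = TASK_INPUTS[task]
    return pipe(*spec["args"], **spec["kwargs"])

# Show which keyword arguments each task uses, without loading any model.
for task, spec in TASK_INPUTS.items():
    print(task, sorted(spec["kwargs"]))
```

Calling run_task("question-answering"), for example, would download the default model for that task and return its answer. The shape of the returned object differs from task to task, so print it first before relying on particular keys.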

If you’re interested, you can investigate the pipeline object to see what kind of models it is using. For example, the classifier object above uses a DistilBertForSequenceClassification model. You can check it using the following:

```python
...
print(classifier.model)
print(classifier.tokenizer)
```

The output will be:

```
DistilBertForSequenceClassification(
  (distilbert): DistilBertModel(
    (embeddings): Embeddings(
      (word_embeddings): Embedding(30522, 768, padding_idx=0)
      (position_embeddings): Embedding(512, 768)
      (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
      (dropout): Dropout(p=0.1, inplace=False)
    )
    (transformer): Transformer(
      (layer): ModuleList(
        (0-5): 6 x TransformerBlock(
          (attention): DistilBertSdpaAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
            (activation): GELUActivation()
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
      )
    )
  )
  (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
  (classifier): Linear(in_features=768, out_features=2, bias=True)
  (dropout): Dropout(p=0.2, inplace=False)
)
DistilBertTokenizerFast(name_or_path="distilbert-base-uncased-finetuned-sst-2-english", vocab_size=30522, model_max_length=512, is_fast=True, padding_side="right", truncation_side="right", special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True, added_tokens_decoder={
    0: AddedToken("[PAD]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
    100: AddedToken("[UNK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
    101: AddedToken("[CLS]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
    102: AddedToken("[SEP]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
    103: AddedToken("[MASK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
}
)
```

You can see from the output above that the model is DistilBertForSequenceClassification and the tokenizer is DistilBertTokenizerFast. You can also create the model and tokenizer directly using the code below:

```python
from transformers import DistilBertForSequenceClassification, DistilBertTokenizerFast

model_id = "distilbert-base-uncased-finetuned-sst-2-english"
model = DistilBertForSequenceClassification.from_pretrained(model_id)
tokenizer = DistilBertTokenizerFast.from_pretrained(model_id)
```

However, you would then need to process the input text and run the model yourself. In this respect, the pipeline function is a convenience.
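To give a sense of the work that remains after the model has run, here is a minimal sketch of the last step for a two-class sentiment model: turning raw logits into a label and a score. It uses only the standard library. The logit values are made up, and the id2label mapping is assumed to be the kind of mapping a classification model carries in its config. In a real script, the logits would come from the model’s output.

```python
import math

# Made-up raw scores (logits) for one sentence; a real model would produce these.
logits = [-3.2, 4.1]
# Label mapping of the kind a classification model's config carries (assumed here).
id2label = {0: "NEGATIVE", 1: "POSITIVE"}

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

# Pick the most probable class and report it with its probability.
probs = softmax(logits)
best = max(range(len(probs)), key=lambda i: probs[i])
result = [{"label": id2label[best], "score": probs[best]}]
print(result)
```

The list containing a label and a score mirrors the general shape of what the sentiment analysis pipeline prints. Tokenizing the input and running the forward pass are the other two steps you would have to write by hand.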


Summary

In this article, you have learned how to use the transformers library to load a pre-trained model and use it to perform a task. In particular, you learned:

- How to use the transformers library to create a model and use it to perform a task.

- How to use the pipeline function to perform different tasks with a pre-trained model.

- How to investigate the pipeline object to see what kind of models it is using.