Image by Author | Canva & DALL-E

Imagine setting up a powerful language model on your local machine. It runs smoothly, processes queries fast, and doesn’t rely on expensive cloud services. But there’s a catch. You can only access it from that one device. What if you want to use it from your laptop in another room? Or share it with a friend?

Running LLMs locally is becoming more common, but making them accessible across multiple devices isn’t always straightforward. This guide will walk you through a seamless way to enable remote access to your local LLMs using Tailscale, Open WebUI, and Ollama. By the end, you’ll be able to interact with your model from any device on your private Tailscale network, securely and without opening ports on your router. Let’s start!

Why Access Local LLMs Remotely?

LLMs confined to a single machine limit flexibility. Remote access allows you to:

- Interact with your LLM from any device

- Avoid running large models on weaker hardware

- Maintain control over your data and processing

Tools Required

- Tailscale: Secure VPN for device connectivity

- Docker: Containerization platform

- Open WebUI: Web-based interface for LLMs

- Ollama: Manages local LLMs

Step 1: Install & Configure Tailscale

- Download and install Tailscale on your local machine.

- Sign in with your Google, Microsoft, or GitHub account.

- Start Tailscale.

- If you’re using macOS, follow these steps to run Tailscale from the terminal. Otherwise, you can skip this section.

- Run the following command in the cmd/terminal:

This command connects your device to your Tailscale network (your "tailnet").
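On macOS, the Tailscale app bundles its command-line tool inside the application package instead of putting it on your PATH. A minimal sketch of the usual workaround, assuming the app is installed in the default /Applications location, is a shell alias:

```shell
# macOS only: point a "tailscale" alias at the CLI inside the app bundle.
# Assumes the app lives in /Applications; add this line to ~/.zshrc
# (the default macOS shell config) so new Terminal sessions keep it.
alias tailscale="/Applications/Tailscale.app/Contents/MacOS/Tailscale"
```

With the alias in place (or on Linux and Windows, where the CLI is already available), `tailscale up` is the standard Tailscale CLI command for connecting a device to your tailnet, and `tailscale status` lists the devices it can reach along with their 100.x.y.z addresses.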

Step 2: Install and Run Ollama

- Install Ollama on your local machine.

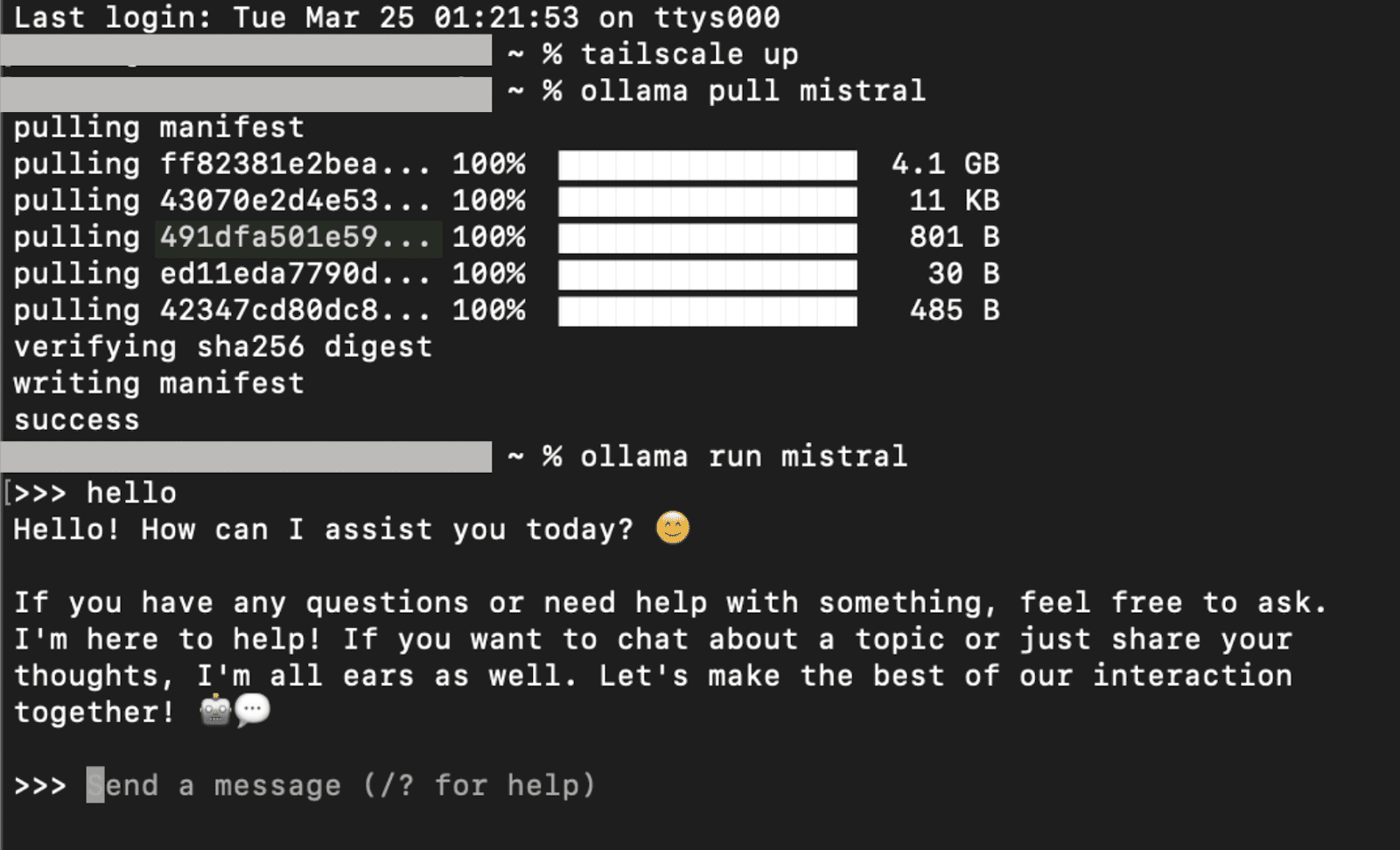

- Load a model by using the following command in the terminal/cmd:

For example, I am using mistral, so I will write:

- Run the model by using the following command:

In my case, it will be:

You’ll see the Mistral model running, and you can interact with it as shown:

To exit, simply type /bye.
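With the Ollama CLI, these two steps are `ollama pull <model>` (download a model) and `ollama run <model>` (start an interactive chat). The sketch below wraps both in a small helper and adds a check that Ollama's local HTTP API is answering on its default port, 11434, which is the address Open WebUI connects to in Step 3. The function names `chat_with` and `ollama_api_up` are hypothetical, and the check assumes `curl` is installed.

```shell
# Hypothetical helper: download (or refresh) a model, then open an
# interactive chat with it. Type /bye to leave the chat.
chat_with() {
  local model="${1:-mistral}"   # any model name from the Ollama library
  ollama pull "$model" && ollama run "$model"
}

# Hypothetical helper: succeed only if the Ollama server answers.
# GET /api/tags lists the locally available models; -f makes curl
# return an error on HTTP failures, -sS hides progress but keeps errors.
ollama_api_up() {
  local base_url="${1:-http://127.0.0.1:11434}"
  curl -fsS "${base_url}/api/tags" >/dev/null
}
```

For example, `chat_with mistral` runs `ollama pull mistral` followed by `ollama run mistral`, and `ollama_api_up && echo "Ollama is reachable"` confirms the server is running before you set up Open WebUI.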

Step 3: Install Docker & Set Up Open WebUI

- First, install the Docker Desktop application on your local machine.

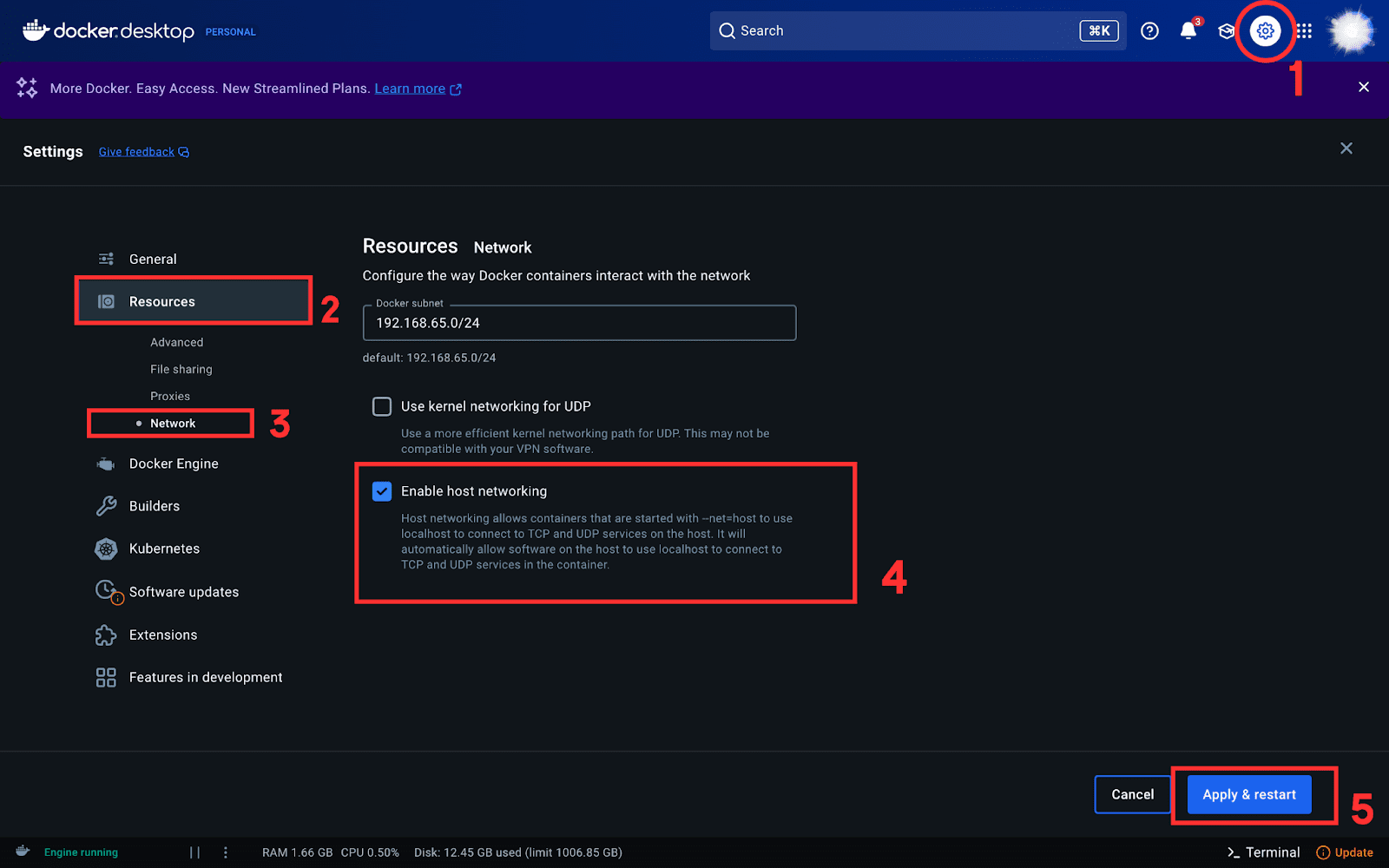

- Next, enable host networking: in Docker Desktop, open Settings, go to the Resources tab, and under Network select Enable host networking.

- Now, run the following command in the terminal/cmd:

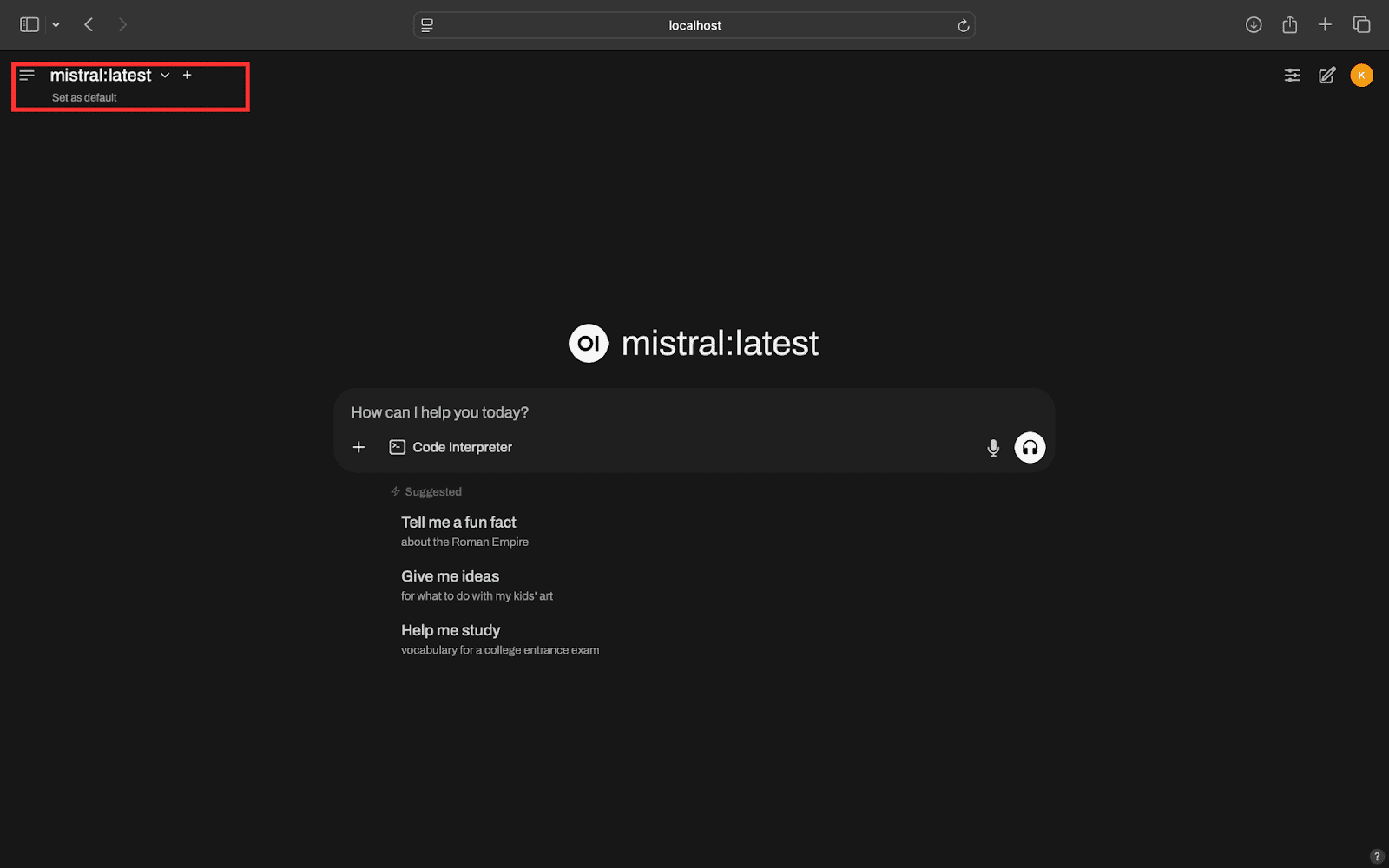

- Access Open WebUI by visiting http://localhost:8080.This will open Open WebUI, where you can interact with your local LLM/s. After authenticating yourself, you will see a screen similar to the one below:

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

This command runs Open WebUI in a Docker container and connects it to Ollama.

If you have pulled multiple models with Ollama, you can switch between them using the dropdown menu highlighted by the red rectangle.
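The docker run command in this step is easier to read, and to rerun later, when each flag sits on its own line. The sketch below wraps the same invocation in a shell function (the name `start_open_webui` is hypothetical) with comments describing what each option does; it assumes Ollama is listening on its default address, 127.0.0.1:11434.

```shell
# Hypothetical wrapper around the exact docker run command from Step 3.
start_open_webui() {
  # -d                  run the container in the background
  # --network=host      share the host's network: 127.0.0.1 inside the
  #                     container reaches Ollama, and Open WebUI's port
  #                     8080 is served directly on the host
  # -v open-webui:...   keep users, chats, and settings in a named volume
  # -e OLLAMA_BASE_URL  tell Open WebUI where the Ollama API lives
  # --name open-webui   a fixed name for later docker commands
  # --restart always    have Docker restart the container automatically,
  #                     e.g. when Docker Desktop starts
  docker run -d --network=host \
    -v open-webui:/app/backend/data \
    -e OLLAMA_BASE_URL=http://127.0.0.1:11434 \
    --name open-webui --restart always \
    ghcr.io/open-webui/open-webui:main
}
```

Running it a second time fails while a container named open-webui exists; `docker rm -f open-webui` removes the old container first, and the data in the open-webui volume is kept.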

Step 4: Access LLMs Remotely

- On your local machine, check the tailnet IP address by running the following command in the terminal/cmd:

- Install tailscale on your remote device by following STEP 1. If you are using an Android/iOS device as the remote device, you can skip the 5th point of STEP 1. Instead, open the Tailscale app and manually verify that the device is connected.

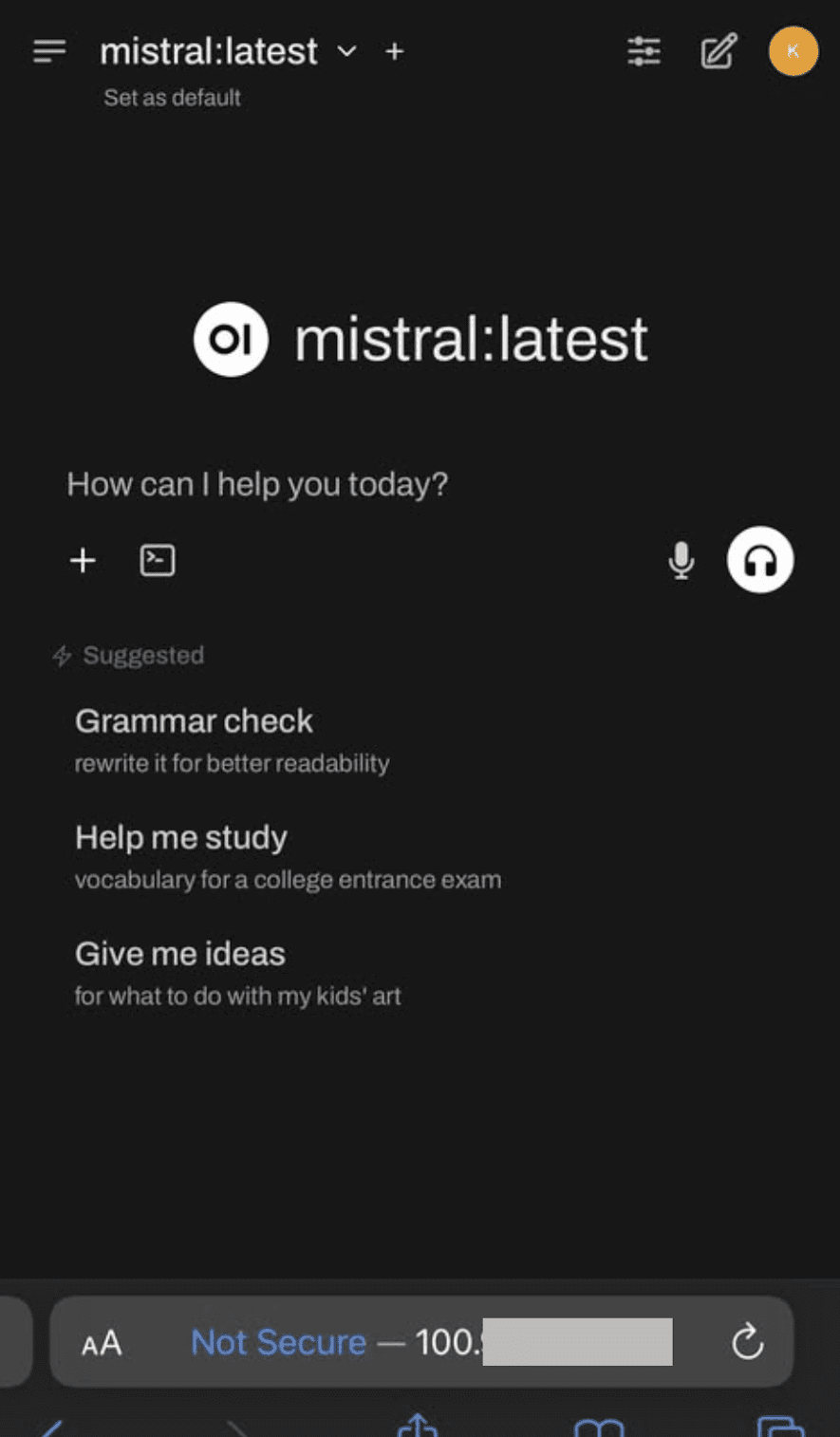

- On your remote device, enter http://<Tailnet-IP>:8080 in a browser to access your local LLM/s. For example, in my case I accessed the local LLM remotely using my mobile and was directed to the following screen:

This will return something like 100.x.x.x (your Tailnet IP).
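If the page does not load on the remote device, two quick terminal checks can narrow things down. The sketch below assumes the Tailscale CLI from Step 1 on the host machine and `curl` on the remote device (for example, another laptop on the same tailnet); both function names are hypothetical.

```shell
# Run on the machine hosting Ollama and Open WebUI: print the URL to open
# on the remote device. "tailscale ip -4" prints this device's IPv4
# tailnet address (in the 100.x.y.z range).
open_webui_url() {
  local ip
  ip="$(tailscale ip -4)" || return 1
  echo "http://${ip}:8080"
}

# Run on the remote device: print the HTTP status code Open WebUI returns.
# curl prints 000 when it cannot connect at all (e.g. Tailscale is down
# on one side, or nothing is listening on port 8080).
check_open_webui() {
  curl -sS -o /dev/null -w '%{http_code}\n' "http://$1:8080"
}
```

For example, `check_open_webui 100.x.y.z` (with your own tailnet IP) printing a real HTTP status such as 200 means Open WebUI is reachable over the tailnet, while 000 points to a connection problem rather than an issue with the model.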

Wrapping Up

With Tailscale, accessing your local LLM remotely is simple and secure. I’ve laid out a step-by-step guide to clarify the process for you, but if you run into any issues, feel free to drop a comment!

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.