Look out for AI-generated ‘TikDocs’ who exploit the public’s trust in the medical profession to drive sales of sketchy supplements

25 Apr 2025

Once confined to research labs, generative AI is now available to anyone, including those with ill intentions, who use AI tools not to spark creativity but to fuel deception. Deepfake technology, which can craft remarkably lifelike videos, images and audio, is increasingly becoming a go-to tool not just for celebrity impersonation stunts or efforts to sway public opinion, but also for identity theft and all manner of scams.

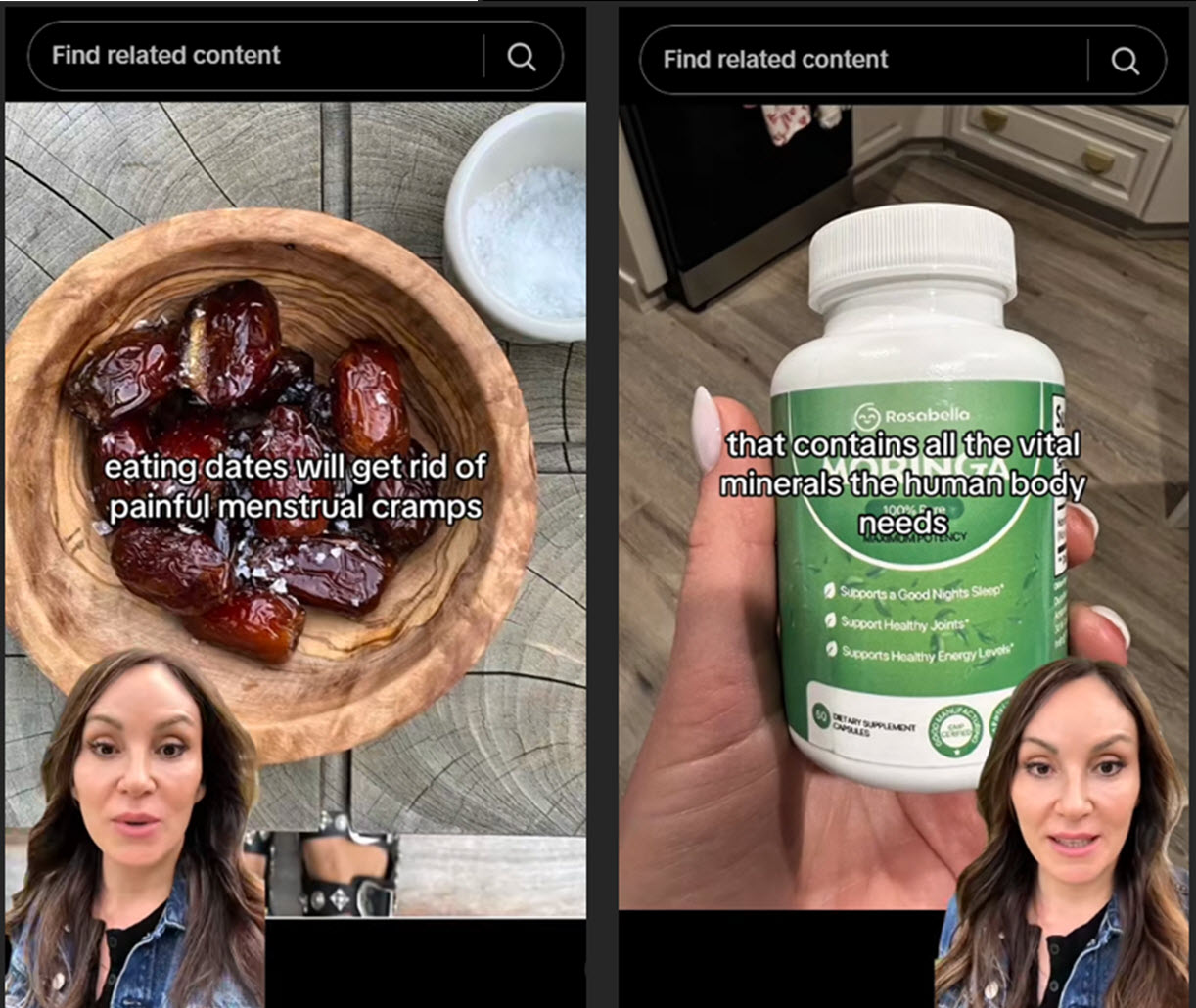

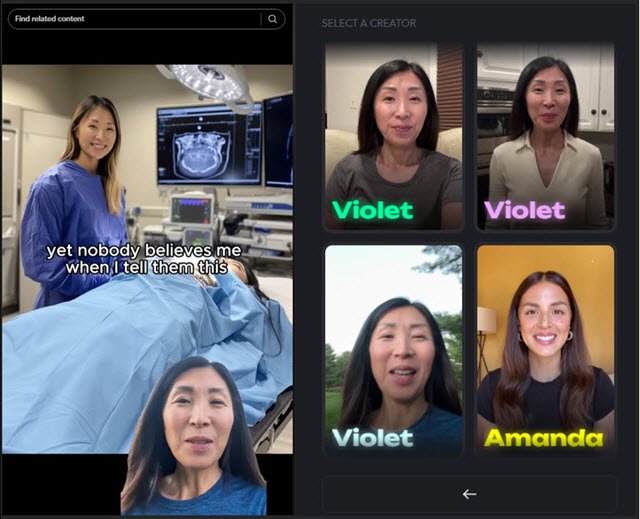

On social media platforms like TikTok and Instagram, the reach of deepfakes, along with their potential for harm, can be especially staggering. ESET researchers in Latin America recently came across a campaign on TikTok and Instagram where AI-generated avatars posed as gynecologists, dietitians and other health professionals to promote supplements and wellness products. These videos, often highly polished and persuasive, disguise sales pitches as medical advice, duping the unwary into questionable and potentially risky purchases.

Anatomy of a deception

Each video follows a similar script: a talking avatar, often tucked into a corner of the screen, delivers health or beauty tips. Dispensed with an air of authority, the advice leans heavily on “natural” remedies, nudging viewers toward specific products for sale. By cloaking their pitches in the guise of expert recommendations, these deepfakes exploit trust in the medical profession to drive sales, a tactic that is as unethical as it is effective.

In one case, the “doctor” touts a “natural extract” as a superior alternative to Ozempic, the drug celebrated for aiding weight loss. The video promises dramatic results and directs you to an Amazon page, where the product is described as “relaxation drops” or “anti-swelling aids”, with no connection to the hyped benefits.

Other videos increase the stakes further, pushing unapproved drugs or fake cures for serious illnesses, sometimes even hijacking the likeness of real, well-known doctors.

AI to the “rescue”

The videos are created with legitimate AI tools that allow anyone to submit short footage and transform it into a polished avatar. While this is a boon for influencers looking to scale their output, the same technology can be co-opted for misleading claims and deception. In other words, what may work as a marketing gimmick quickly morphs into a mechanism for spreading falsehoods.

We spotted more than 20 TikTok and Instagram accounts using deepfake doctors to push their products. One, posing as a gynecologist with 13 years of experience under her belt, was traced directly to the avatar library of the AI app used to create it. While such misuse violates the terms and conditions of common AI tools, it also highlights how easily they can be weaponized.

Ultimately, this may not be "just" about worthless supplements. The consequences can be more dire, as these deepfakes can erode confidence in online health advice, promote harmful "remedies" and delay proper treatment.

Keeping fake doctors at bay

As AI becomes more accessible, spotting these fakes becomes trickier, posing a broader challenge even for tech-savvy people. That said, here are a few signs that can help you spot deepfake videos:

- mismatched lip movements that don't sync with the audio, or facial expressions that feel stiff and unnatural,

- visual glitches, like blurred edges or sudden lighting shifts, also often betray the fakery,

- a robotic or overly polished voice is another red flag,

- also, check the account itself: new profiles with few followers or no history raise suspicion,

- beware of hyperbolic claims, like “miracle cures”, “guaranteed results” and “doctors hate this trick”, especially if they lack credible sources,

- always verify claims with trusted medical resources, avoid sharing suspect videos, and report misleading content to the platform.

As AI tools continue to advance, distinguishing between authentic and fabricated content will become harder rather than easier. This threat underscores the importance of both developing technological safeguards and improving our collective digital literacy, which will help protect us from misinformation and scams that could affect our health and financial well-being.