Image by Author | Canva

Whenever you take on a natural language task, the first step you will most likely need is tokenizing the text you are using. This step matters a great deal: the performance of downstream tasks depends heavily on it. Tokenizers for English-language datasets are easy to find online. But what if you want to tokenize non-English data? What if you cannot find a tokenizer for the specific type of data you are using? How would you tokenize it? This article explains exactly that.

We will tell you everything you need to know to train your own custom tokenizer.

What is Tokenization and Why is it Important?

The tokenization process takes a body of text and breaks it into pieces, or tokens, which can then be transformed into a format the model can work with. Because models can only process numbers, the tokens are converted into numeric ids. This process is critical because it changes raw content into a structured format that models can understand and analyze. Without tokenization, we would have no way to pass text to machine learning models, since they operate on numbers rather than on raw strings.
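As a rough illustration of the text-to-numbers idea, here is a toy sketch that splits on whitespace and assigns each distinct token an id. The function names and the tiny corpus are made up for this example, and real tokenizers learn subword vocabularies rather than splitting on spaces.

```python
# Toy illustration only: real tokenizers use learned subword vocabularies,
# not whitespace splitting.
def build_vocab(texts):
    """Assign an integer id to every distinct whitespace-separated token."""
    vocab = {"<unk>": 0}  # id 0 is reserved for unknown tokens
    for text in texts:
        for token in text.split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def encode(text, vocab):
    """Map each token to its id, falling back to <unk> for unseen tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.split()]


corpus = ["the cat sat", "the dog sat"]
vocab = build_vocab(corpus)
print(vocab)                          # token -> id mapping
print(encode("the bird sat", vocab))  # "bird" is unseen, so it maps to <unk>
```

The unseen word falling back to `<unk>` hints at why vocabulary coverage matters so much for languages a tokenizer was not trained on.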

Why Do You Need a Custom Tokenizer?

Training tokenizers from scratch is particularly important when you are working with non-English languages or specific domains. Standard pretrained tokenizers may not effectively handle the unique characteristics, vocabulary, and syntax of different languages or specialized characters. A new tokenizer can be trained to manage specific language features and terminology, which leads to better tokenization accuracy and improved model performance. Custom tokenizers also allow us to include domain-specific tokens and to handle rare or out-of-vocabulary words better. This improves the effectiveness of NLP models in applications involving many languages.
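One way to see the problem, sketched under the assumption that the tokenizer works at the byte level: Urdu letters each take two bytes in UTF-8, so a byte-level tokenizer whose merges were learned mostly from English text has few ready-made pieces for them and tends to fall back to many small tokens. The helper name below is made up for this example.

```python
def utf8_bytes_per_char(text):
    """Return (character, number of UTF-8 bytes) pairs for a string."""
    return [(ch, len(ch.encode("utf-8"))) for ch in text]


english = "time"
urdu = "وقت"  # the Urdu word for "time"

# Characters vs. bytes: ASCII letters take 1 byte, these Urdu letters take 2
print(len(english), len(english.encode("utf-8")))
print(len(urdu), len(urdu.encode("utf-8")))
print(utf8_bytes_per_char(urdu))
```

A tokenizer trained on Urdu text can learn merges that turn those byte sequences back into whole letters, syllables, and words.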

By now you know that tokenization is important and that you may need to train your own tokenizer. But how would you do that? The following sections explain the process step by step.

Step-by-Step Process to Train a Custom Tokenizer

Step 1: Install the Required Libraries

First, ensure you have the necessary libraries installed. You can install the Hugging Face transformers and datasets libraries using pip. The tokenizers library used in the later steps is installed as a dependency of transformers.

pip install transformers datasets
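If you want to confirm what ended up in your environment, a small check like the following can help. It is a minimal sketch using only the standard library; the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints the version of each library, or None if it is not installed
for name in ("transformers", "datasets", "tokenizers"):
    print(name, installed_version(name))
```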

Step 2: Prepare Your Dataset

To train a tokenizer, you’ll need a text dataset in the target language. Hugging Face’s datasets library offers a convenient way to load and process datasets.

from datasets import load_dataset

# Load a dataset

dataset = load_dataset('oscar', 'unshuffled_deduplicated_ur')

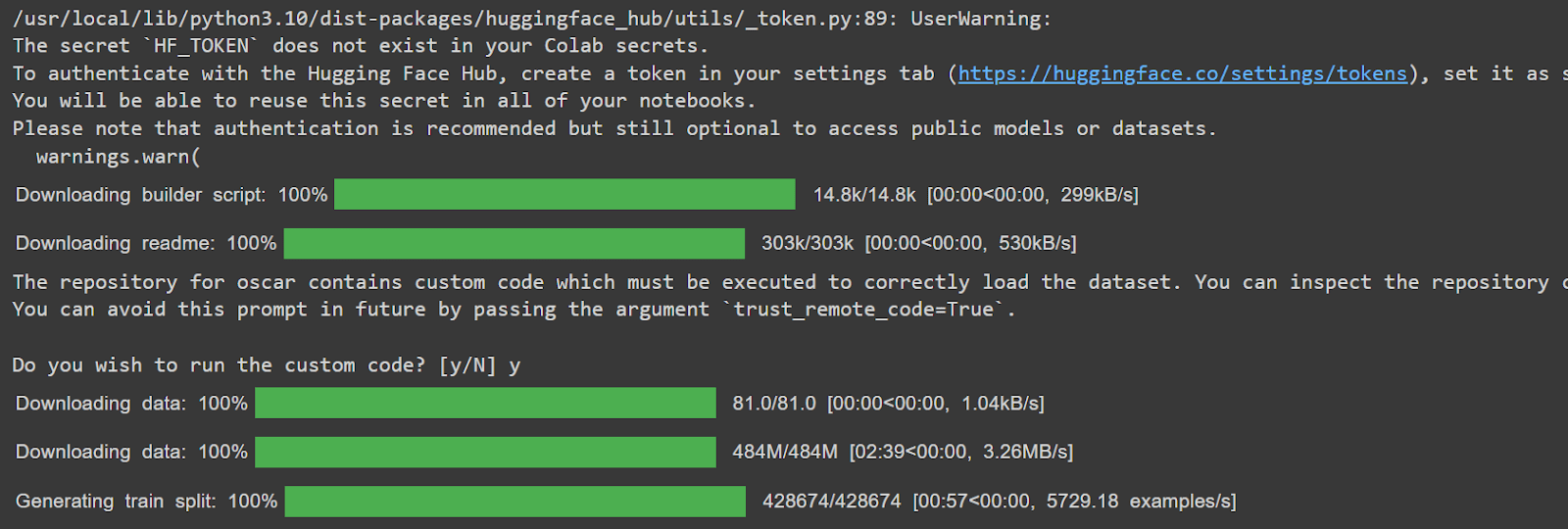

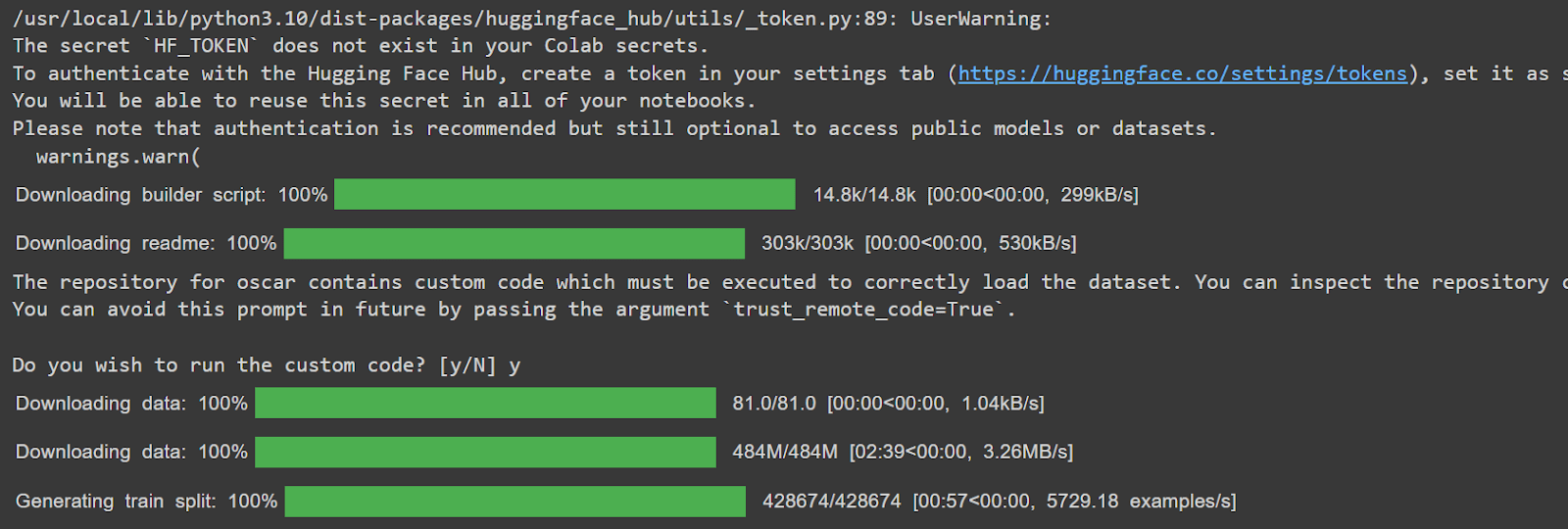

Output:

Note that you can load a dataset in any language in this step. One example from our Urdu dataset looks like this:

موضوعات کی ترتیب بلحاظ: آخری پیغام کا وقت موضوع شامل کرنے کا وقت ٹائٹل (حروف تہجی کے لحاظ سے) جوابات کی تعداد مناظر پسند کردہ پیغامات تلاش کریں.
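Web-crawled text often contains duplicates, stray whitespace, and very short fragments, so a light cleaning pass before training may help. The following is a minimal sketch, not part of the original workflow: the function name `clean_lines` and the `min_chars` threshold are assumptions chosen for illustration.

```python
import unicodedata


def clean_lines(lines, min_chars=10):
    """Yield normalized, de-duplicated lines that are at least min_chars long."""
    seen = set()
    for line in lines:
        # NFC normalization makes visually identical strings byte-identical
        text = unicodedata.normalize("NFC", line)
        # Collapse runs of whitespace and strip both ends
        text = " ".join(text.split())
        if len(text) < min_chars or text in seen:
            continue
        seen.add(text)
        yield text


raw = ["  hello   world, again  ", "hello world, again", "short", ""]
print(list(clean_lines(raw)))
```

Whether to filter at all, and how aggressively, depends on your corpus; the threshold here is arbitrary.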

Step 3: Initialize a Tokenizer

Hugging Face provides several tokenizer models. I will use the byte-level BPE tokenizer from the Hugging Face tokenizers library. This is the same kind of algorithm (byte-level Byte-Pair Encoding) that the GPT-3 and GPT-4 tokenizers use. You can still choose a different tokenizer model based on your needs.

from tokenizers import ByteLevelBPETokenizer

# Initialize a Byte-Pair Encoding(BPE) tokenizer

tokenizer = ByteLevelBPETokenizer()
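To give a feel for what BPE training does, here is a simplified character-level sketch of the merge-learning loop. It is an illustration only and not the library's implementation: the real tokenizer works on bytes, pre-tokenizes the text, and is far more efficient. The function name `learn_bpe` and the toy word list are made up for this example.

```python
from collections import Counter


def learn_bpe(words, num_merges):
    """Learn up to num_merges merge rules from a list of words (character level)."""
    # Each distinct word becomes a tuple of symbols, weighted by its frequency
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair across the corpus
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        # Rewrite the corpus, replacing each occurrence of the pair with one symbol
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges


print(learn_bpe(["hug", "hug", "hugs", "bug"], num_merges=2))
```

Each learned merge adds one entry to the vocabulary, which is why the vocabulary size you request effectively controls how many merges are learned.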

Step 4: Train the Tokenizer

Train the tokenizer on your dataset. Ensure the dataset is preprocessed and formatted correctly. You can specify various parameters such as vocab_size, min_frequency, and special_tokens.

# Prepare the dataset for training the tokenizer
def batch_iterator(texts, batch_size=1000):
    # Yield the texts in chunks of batch_size
    for i in range(0, len(texts), batch_size):
        yield texts[i : i + batch_size]

# Train the tokenizer on the "train" split (load_dataset returned a DatasetDict)
tokenizer.train_from_iterator(
    batch_iterator(dataset["train"]["text"]),
    vocab_size=30_000,
    min_frequency=2,
    special_tokens=["<s>", "<pad>", "</s>", "<unk>", "<mask>"],
)
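Before committing to a long training run, it can be useful to try the same calls on a tiny in-memory corpus. The sketch below assumes the tokenizers library is installed; the miniature corpus (phrases taken from the Urdu sample above, repeated) and the small `vocab_size` are chosen only to keep the example fast and are not meaningful settings.

```python
from tokenizers import ByteLevelBPETokenizer

# Hypothetical miniature corpus; a real run would use the full dataset
corpus = [
    "موضوعات کی ترتیب بلحاظ",
    "آخری پیغام کا وقت",
    "جوابات کی تعداد",
] * 50

special_tokens = ["<s>", "<pad>", "</s>", "<unk>", "<mask>"]

tokenizer = ByteLevelBPETokenizer()
tokenizer.train_from_iterator(
    corpus,
    vocab_size=400,       # tiny on purpose; the article uses 30_000
    min_frequency=2,
    special_tokens=special_tokens,
    show_progress=False,  # keep the output quiet for a quick check
)

sample = "آخری پیغام کا وقت"
encoding = tokenizer.encode(sample)
print(encoding.tokens)
print(encoding.ids)

# Byte-level BPE can represent any string, so decoding should recover the input
print(tokenizer.decode(encoding.ids) == sample)
```

The printed tokens use the byte-level alphabet, so they will not look like readable Urdu even though the round trip back to text works.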

Step 5: Save the Tokenizer

After training, save the tokenizer to disk. This allows you to load and use it later.

# Save the tokenizer
import os

# Create the directory if it does not exist
output_dir = "my_tokenizer"
os.makedirs(output_dir, exist_ok=True)

# Write the tokenizer files into that directory
tokenizer.save_model(output_dir)

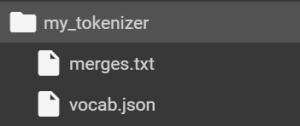

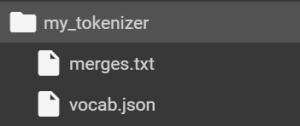

Replace my_tokenizer with the path where you want to save the tokenizer files. Running this code saves two files, namely vocab.json (the token-to-id mapping) and merges.txt (the learned merge rules).
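To show roughly how the saved merge rules are used at encoding time, here is a toy sketch that parses merges.txt-style content and applies the rules in the order they were learned. The merge rules below are invented for illustration, the function names are hypothetical, and a real merges.txt from a byte-level tokenizer holds byte-mapped symbols rather than plain letters.

```python
def parse_merges(merges_text):
    """Parse merges.txt-style content into an ordered list of symbol pairs."""
    pairs = []
    for line in merges_text.splitlines():
        if not line or line.startswith("#version"):  # skip blanks and the header
            continue
        left, right = line.split(" ")
        pairs.append((left, right))
    return pairs


def apply_merges(word, merges):
    """Repeatedly apply the earliest-learned merge rule that matches the word."""
    rank = {pair: i for i, pair in enumerate(merges)}
    symbols = list(word)
    while len(symbols) > 1:
        # Collect (rank, position) for every adjacent pair that has a rule
        candidates = [
            (rank[pair], i)
            for i, pair in enumerate(zip(symbols, symbols[1:]))
            if pair in rank
        ]
        if not candidates:
            break
        _, i = min(candidates)  # lowest rank first, then leftmost position
        symbols[i : i + 2] = [symbols[i] + symbols[i + 1]]
    return symbols


toy_merges = parse_merges("#version: 0.2\nu g\nh ug\n")
print(apply_merges("hugs", toy_merges))
print(apply_merges("bug", toy_merges))
```

The resulting symbols are then looked up in the vocabulary to get their ids.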

Step 6: Load and Use the Tokenizer

You can load the tokenizer for future use and tokenize texts in your target language.

from transformers import RobertaTokenizerFast

# Load the tokenizer from the directory it was saved to in Step 5

tokenizer = RobertaTokenizerFast.from_pretrained('my_tokenizer')

# Tokenize a sample text

text = "عرصہ ہوچکا وائرلیس چارجنگ اپنا وجود رکھتی ہے لیکن اسمارٹ فونز نے اسے اختیار کرنے میں کافی وقت لیا۔ یہ حقیقت ہے کہ جب پہلی بار وائرلیس چارجنگ آئی تھی تو کچھ مسائل تھے لیکن ٹیکنالوجی کے بہتر ہوتے ہوتے اب زیادہ تر مسائل ختم ہو چکے ہیں۔"

encoded_input = tokenizer(text)

print(encoded_input)

The output of this cell looks like this (shown truncated):

'input_ids': [0, 153, 122, 153, 114, 153, 118, 156, 228, 225, 156, 228, 154, 235, 155, 233, 155, 107, 153, 105, 225, 154, 235, 153, 105, 153, 104, 153, 114, 154, 231, 156, 239, 153, 116, 225, 155, 233, 153, 105, 153, 114, 153, 110, 154, 233, 155, 112, 225, 153, 105, 154, 127, 154, 233, 153, 105, 225, 154, 235, 153, 110, 154, 235, 153, 112, 225, 153, 114, 155, 107, 155, 127, 153, 108, 156, 239, 225, 156, 228, 156, 245, 225, 154, 231, 156, 239, 155, 107, 154, 233, 225, 153, 105, 153, 116, 154, 232, 153, 105, 153, 114, 154, 122, 225, 154, 228, 154, 235, 154, 233, 153, ], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ]
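The attention_mask is all ones here because a single text was encoded without padding. When several texts of different lengths are batched together, shorter ones are padded and the mask marks the padding positions with 0. The sketch below illustrates that idea in plain Python; it is not the library's code, and `pad_id=1` is an assumption based on "<pad>" being the second entry in the special-token list used during training.

```python
def pad_batch(sequences, pad_id=1):
    """Right-pad id sequences to equal length and build matching attention masks."""
    max_len = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        input_ids.append(list(seq) + [pad_id] * n_pad)
        # 1 marks a real token, 0 marks padding the model should ignore
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


batch = pad_batch([[0, 153, 122, 2], [0, 153, 2]])
print(batch["input_ids"])
print(batch["attention_mask"])
```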

Wrapping Up

To train your own tokenizer for a non-English language with Hugging Face, you prepare a dataset, initialize a tokenizer, train it, and finally save it for future use. By following these steps, you can create tokenizers tailored to specific languages or domains, which can improve the performance of the NLP task you are working on. You are now able to train a tokenizer on any language. Let us know what you created.

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.