Image by Editor | Midjourney

This tutorial demonstrates how to use Hugging Face’s Datasets library for loading datasets from different sources with just a few lines of code.

Hugging Face Datasets library simplifies the process of loading and processing datasets. It provides a unified interface for thousands of datasets on Hugging Face’s hub. The library also implements various performance metrics for transformer-based model evaluation.

Initial Setup

Certain Python development environments may require installing the Datasets library before importing it.

!pip install datasets

import datasets

Loading a Hugging Face Hub Dataset by Name

Hugging Face hosts a wealth of datasets in its hub. The following function outputs a list of these datasets by name:

from datasets import list_datasets

list_datasets()

Let’s load one of them, namely the emotions dataset for classifying emotions in tweets, by specifying its name:

data = load_dataset("jeffnyman/emotions")

If you wanted to load a dataset you came across while browsing Hugging Face’s website and are unsure what the right naming convention is, click on the “copy” icon beside the dataset name, as shown below:

The dataset is loaded into a DatasetDict object that contains three subsets or folds: train, validation, and test.

DatasetDict(

train: Dataset(

features: ['text', 'label'],

num_rows: 16000

)

validation: Dataset(

features: ['text', 'label'],

num_rows: 2000

)

test: Dataset(

features: ['text', 'label'],

num_rows: 2000

)

)

Each fold is in turn a Dataset object. Using dictionary operations, we can retrieve the training data fold:

train_data = all_data["train"]

The length of this Dataset object indicates the number of training instances (tweets).

Leading to this output:

Getting a single instance by index (e.g. the 4th one) is as easy as mimicking a list operation:

which returns a Python dictionary with the two attributes in the dataset acting as the keys: the input tweet text, and the label indicating the emotion it has been classified with.

'text': 'i am ever feeling nostalgic about the fireplace i will know that it is still on the property',

'label': 2

We can also get simultaneously several consecutive instances by slicing:

This operation returns a single dictionary as before, but now each key has associated a list of values instead of a single value.

'text': ['i didnt feel humiliated', ...],

'label': [0, ...]

Last, to access a single attribute value, we specify two indexes: one for its position and one for the attribute name or key:

Loading Your Own Data

If instead of resorting to Hugging Face datasets hub you want to use your own dataset, the Datasets library also allows you to, by using the same ‘load_dataset()’ function with two arguments: the file format of the dataset to be loaded (such as “csv”, “text”, or “json”) and the path or URL it is located in.

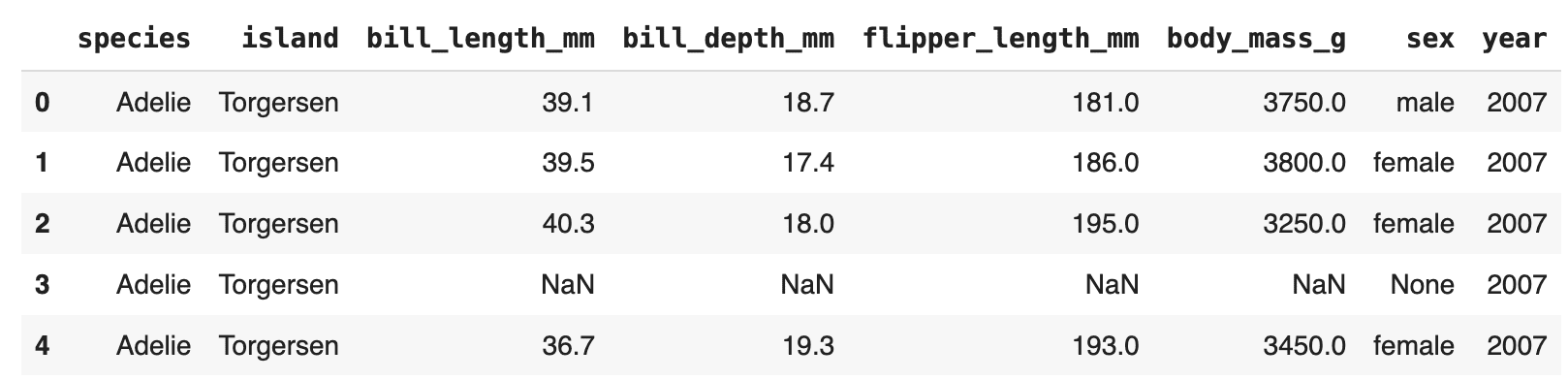

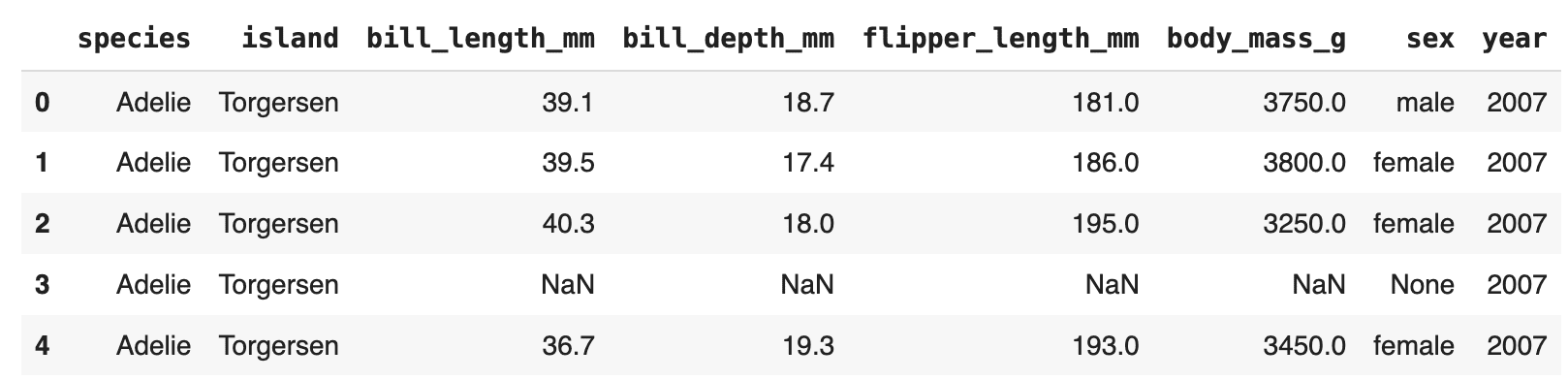

This example loads the Palmer Archipelago Penguins dataset from a public GitHub repository:

url = "https://raw.githubusercontent.com/allisonhorst/palmerpenguins/master/inst/extdata/penguins.csv"

dataset = load_dataset('csv', data_files=url)

Turn Dataset Into Pandas DataFrame

Last but not least, it is sometimes convenient to convert your loaded data into a Pandas DataFrame object, which facilitates data manipulation, analysis, and visualization with the extensive functionality of the Pandas library.

penguins = dataset["train"].to_pandas()

penguins.head()

Now that you have learned how to efficiently load datasets using Hugging Face’s dedicated library, the next step is to leverage them by using Large Language Models (LLMs).

Iván Palomares Carrascosa is a leader, writer, speaker, and adviser in AI, machine learning, deep learning & LLMs. He trains and guides others in harnessing AI in the real world.