Image by Editor | Ideogram

One of the most frequently mentioned AI buzzwords in recent years has been language models, often referred to by the acronym LLMs for large language models, referring to advanced AI systems designed to understand and generate human-like text based on vast datasets. If you arrived here, then you’re probably looking for a concise yet engaging read to be introduced to — or refresher on — language models: what they are, what they can do, and how they work in a nutshell. If so, you are at the right place, so keep reading.

What are Language Models?

A language model is a system that processes, understands, and generates human language. Unlike more “conventional” natural language processing (NLP) models, most of which are designed to solve a particular task often limited to language understanding — such as sentiment analysis or named entity recognition — you should think of a language model as a more advanced form of NLP system trained for acquiring a broader range of “language skills”, including precise coherent generation of responses.

On the other hand, LLMs are — as their name suggests — language models trained on vast datasets (millions to billions of text documents they learn from), and have a substantial architecture: their essence is the same, they differ in their magnitude and the extent of their capabilities.

For simplicity, we will interchangeably use the terms LLM and language model to refer to the same general concept in the remainder of this article.

Tasks LLMs can be broadly categorized into two types: language generation and language understanding. The former tasks focus on creating new text based on prompts (queries formulated by the user and taken as model inputs), while the latter aims at interpreting and extracting meaning from the input text. A “self-sufficient” language model should be able to jointly learn both skills.

Overview of language understanding and generation tasks commonly undertaken by language models

The above diagram classifies a variety of language tasks based on the most intensively required skill (understanding vs. generation). But in practice, most tasks will require a mix of both skills: for instance, translating text requires a deep understanding of the text in a source language before generating the output translation in a target language.

How Do They Work? A Simplified Approach

The transformer architecture is behind most language models and is known for efficiently processing large amounts of text data by parallelizing their processing. The most conventional transformer architecture (depicted below) has the following elements:

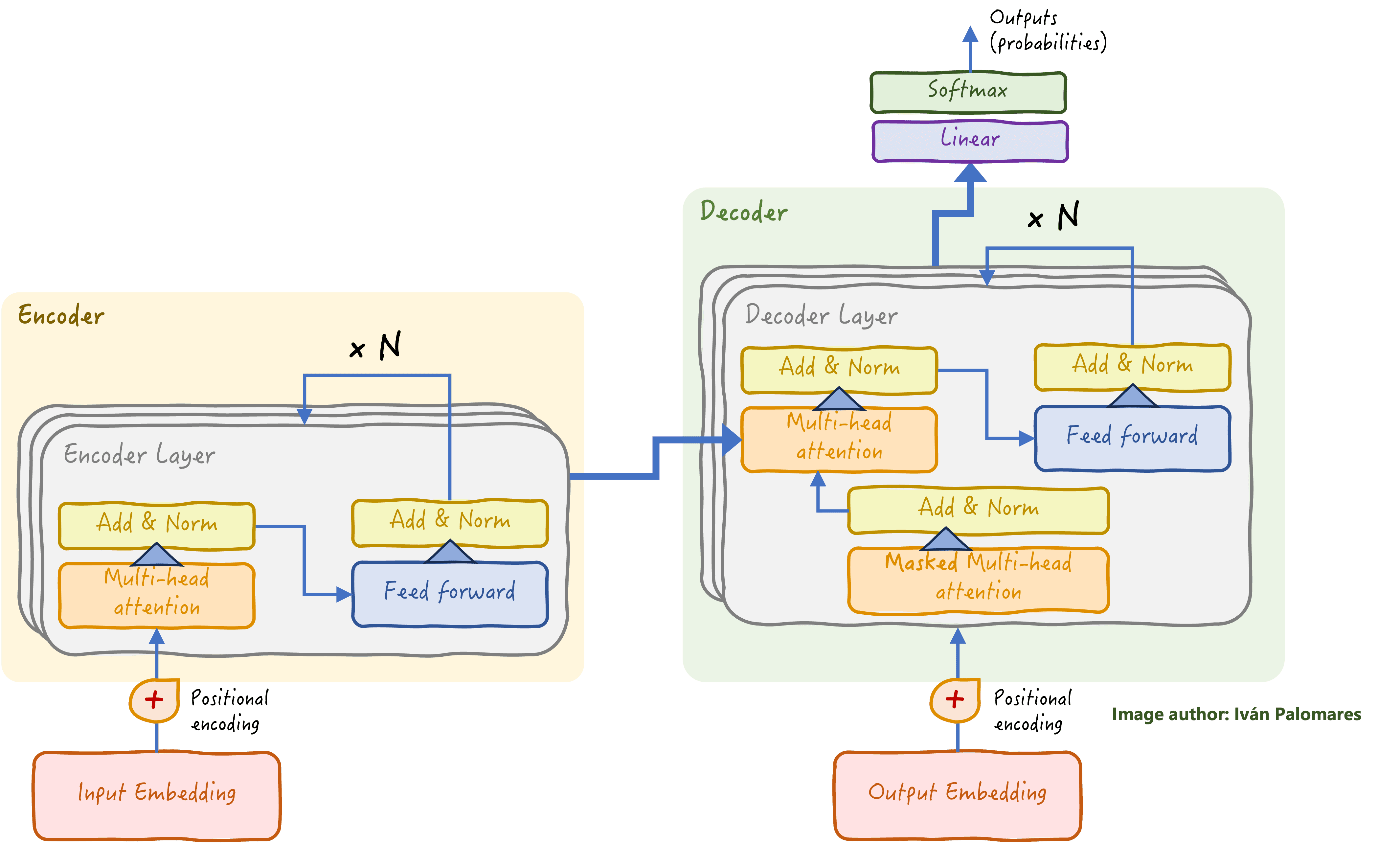

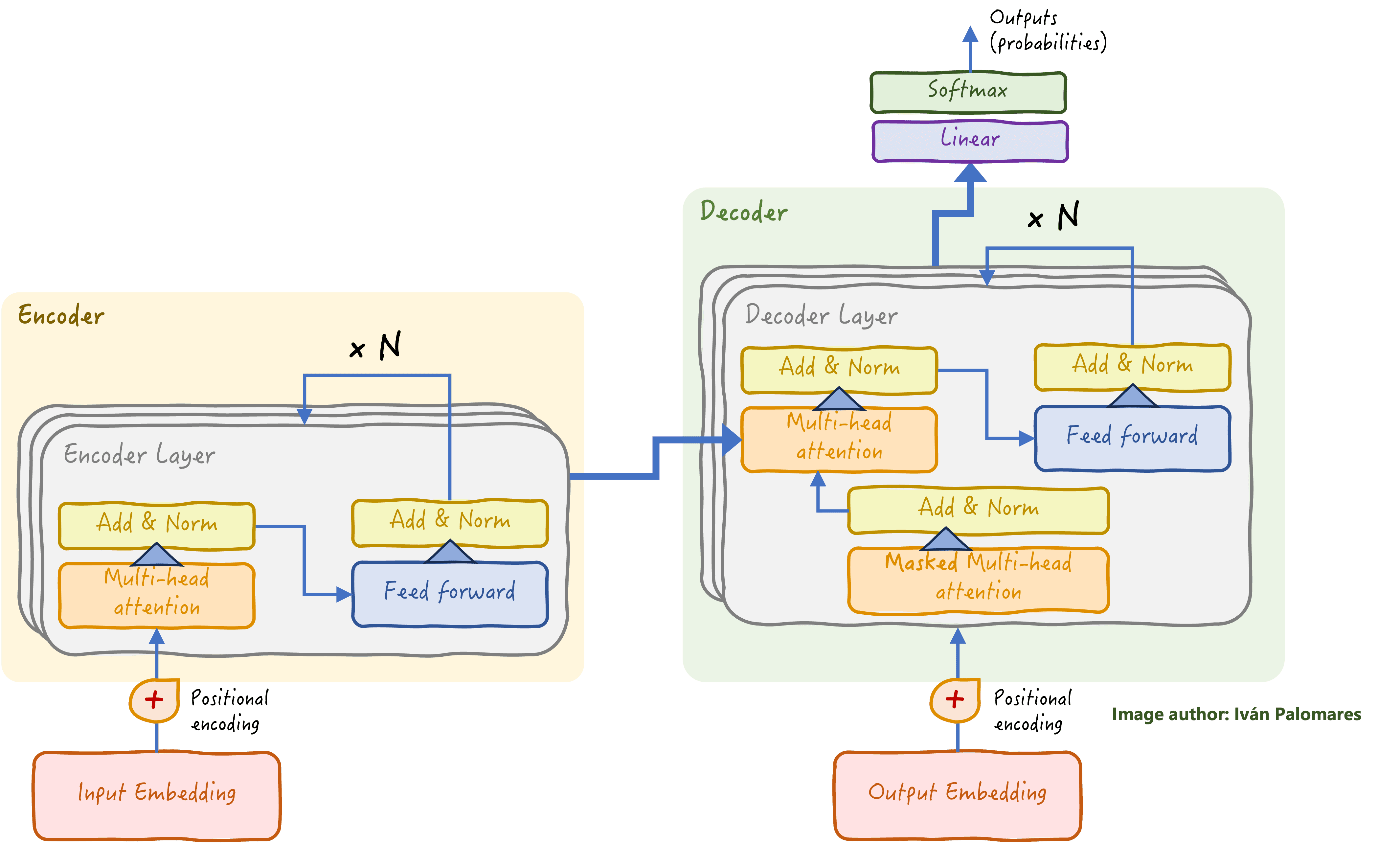

Transformer architecture

- It is divided into an encoder stack and a decoder stack. The encoder is responsible for understanding and extracting patterns from the input data, while the decoder generates text responses based on the encoded information.

- Besides lots of interconnected neural network components, a crucial component of the transformer is its self-attention mechanism, responsible for identifying relationships and long-range dependencies between words in a text, regardless of their position in the text. Much of the success behind language models is owed to this innovative mechanism.

- Language models do not understand human language. They are computer systems after all, and computers only understand numbers. So, how are they so good at performing complex language tasks? They use embeddings to convert words into numerical representations that capture their meaning and context.

- An encoder-decoder transformer architecture ultimately outputs a sequence of words that are generated one by one. Each generated word is the result of a problem called next-word prediction, in which probabilities of all possible words being the next one are calculated, thereby returning the word with the highest probability: these computations take place in the so-called softmax layer at the very end.

Leveraging Language Models in the Real World

To finalize this short overview, here are a few notes to better understand how language models can be deployed and harnessed into the wild, with special mention of some frameworks and tools that help make it possible.

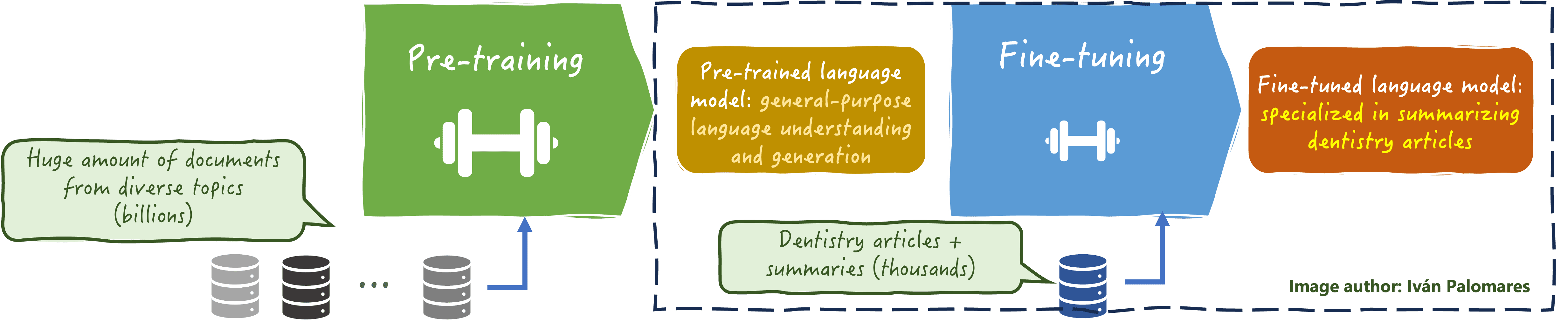

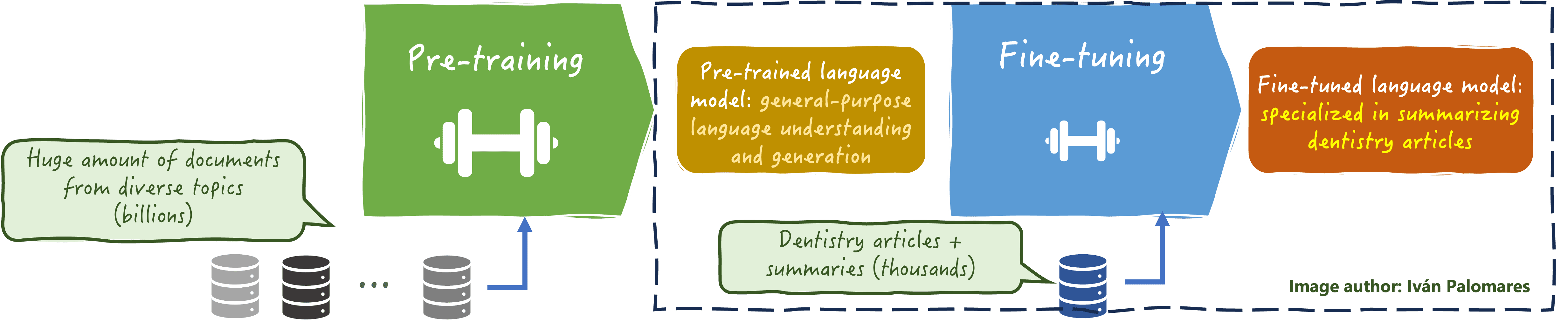

- There are two approaches to train (build) a language model: pre-training and fine-tuning. Pre-training is like building the model from scratch, passing in large datasets to help it acquire general language knowledge. Meanwhile, fine-tuning only requires a smaller, specialized dataset to adapt the model to specific tasks or domains. Platforms like Hugging Face provide a collection of pre-trained language models that can be downloaded and fine-tuned on your data to have them specialize in a particular problem and domain.

Fine-tuning a pre-trained language model for learning to summarize dentistry papers

- Whether pre-trained from scratch or just fine-tuned, deploying an LLM requires careful management to ensure it performs well in real-world scenarios. LLMOps (LLM Operations) provides a framework for scaling, monitoring, and maintaining deployed LLMs, facilitating their integration across systems and workflows.

- Building language model applications today is easier thanks to powerful tools and frameworks like Langchain, which simplifies the development of LLM-based applications, and LlamaIndex, which offers Retrieval-Augmented Generation (RAG) capabilities to enhance the performance of language models by integrating external data sources like document databases to provide more truthful and accurate responses.

Iván Palomares Carrascosa is a leader, writer, speaker, and adviser in AI, machine learning, deep learning & LLMs. He trains and guides others in harnessing AI in the real world.