Imagine you have already integrated the OpenAI API into your system, but now you want to expand your capabilities by using Google Gemini and Anthropic APIs as well. Will you create and manage separate accounts for each provider, juggle multiple SDKs, and make your code even more complex? Or would you prefer a unified solution that allows you to access any large language model (LLM) from any provider with just a single line of code?

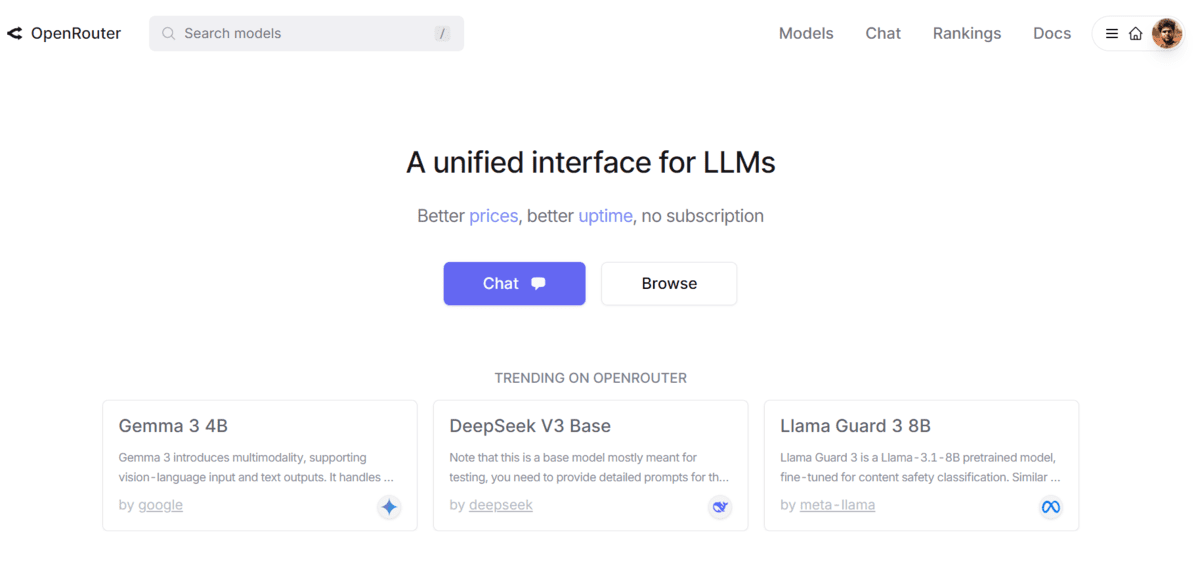

In this tutorial, we will explore OpenRouter, a platform designed to simplify the lives of developers by providing a unified interface for accessing multiple AI models. You will learn how to set up OpenRouter, access models using Python’s requests library and the OpenAI client, and discover how OpenRouter can streamline your workflow.

Introducing OpenRouter

OpenRouter is a unified API platform that provides developers with access to a wide array of LLMs from leading AI providers such as OpenAI, Anthropic, Google, Meta, Mistral, and others. It offers a single, standardized interface for integrating these models into applications, which simplifies working with multiple AI services. OpenRouter automatically falls back to other providers when one is unavailable, which improves reliability, and it can route requests to the most cost-effective option, saving both time and money. With just a few lines of code, developers can get started using their preferred SDK or framework, making it highly accessible and easy to implement.
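OpenRouter performs fallback on its own servers, so you do not have to write it yourself. To make the idea concrete, here is a minimal client-side sketch of the same pattern, which you could also use to chain models you pick explicitly. The send function and the model IDs in the usage lines are hypothetical placeholders and are not part of OpenRouter's API.

```python
from typing import Callable, Sequence, Tuple


def complete_with_fallback(
    send: Callable[[str], str],
    model_ids: Sequence[str],
) -> Tuple[str, str]:
    """Try each model ID in order; return (model_id, reply) for the first that succeeds.

    `send` is a hypothetical placeholder: it takes a model ID, performs the request,
    returns the reply text, and raises an exception on failure.
    """
    errors = []
    for model_id in model_ids:
        try:
            return model_id, send(model_id)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{model_id}: {exc}")
    raise RuntimeError("All models failed:\n" + "\n".join(errors))


# Usage with a fake sender that simulates an outage of the first model
def fake_send(model_id: str) -> str:
    if model_id.startswith("primary/"):
        raise TimeoutError("provider unavailable")
    return f"reply from {model_id}"


print(complete_with_fallback(fake_send, ["primary/model-a", "backup/model-b"]))
# ('backup/model-b', 'reply from backup/model-b')
```

Each failure is recorded, so if every model fails, the final error lists them all.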

Setting up OpenRouter

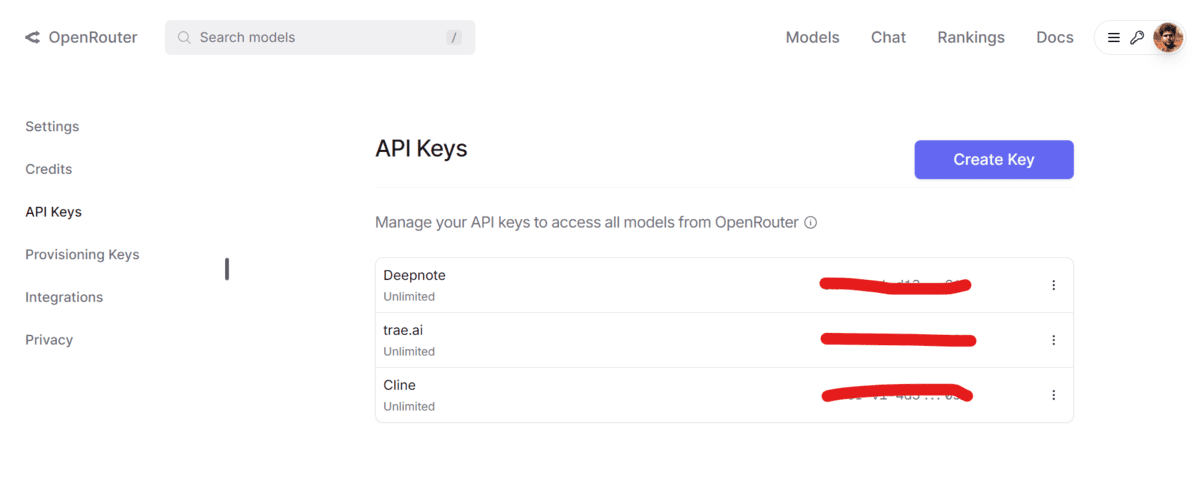

Visit the OpenRouter website and sign up for an account. Once your account is created, navigate to the API section to generate an API key.

For security and ease of use, save your API key as an environment variable on your system. This allows you to access it programmatically without hardcoding sensitive information into your scripts.
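The helper below is a small sketch that reads the key from an environment variable and fails with a readable message if the variable is missing. The variable name OPENROUTER matches the one used in the code examples in this tutorial. The function name load_api_key is our own. On macOS or Linux, you might set the variable with export OPENROUTER=<your key> before starting Python or Jupyter.

```python
import os


def load_api_key(var_name: str = "OPENROUTER") -> str:
    """Read the OpenRouter API key from an environment variable.

    "OPENROUTER" matches the name used in this tutorial; pass whatever name you exported.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail loudly here rather than sending an unauthenticated request later
        raise RuntimeError(
            f"Environment variable {var_name!r} is not set. "
            f"Export it first, e.g. export {var_name}=<your key>"
        )
    return key
```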

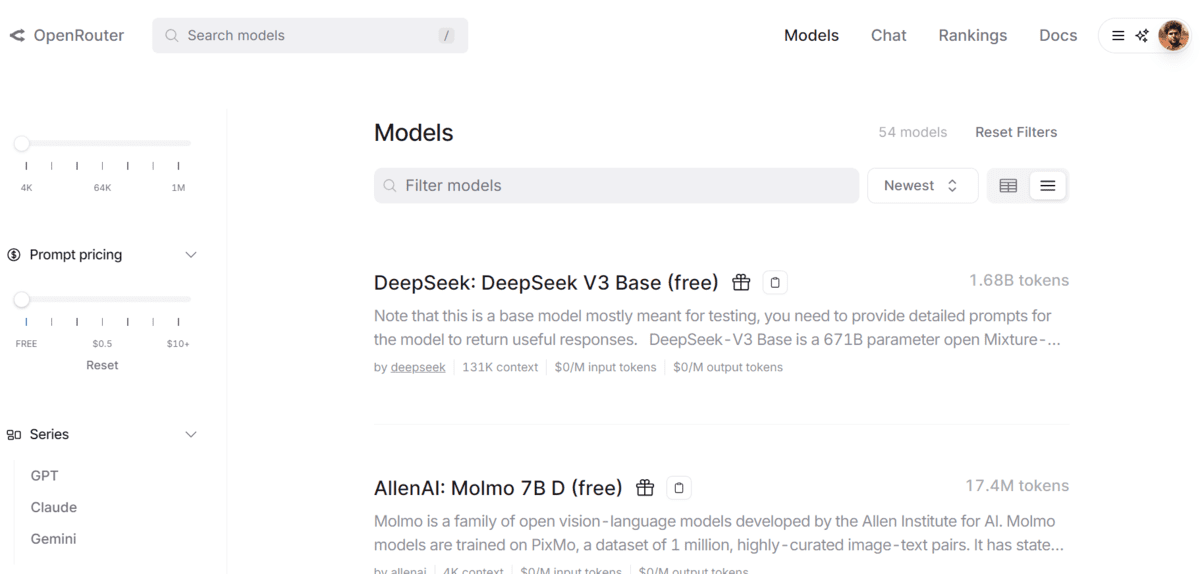

OpenRouter acts as a marketplace for various AI models, allowing you to browse and select from a wide range of options. You can search for models, copy the provided API code, and test them directly in your terminal or application.
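You can also search the catalogue from code. This sketch assumes that OpenRouter's public model-list endpoint, https://openrouter.ai/api/v1/models, returns JSON shaped like {"data": [{"id": ...}, ...]}. That matches its documentation at the time of writing, but check it against the current API reference. The sketch uses only the standard library, and the helper names are illustrative.

```python
import json
import urllib.request

MODELS_URL = "https://openrouter.ai/api/v1/models"


def fetch_models(timeout: float = 30.0) -> list:
    """Download the model catalogue; assumes a JSON body of the form {"data": [...]}."""
    with urllib.request.urlopen(MODELS_URL, timeout=timeout) as resp:
        return json.load(resp)["data"]


def search_models(models: list, keyword: str, free_only: bool = False) -> list:
    """Return sorted model IDs containing `keyword` (case-insensitive).

    With free_only=True, keep only IDs ending in ":free", the suffix used by the
    free model variants in this tutorial.
    """
    keyword = keyword.lower()
    ids = [m["id"] for m in models if keyword in m["id"].lower()]
    if free_only:
        ids = [model_id for model_id in ids if model_id.endswith(":free")]
    return sorted(ids)


# Usage (makes a network request):
# print(search_models(fetch_models(), "qwen", free_only=True))
```

Keeping the download separate from the filtering lets you cache the catalogue once and search it offline.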

Using OpenRouter

You can interact with OpenRouter using Python, the OpenAI client, TypeScript, or even cURL commands. In this section, we will focus on using the Python requests library and the OpenAI client to access OpenRouter’s API and demonstrate how it simplifies the integration process.

Access OpenRouter using Requests

The Python requests library is a simple and effective way to interact with OpenRouter's API. Below is an example that sends a request combining text and image input to the qwen2.5-vl-72b-instruct model through the OpenRouter server.

from IPython.display import display, Markdown
import requests
import json
import os

# Read the API key from the environment instead of hardcoding it
API_KEY = os.environ["OPENROUTER"]

response = requests.post(
    url="https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    },
    data=json.dumps({
        "model": "qwen/qwen2.5-vl-72b-instruct:free",
        "messages": [
            {
                "role": "user",
                # Multimodal content: a text part followed by an image URL part
                "content": [
                    {
                        "type": "text",
                        "text": "Explain the image."
                    },
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": "https://www.familyhandyman.com/wp-content/uploads/2018/02/handcrafted-log-home.jpg"
                        }
                    }
                ]
            }
        ],
    })
)

# Stop with a clear HTTP error (e.g. bad key, rate limit) instead of a KeyError below
response.raise_for_status()

# Parse the JSON response
result = response.json()

# Extract the model's response text and render it as Markdown (in Jupyter)
output = result["choices"][0]["message"]["content"]
display(Markdown(output))

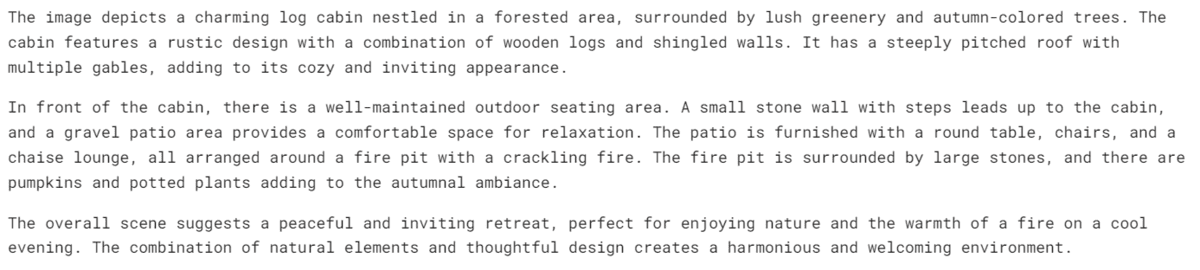

We got a fast and accurate response.
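Indexing result["choices"] directly works when the call succeeds. If the body contains an error object instead of choices, however, it raises an unhelpful KeyError. Here is a minimal sketch of a safer extractor. It assumes error bodies look like {"error": {"code": ..., "message": ...}}, which is the shape OpenRouter documents; the name extract_reply is hypothetical.

```python
def extract_reply(result: dict) -> str:
    """Return the assistant text from a chat-completions JSON body, or raise a readable error.

    Assumes OpenAI-style success bodies ({"choices": [{"message": {"content": ...}}]})
    and error bodies of the form {"error": {"code": ..., "message": ...}}.
    """
    if "error" in result:
        err = result["error"]
        message = err.get("message", err) if isinstance(err, dict) else err
        raise RuntimeError(f"OpenRouter returned an error: {message}")
    choices = result.get("choices") or []
    if not choices:
        raise RuntimeError(f"No choices in response: {result}")
    return choices[0]["message"]["content"]
```

In the requests example, you would then write output = extract_reply(response.json()) in place of the direct indexing.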

Access OpenRouter using OpenAI Client

If you are already familiar with the OpenAI client, you can use it to access the OpenRouter API. First, install the OpenAI client if you haven't already:

pip install openai

Then, use the following code to send a request to the OpenRouter server. This time we are using the deepseek-chat-v3-0324 model.

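import os
from IPython.display import display, Markdown
from openai import OpenAI

# Point the OpenAI client at OpenRouter's OpenAI-compatible endpoint
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key=os.environ["OPENROUTER"],
)

completion = client.chat.completions.create(
    model="deepseek/deepseek-chat-v3-0324:free",
    messages=[
        {
            "role": "user",
            "content": "Create beautiful ASCII art."
        }
    ]
)

# Extract the reply text and render it as Markdown (in Jupyter)
output = completion.choices[0].message.content
display(Markdown(output))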

We accessed a different model with the same base URL and API key, so switching to any other model on OpenRouter only requires changing the model name.
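Because only the model argument changes, comparing models takes only a short loop. This sketch accepts any client that exposes client.chat.completions.create, such as the OpenAI client configured with OpenRouter's base URL in the example above. The name ask_many is a hypothetical helper, and the model IDs in the commented usage are the two used in this tutorial.

```python
from typing import Any, Dict, List


def ask_many(client: Any, models: List[str], prompt: str) -> Dict[str, str]:
    """Send the same prompt to several models through one OpenAI-compatible client."""
    replies: Dict[str, str] = {}
    for model in models:
        # Same endpoint, same key: only the model ID differs between calls
        completion = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        replies[model] = completion.choices[0].message.content
    return replies


# Usage (makes network requests; `client` is the OpenAI client from the example above):
# answers = ask_many(
#     client,
#     ["deepseek/deepseek-chat-v3-0324:free", "qwen/qwen2.5-vl-72b-instruct:free"],
#     "Summarize what OpenRouter does in one sentence.",
# )
```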

OpenRouter Key Features

Here are the key features of OpenRouter at a glance:

1. Unified API for Multiple Models

- Access models from OpenAI, Anthropic, Mistral, Meta, Google, and more — all through a single API endpoint.

- No need to juggle multiple keys or provider-specific SDKs.

2. Model Routing & Failover

- Automatically route requests to available models.

- Supports fallbacks and load-balancing between providers.

- Great for uptime-critical applications.

3. OpenAI-Compatible SDK

- Drop-in replacement: easily switch your existing OpenAI-based codebase to OpenRouter with minimal changes.

- Works with popular Python clients like openai.

4. Transparent, Pay-As-You-Go Pricing

- No markup on inference costs — pass-through pricing from model providers.

- View and compare token pricing across models in one place.

5. Secure API Keys & Rate Control

- Generate API keys with specific permissions, usage limits, or expiration dates.

- Monitor usage via the dashboard.

6. Analytics & Monitoring

- Dashboard gives you insight into usage, latency, and model performance.

- Great for debugging and optimization.

7. Model Customization

- Route traffic by model ID or configure default models.

- Supports custom prompts, templates, and headers.
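To turn the pricing point into numbers, here is a minimal cost estimator. It assumes per-token USD prices given as strings, like the pricing object in OpenRouter's model list. It also assumes OpenAI-style token counts (prompt_tokens, completion_tokens), such as a response's usage object. The prices in the usage lines are made up for illustration and are not real OpenRouter rates.

```python
from decimal import Decimal


def estimate_cost(pricing: dict, usage: dict) -> Decimal:
    """Estimate the USD cost of one request from per-token prices and token counts.

    Assumes `pricing` maps "prompt"/"completion" to per-token USD prices as strings
    and `usage` holds OpenAI-style "prompt_tokens"/"completion_tokens" counts.
    """
    prompt_price = Decimal(pricing.get("prompt", "0"))
    completion_price = Decimal(pricing.get("completion", "0"))
    return (
        prompt_price * usage.get("prompt_tokens", 0)
        + completion_price * usage.get("completion_tokens", 0)
    )


# Usage with made-up prices (not real OpenRouter rates)
example_pricing = {"prompt": "0.000001", "completion": "0.000002"}
example_usage = {"prompt_tokens": 1200, "completion_tokens": 300}
print(estimate_cost(example_pricing, example_usage))  # 0.001800
```

Decimal is used instead of float so that very small per-token prices do not pick up binary rounding errors when they are multiplied and summed.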

Final Thoughts

I am genuinely impressed by how simple the setup, payment process, and interface of OpenRouter are, combined with its powerful functionality. It fills a vital gap in the AI ecosystem by acting as a unified marketplace that allows developers to access top-tier models without the hassle of switching APIs or rewriting their code. With just a few adjustments, you can tap into a diverse range of LLMs, optimize both cost and performance, and scale your AI integration more efficiently than ever.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.