Image by Editor | ChatGPT

Introduction

The evolution of large language models (LLMs) into LLM agents is a profound shift in the development of artificial intelligence (AI) applications in 2025. In this article, we explore this evolution — examining how native LLMs developed into sophisticated LLM agents, and the key technological breakthroughs that enabled this transformation.

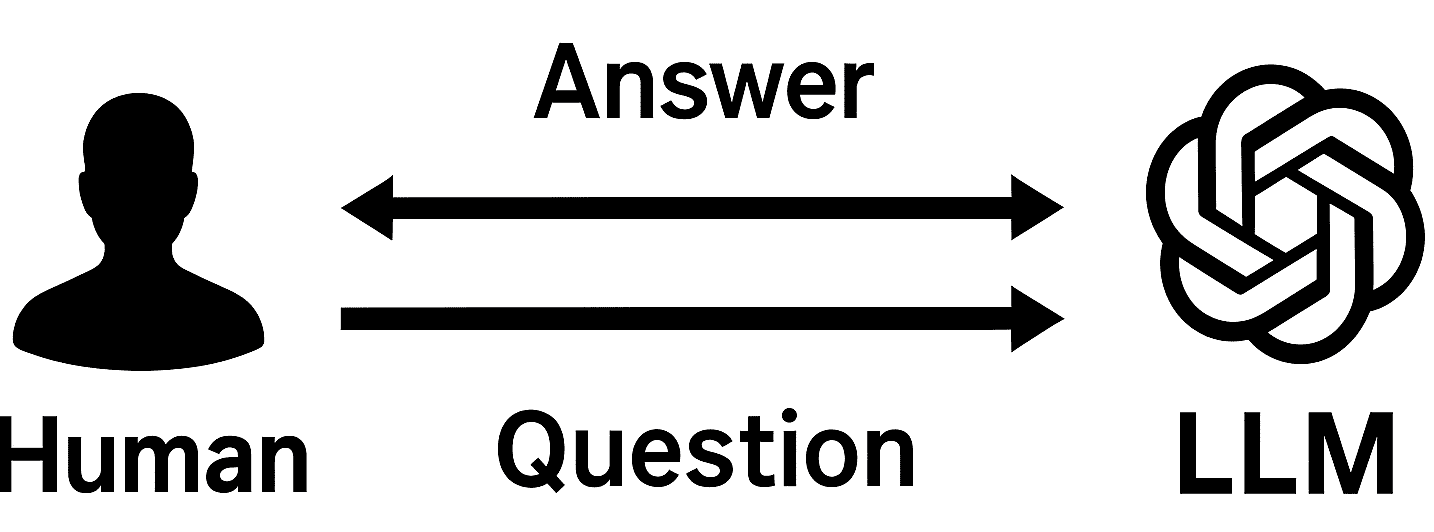

Horizon I: Out-of-the-Box LLMs

Timeframe: 2018 onwards

Native LLMs were typically treated as “black boxes” that processed user input text and generated output text in a single call. The earliest native LLMs (like GPT-1), despite their impressive abilities in understanding and generating text, lacked a key element: access to knowledge beyond their training cutoff. Native LLMs could only provide answers based on what they had learned during training/pretraining up to a fixed knowledge cutoff (also called parametric knowledge).

This static nature limited them to the world knowledge captured at training time and left them unable to incorporate new facts or evolving information unless retrained. Because training data quickly becomes outdated, native LLMs struggled with temporally recent or out-of-domain queries, often producing inaccurate or hallucinated outputs. Despite being a significant milestone in AI, these models remained bound by their static knowledge and had no grounding in real-time data.
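As a rough illustration of the single-call, fixed-knowledge behavior described above, the toy class below stands in for a native LLM. It is only a sketch: `NativeLLM`, `FALLBACK`, and the lookup-table "knowledge" are hypothetical names invented for this example, and a real model stores knowledge in its weights rather than in a dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical marker for "the model has no stored answer and would have to guess".
FALLBACK = "<unsupported guess, possibly hallucinated>"


@dataclass(frozen=True)
class NativeLLM:
    """Toy stand-in for a native LLM whose knowledge is fixed at training time."""

    knowledge_cutoff_year: int
    knowledge: dict = field(default_factory=dict)  # illustrative lookup table only

    def generate(self, prompt: str) -> str:
        # Single call: text in, text out. No retrieval, no tools, no memory.
        return self.knowledge.get(prompt.strip().lower(), FALLBACK)


model = NativeLLM(2018, {"capital of france?": "Paris"})
print(model.generate("Capital of France?"))           # answered from stored knowledge
print(model.generate("Who won yesterday's match?"))   # nothing stored, so the fallback
```

The only way to change what this toy model "knows" is to build a new instance, which mirrors the retraining requirement mentioned above.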

Native LLMs

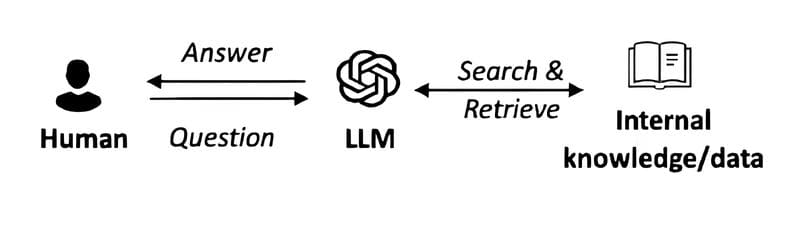

Horizon II: LLMs Leveraging RAG

Timeframe: 2020 onwards

To address the limitations of static knowledge in native LLMs, the concept of retrieval-augmented generation (RAG) was introduced in a seminal 2020 paper (Lewis et al.). This architecture marked a turning point by combining external information retrieval with LLM-based text generation.

Though introduced in 2020, RAG drew widespread public attention in early 2023, shortly after the release of ChatGPT, once the limitations of a standalone LLM became apparent. RAG-enhanced LLMs soon became the industry standard for enterprise applications like chatbots, search assistants, and Q&A systems. These systems allowed the model to query external knowledge bases, vector databases, or even live web indexes during inference. This approach meant that LLMs were no longer bound by their training data: as long as the retrieval index contained up-to-date documents, the model could provide timely and grounded responses to previously unanswerable queries.
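The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the function names (`retrieve`, `build_prompt`, `rag_answer`) are hypothetical, retrieval uses a simple bag-of-words cosine similarity in place of a vector database, and `llm` is any callable that maps a prompt string to a response string.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def rag_answer(query, documents, llm, k=2):
    """Retrieve supporting passages, then make a single LLM call with them."""
    return llm(build_prompt(query, retrieve(query, documents, k)))


# Illustrative documents; in practice these would come from a maintained index.
docs = [
    "The refund policy was updated in March to allow returns within 60 days.",
    "Our office is closed on public holidays.",
    "Support tickets are answered within one business day.",
]
echo_llm = lambda prompt: prompt  # stand-in for a real model: returns its prompt
print(rag_answer("What is the refund policy for returns?", docs, echo_llm, k=1))
```

Updating the answer only requires updating `docs`; the model itself is untouched, which is the property that made RAG attractive for fast-changing enterprise data.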

However, even RAG-enabled LLMs remained passive systems — that is, while they could retrieve relevant information and generate coherent responses, they could not take action autonomously. They could not plan, make decisions, or interact with APIs or tools without explicit human input. Their role was confined to information synthesis, which constrained their effectiveness in interactive, multi-step, or action-driven tasks.

RAG-enhanced LLMs

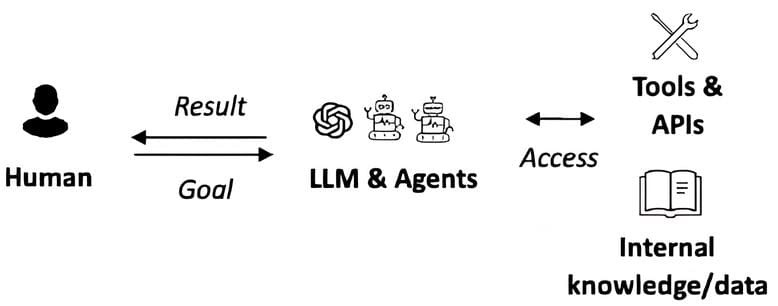

Horizon III: LLM Agents

Timeframe: 2025 onwards

First, let us define the term LLM agent:

“LLM agent” refers to an AI system that uses a large language model as its core reasoning engine, but with additional modules that allow it to perceive its environment, plan actions, and execute tasks autonomously.

The emergence of LLM agents represents a dramatic shift from passive response generation to goal-driven, autonomous problem-solving and execution. These agents are equipped with long-term memory, multi-step planning, and tool usage, and often operate in real-time interaction loops. Unlike RAG LLMs, which merely responded with grounded information, LLM agents can take actions: make API calls, book meetings, browse the web, or manipulate files and databases.
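A bare-bones version of such an interaction loop might look like the sketch below. Everything here is an assumption made for illustration: `run_agent`, the tool functions, and the decision format (`{"tool": ...}` or `{"final": ...}`) are hypothetical, and `scripted_policy` is a hard-coded stand-in for the LLM that would normally choose the next action.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Dict[str, Any], Any]  # (tool name, arguments, observation)


def run_agent(goal: str, policy: Callable, tools: Dict[str, Callable], max_steps: int = 5):
    """Ask the policy for the next action until it returns a final answer."""
    history: List[Step] = []
    for _ in range(max_steps):
        decision = policy(goal, history)
        if "final" in decision:
            return decision["final"], history
        name, args = decision["tool"], decision.get("args", {})
        if name in tools:
            observation = tools[name](**args)  # act on the environment
        else:
            observation = f"error: unknown tool {name!r}"
        history.append((name, args, observation))  # short-term memory of the run
    return "stopped: step budget exhausted", history


# Hypothetical tools; real ones would call a calendar API.
def find_free_slot(day):
    return {"monday": "10:00", "tuesday": "14:00"}.get(day.lower(), "none")


def book_meeting(day, time):
    return f"booked {day} {time}"


def scripted_policy(goal, history):
    """Stand-in for the LLM: look up a slot, book it, then report."""
    if not history:
        return {"tool": "find_free_slot", "args": {"day": "Tuesday"}}
    if len(history) == 1:
        return {"tool": "book_meeting", "args": {"day": "Tuesday", "time": history[0][2]}}
    return {"final": f"Done: {history[-1][2]}"}


TOOLS = {"find_free_slot": find_free_slot, "book_meeting": book_meeting}
answer, trace = run_agent("Book a meeting on Tuesday", scripted_policy, TOOLS)
print(answer)
```

The `max_steps` budget is a deliberate design choice in this sketch: an autonomous loop needs some stopping condition other than the model deciding it is finished.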

Moreover, LLM agents usually have continuous self-feedback loops. They can critique their own outputs, revise actions, and optimize behavior based on context—enabling higher response quality, decision reliability, and adaptability.
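One way to picture a self-feedback loop is as a generate, critique, revise cycle. The sketch below assumes two hypothetical callables, `generate` and `critique`, which in a real agent would both be LLM calls; here they are simple stubs so the control flow can be run as written.

```python
def refine(task, generate, critique, max_rounds=3):
    """Draft an output, then revise it until the critic has no more feedback."""
    draft = generate(task, None, None)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if feedback is None:  # the critic is satisfied
            break
        draft = generate(task, draft, feedback)
    return draft


# Illustrative stubs built from the application areas listed in this article.
APPLICATIONS = ["workflow automation", "customer service", "market research"]


def toy_generate(task, previous, feedback):
    """First draft is incomplete; each revision adds one more item."""
    if previous is None:
        return APPLICATIONS[:1]
    return APPLICATIONS[: len(previous) + 1]


def toy_critique(task, draft):
    """Return feedback while fewer than three items are listed, else None."""
    missing = 3 - len(draft)
    return f"add {missing} more item(s)" if missing > 0 else None


print(refine("List three agent applications", toy_generate, toy_critique))
```

Capping the number of rounds keeps the loop from running indefinitely when the critic is never satisfied, at the cost of sometimes returning an imperfect draft.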

Some real-world applications of LLM agents include:

- Automating entire workflows in enterprises

- Acting as intelligent customer service representatives

- Conducting market research and drafting multi-step reports autonomously

LLM Agents

The Future of LLM Agents

The era of LLM agents has just begun. As 2025 progresses, increasingly mature agentic system architectures are expected, progressing from single-agent to multi-agent designs.

The future of LLM agents is also expected to be shaped by advancements in multimodality. Some interesting real-world applications include AI tutors that can give spoken explanations, healthcare agents that can analyze reports and patient history, and code generation agents that can debug code and assist programmers interactively.

The end goal? Fully autonomous, intelligent agents that can collaborate, reason, and execute tasks across industries, bringing us closer to the vision of true artificial general intelligence (AGI).

Lavanya Gupta is a Sr. Applied AI/ML Scientist at JP Morgan Chase and an MS recipient from Carnegie Mellon’s Language Technologies Institute.