Transformers and large language models (LLMs) are certainly enjoying their moment. We are all discussing them, testing them, outsourcing our tasks to them, fine-tuning them, and relying on them more and more. The AI industry, for lack of better term, in welcoming more and more practitioners by the day. We all need to consider learning more about these topics, whether or not wee are newcomers to the concepts.

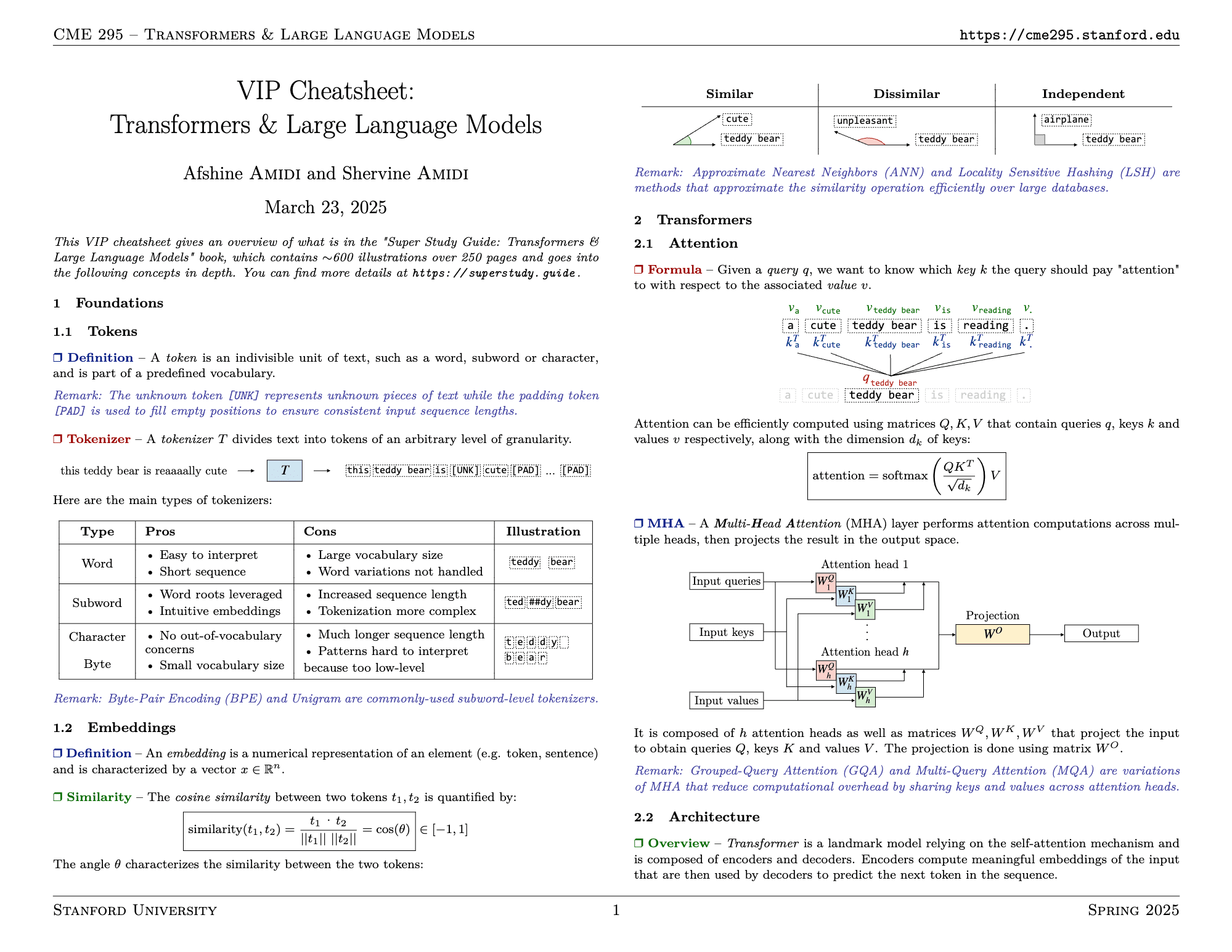

There are many courses and learning materials to facilitate this, but the Transformers & Large Language Models VIP cheatsheet created by Afshine and Shervine Amidi for Stanford’s CME 295 is a useful resource for a concise overview if that’s your style of learning. The Amidi brothers are highly-regarded researchers, authors, and educators in the area, and aside from teaching Stanford’s CME 295: Transformers & Large Language Models course, they have also used the text used in it. The cheatsheet actually provides an overview of what’s in their book Super Study Guide: Transformers & Large Language Models book, which contains ~600 illustrations over 250 pages which dive much deeper into the material.

This article introduces this cheatsheet in order to help you decide if it’s a worthwhile resource for you to use in your language model understanding pursuit.

Cheatsheet Overview

The cheatsheet provides a guide and concise yet in-depth explanation of transformers and LLM in four sections: foundations, transformers, LLM, and applications.

VIP Cheatsheet: Transformers & Large Language Models, by Afshine and Shervine Amidi

Let’s first have a look at the foundations, as they come first in the cheatsheet.

Foundations

The foundations section introduces two main concepts: tokens and embeddings.

Tokens are the smallest units of text used by language models. Depending on the unit we select, tokens can include words, subwords, or bytes, which are processed using a tokenizer. Each tokenizer has its pros and cons that we must also remember.

Embeddings are when the token is transformed into numerical vectors that capture semantic meaning. The similarity between tokens is measured using cosine similarity. Approximate methods like approximate nearest neighbors (ANN) & locality sensitive hashing (LSH) scale similarity searches in large embedding spaces.

Transformers

Transformers are the core of many modern LLMs. They use a self-attention mechanism that allows each token to pay attention to the other in the sequence. The cheat sheet also introduces many concepts, including:

- The multi-head attention (MHA) mechanism performs parallel attention calculations to generate outputs representing diverse text relationships.

- Transformers consist of stacks of encoders and/or decoders, which use position embeddings to understand word order.

- Several architectural variants are present, such as encoder-only models like BERT, which are good for classification; decoder-only models like GPT, which focus on text generation; and encoder-decoder models like T5, which excel in translation tasks.

- Optimizations such as flash attention, sparse attention, and low-rank approximations make the models more efficient.

Large Language Models

The LLM section discusses the model lifecycle, which consists of pre-training, supervised fine-tuning, and preference tuning. There are many additional concepts discussed as well, including:

- Prompting concepts such as context length and output can be controlled via temperature. It also discusses chain-of-thought (CoT) and tree-of-thought (ToT) techniques, which allow models to generate reasoning steps and solve complex tasks more effectively.

- Fine-tuning has many approaches, including supervised fine-tuning (SFT) and instruction tuning, along with more efficient methods such as LoRA and prefix tuning, which are shown under parameter-efficient fine-tuning (PEFT).

- Preference tuning aligns models using a reward model (RM), often learned via reinforcement learning from human feedback (RLHF) or direct preference optimization (DPO). These steps ensure that model outputs are accurate.

- Optimization techniques like MoE models reduce computation by activating a subset of model components. Distillation trains smaller models from larger ones, while quantization compresses weights for faster inference. QLoRA combines quantization and LoRA.

- LLM-as-a-Judge employs an LLM to assess outputs independently of references, which is beneficial for tasks that involve subjective evaluation.

- Retrieval-augmented generation (RAG) improves LLM responses by allowing them to access relevant external knowledge prior to generating text.

- LLM agents leverage ReAct to plan, observe, and act autonomously in chained tasks.

- Reasoning models address complex problems using structured reasoning and step-by-step thinking.

Applications

Lastly, the applications discuss four major use cases:

This is what you can expect to find in the VIP Cheatsheet: Transformers & Large Language Models. Transformers and language models are covered concisely, and are great for both review and introductory purposes. If you find yourself wanting deeper information on what is contained in the cheatsheet, you can always check out the Amidi brothers’ related book, Super Study Guide: Transformers & Large Language Models.

Visit the cheatsheet’s site to learn more and to get your own copy.

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.