Image by Author

While most of the industry is focused on building better large language models (LLMs), Groq is focused on the infrastructure side of AI: making these large models run faster.

In this tutorial, we will learn about the Groq LPU Inference Engine and how to use it from your laptop through its API and the Jan AI desktop app. We will also integrate it into VSCode to help us generate code, refactor it, document it, and generate unit tests. In the process, we will build our own AI coding assistant for free.

What Is the Groq LPU Inference Engine?

The Groq LPU (Language Processing Unit) Inference Engine is designed to generate fast responses for computationally intensive applications with a sequential component, such as LLMs.

Compared to CPUs and GPUs, the LPU has greater compute capacity for LLM workloads. This reduces the time it takes to predict each word (token), so sequences of text are generated much faster. The LPU also addresses memory bottlenecks, delivering better LLM performance than GPUs.
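To see why the sequential component matters, here is a toy sketch (not Groq's code and not a real LLM) of autoregressive generation: each new token depends on the one before it, so the steps cannot run in parallel, and total latency is the number of steps times the per-token latency. The bigram table `NEXT_TOKEN` and the fixed `seconds_per_token` are hypothetical stand-ins for a model's forward pass.

```python
from typing import Dict, List, Tuple

# Hypothetical "model": maps the previous token to the next one.
# A real LLM conditions on the whole context and runs a large forward pass per step.
NEXT_TOKEN: Dict[str, str] = {
    "<start>": "fast",
    "fast": "inference",
    "inference": "matters",
    "matters": "<end>",
}


def generate(max_tokens: int, seconds_per_token: float) -> Tuple[List[str], float]:
    """Generate tokens one at a time and accumulate simulated latency.

    Each step needs the token produced by the previous step, so the loop is
    inherently sequential: total time = number of steps * time per step.
    """
    tokens: List[str] = []
    previous = "<start>"
    elapsed = 0.0
    for _ in range(max_tokens):
        nxt = NEXT_TOKEN.get(previous, "<end>")
        elapsed += seconds_per_token  # one simulated forward pass per token
        if nxt == "<end>":
            break
        tokens.append(nxt)
        previous = nxt
    return tokens, elapsed


tokens, elapsed = generate(max_tokens=10, seconds_per_token=0.02)
print(tokens, round(elapsed, 2))
```

Halving `seconds_per_token` halves the total time, which is exactly the lever that faster inference hardware pulls.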

In short, Groq LPU technology makes your LLMs super fast, enabling real-time AI applications. Read the Groq ISCA 2022 paper to learn more about the LPU architecture.

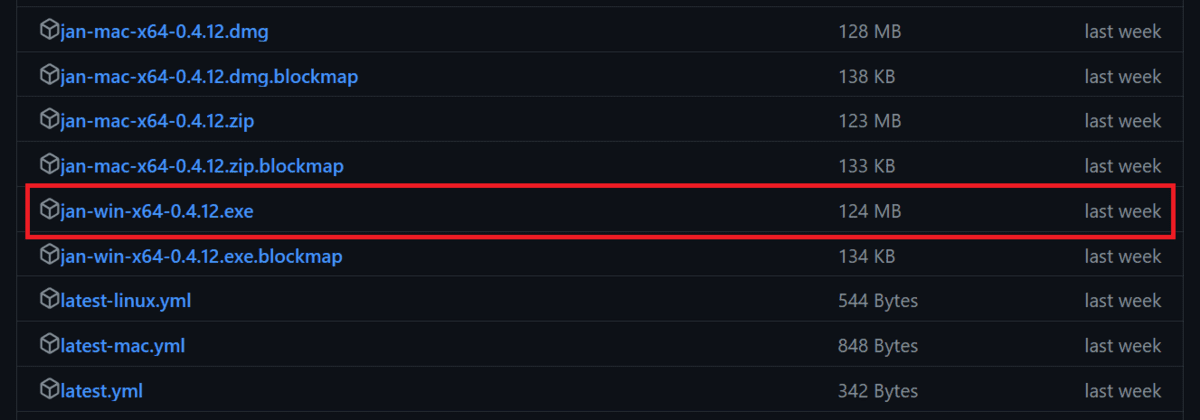

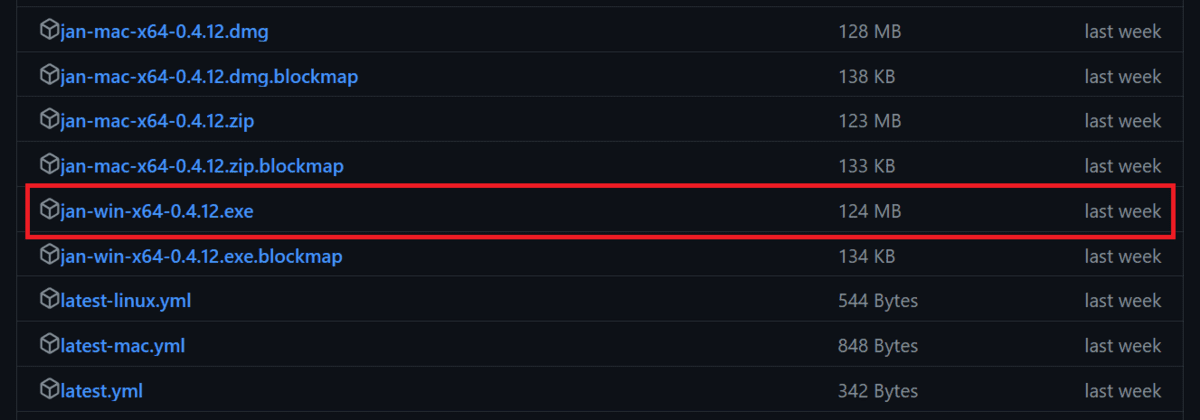

Installing Jan AI

Jan AI is a desktop application that runs open-source large language models locally and can also connect to proprietary models through their APIs. It is available for Linux, macOS, and Windows. We will install it on Windows by going to the janhq/jan Releases page on GitHub and downloading the file with the `.exe` extension.

If you want to run LLMs locally for better privacy, read the 5 Ways To Use LLMs On Your Laptop blog and start using top-of-the-line open-source language models.

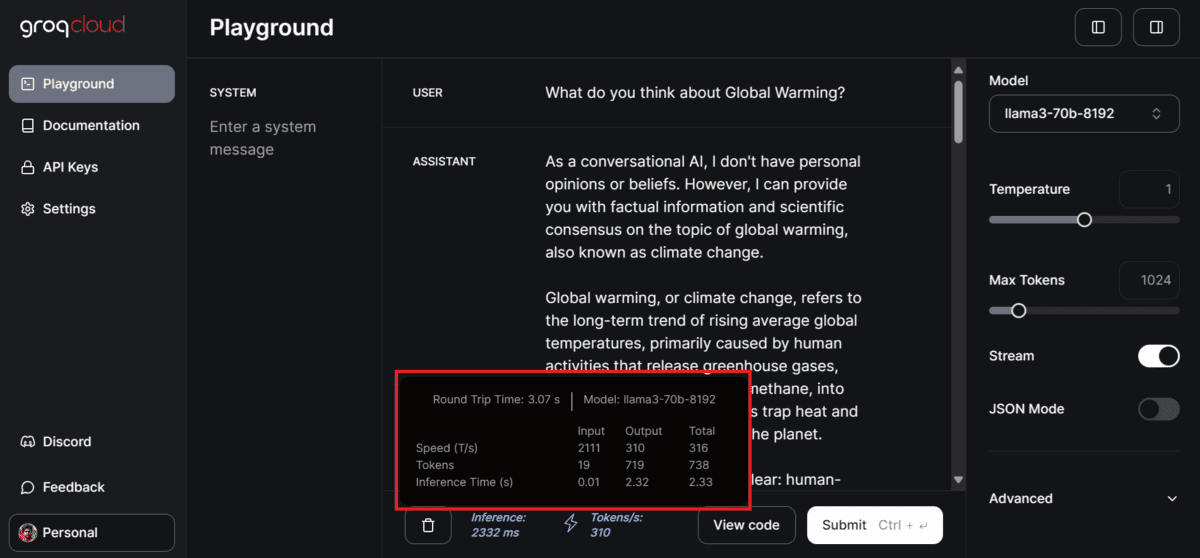

Creating the Groq Cloud API Key

To use Groq Llama 3 in Jan AI, we need an API key. To get one, we will create a Groq Cloud account at https://console.groq.com/.

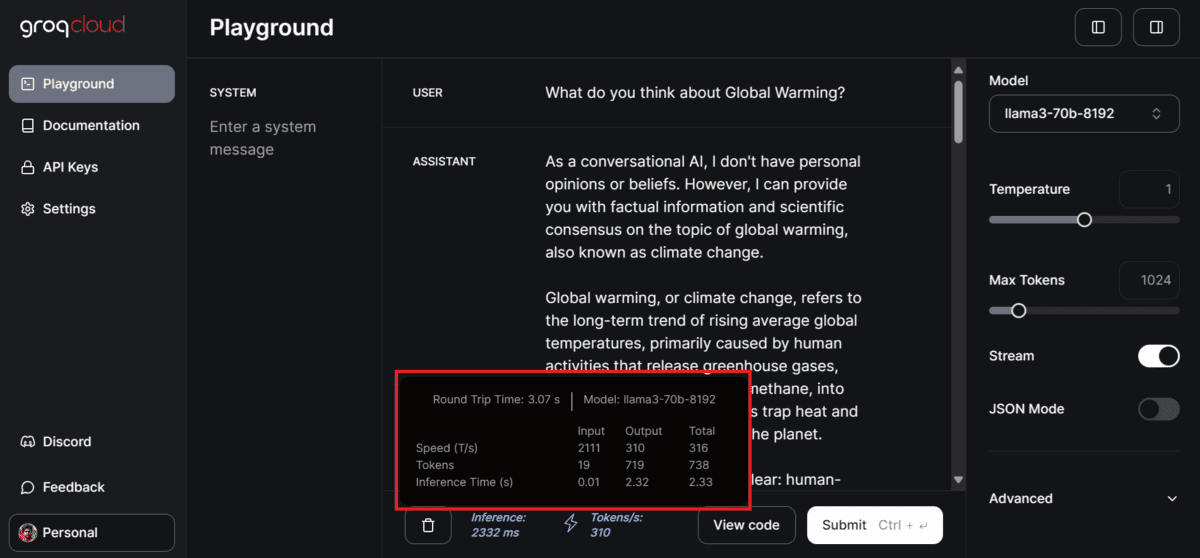

If you want to test the various models offered by Groq, you can do that without setting up anything by going to the “Playground” tab, selecting the model, and adding the user input.

In our test, it was extremely fast, generating 310 tokens per second, by far the highest throughput I have ever seen. In my experience, neither Azure AI nor OpenAI comes close to this.
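Tokens per second is just generated tokens divided by generation time, so it translates directly into how long you wait for a reply. A minimal sketch of that arithmetic; the 500-token reply and the 30 tokens/s comparison rate are made-up illustrative numbers, not measurements.

```python
def tokens_per_second(num_tokens: int, seconds: float) -> float:
    """Throughput as a playground reports it: tokens generated / elapsed time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return num_tokens / seconds


def seconds_to_generate(num_tokens: int, throughput: float) -> float:
    """Time needed to stream num_tokens at a given throughput (tokens/s)."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return num_tokens / throughput


# Illustrative only: a 500-token answer at the 310 tokens/s observed above
# versus a hypothetical 30 tokens/s baseline.
fast = seconds_to_generate(500, 310)
slow = seconds_to_generate(500, 30)
print(f"{fast:.2f} s vs {slow:.2f} s")  # prints: 1.61 s vs 16.67 s
```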

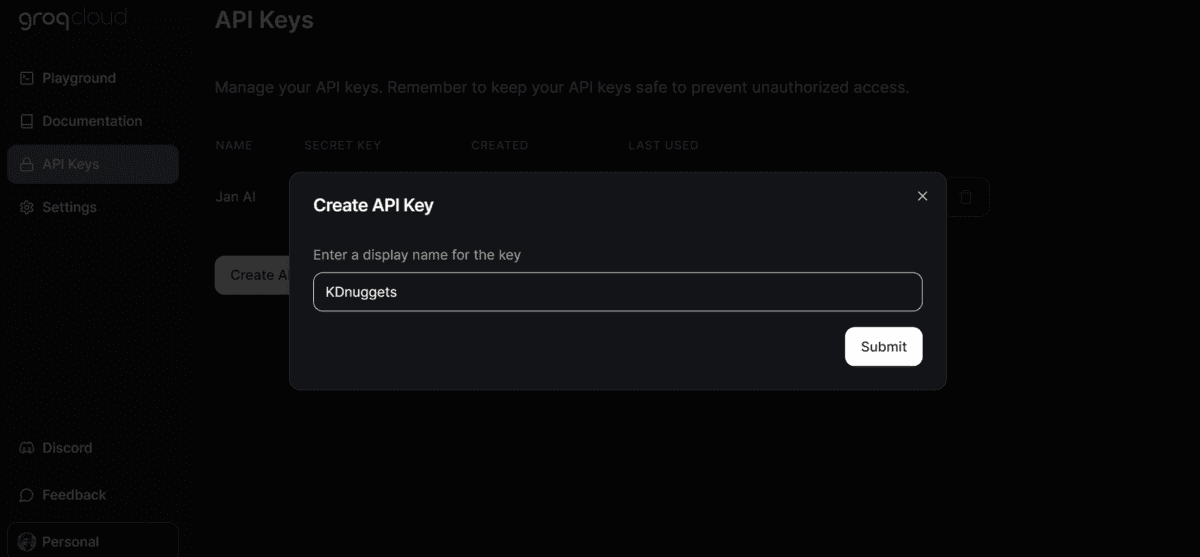

To generate an API key, click “API Keys” in the left panel, then click “Create API Key” and copy the generated key.
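Treat the key like a password. If you also plan to call the API from your own scripts, a common pattern is to keep the key in an environment variable rather than pasting it into code. A minimal sketch, assuming a variable we name `GROQ_API_KEY` and the standard Bearer-token `Authorization` header used by OpenAI-style APIs:

```python
import os
from typing import Dict, Mapping, Optional


def load_groq_api_key(env: Optional[Mapping[str, str]] = None,
                      var_name: str = "GROQ_API_KEY") -> str:
    """Read the API key from the environment instead of hard-coding it.

    The variable name is an assumption for this sketch; `env` can be passed
    explicitly (e.g. in tests), otherwise os.environ is used.
    """
    source = os.environ if env is None else env
    key = source.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; create a key in the Groq console and export it"
        )
    return key


def auth_headers(api_key: str) -> Dict[str, str]:
    """Bearer-token headers for a JSON HTTP API call (assumed auth scheme)."""
    return {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
```

On macOS or Linux, set the variable with `export GROQ_API_KEY="..."` (pasting the key you copied) in the terminal before starting Python.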

Using Groq in Jan AI

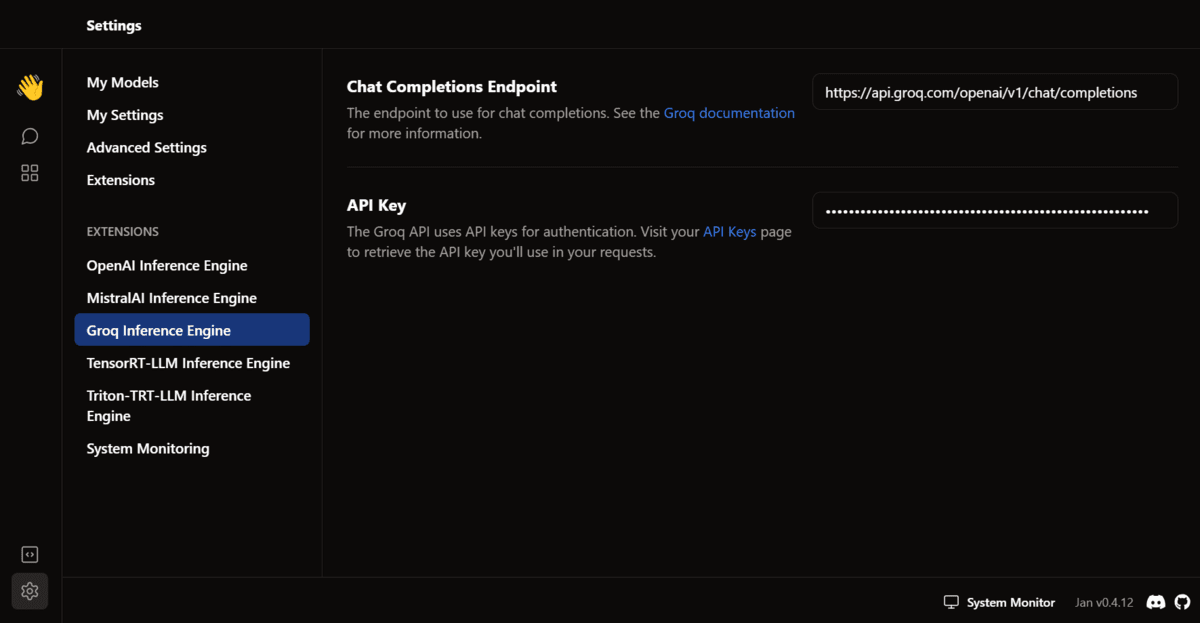

In the next step, we will paste the Groq Cloud API key into the Jan AI application.

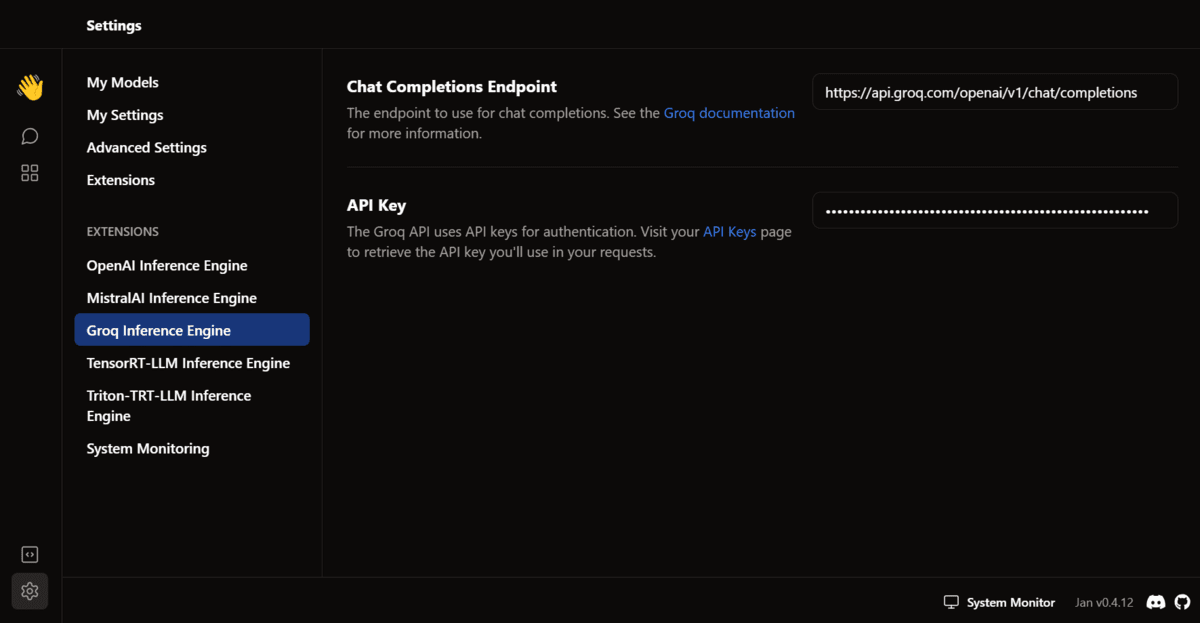

Launch the Jan AI application, go to the settings, select the “Groq Inference Engine” option in the extension section, and add the API key.

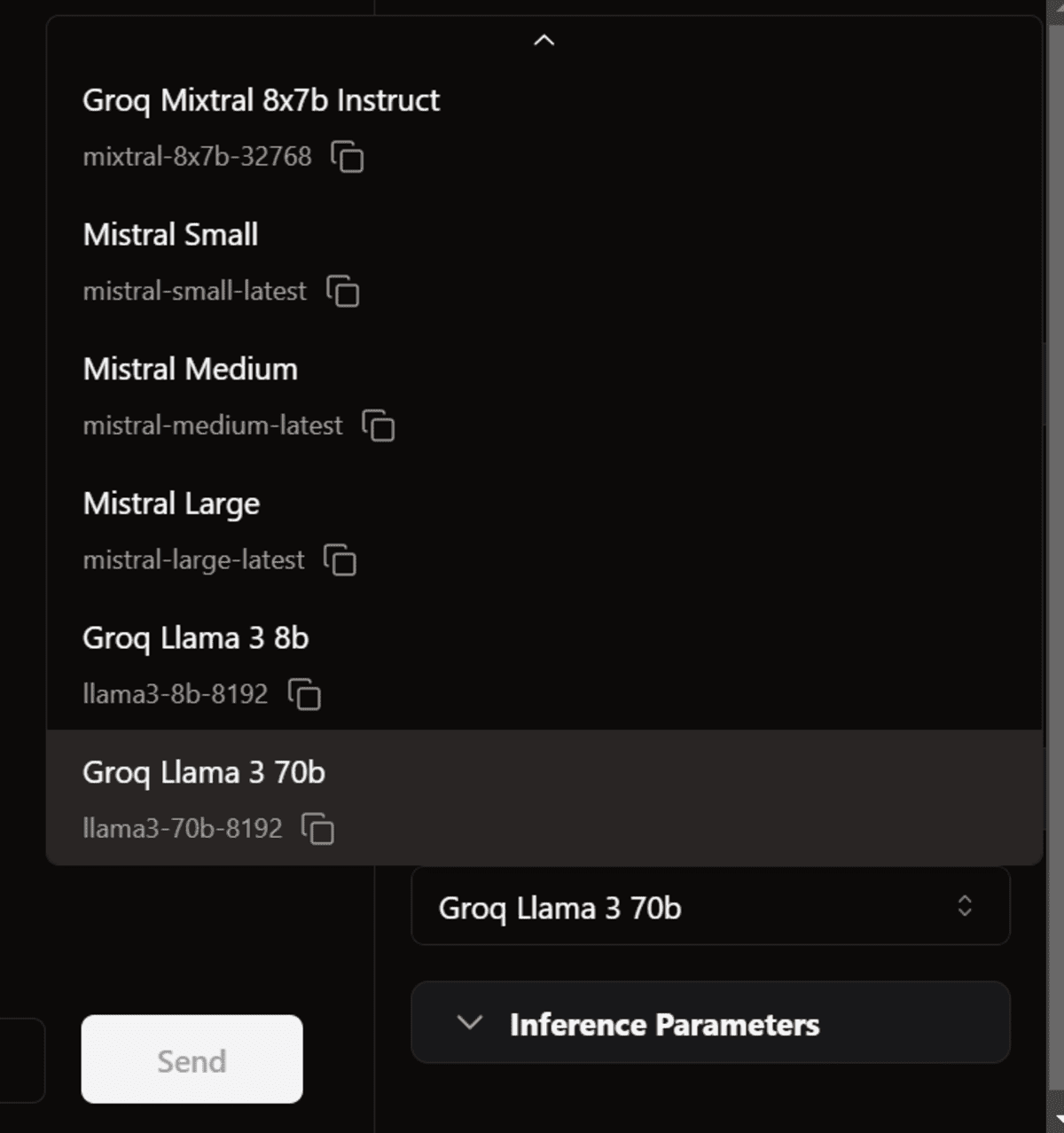

Then go back to the thread window. In the model section, select Groq Llama 3 70B under “Remote” and start prompting.
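Jan AI handles the HTTP calls for you, but it helps to see what prompting a Groq-hosted model looks like at the API level. Below is a minimal sketch using only Python's standard library. It assumes Groq's OpenAI-compatible endpoint `https://api.groq.com/openai/v1/chat/completions` and the model ID `llama3-70b-8192` for Llama 3 70B; check the model list in the Groq console, since IDs change over time. Groq also publishes an official Python SDK (`pip install groq`) that wraps this endpoint. The function below only builds the request and does not send it.

```python
import json
import urllib.request
from typing import Dict, List

# Assumed values; verify them against Groq's current documentation.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"
MODEL_ID = "llama3-70b-8192"


def build_chat_request(api_key: str, prompt: str,
                       model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": prompt},
    ]
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_json: Dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]


# Sending requires network access and a valid key, for example:
# with urllib.request.urlopen(build_chat_request(key, "Explain LPUs briefly")) as resp:
#     print(extract_reply(json.load(resp)))
```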

The response generation is so fast that I can’t even keep up with it.

Note: The free version of the API has some limitations. Visit https://console.groq.com/settings/limits to learn more about them.
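When a request exceeds those limits, the API typically rejects it with HTTP status 429 (Too Many Requests) until your quota recovers. A common client-side remedy is retrying with exponential backoff. A minimal sketch, where `RateLimited` is a hypothetical exception your own HTTP code would raise on a 429 response and the delays are arbitrary:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by your own client code on HTTP 429."""


def with_backoff(call: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimited, doubling the wait after each failure."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up after the last allowed retry
            sleep(base_delay * (2 ** attempt))  # waits 1 s, 2 s, 4 s, ...
    raise AssertionError("unreachable")
```

Injecting `sleep` makes the helper testable without real waiting; in practice you might also honor a `retry-after` header if the response includes one.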

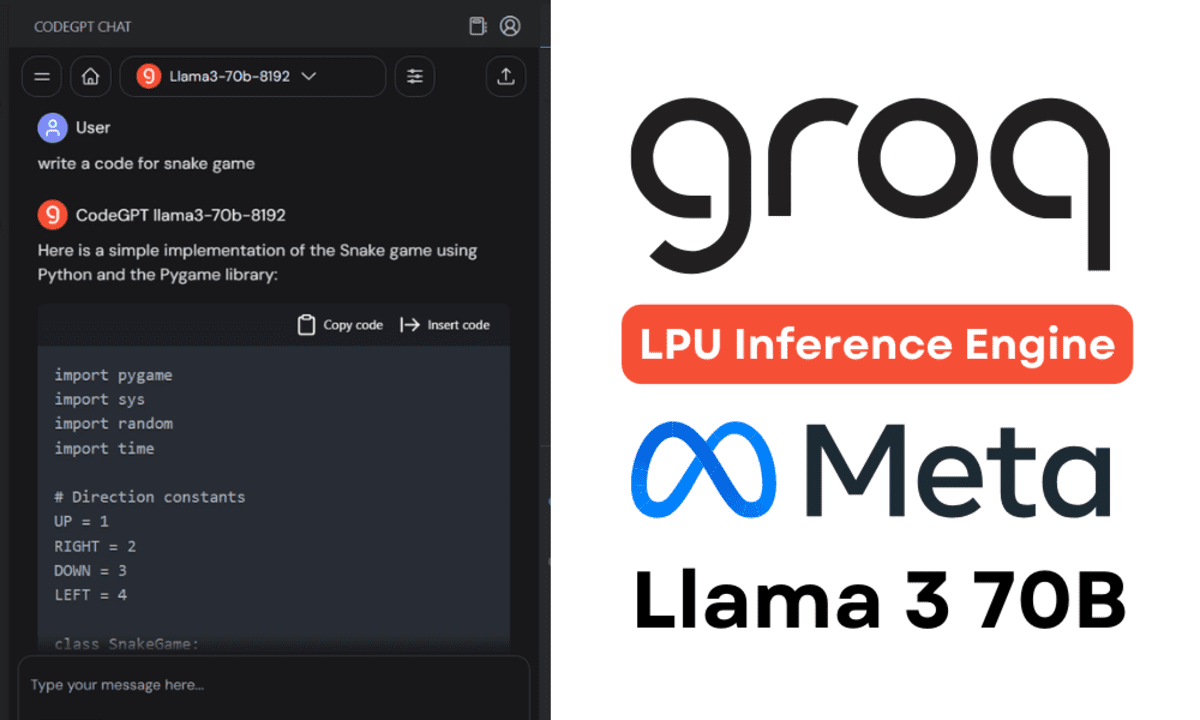

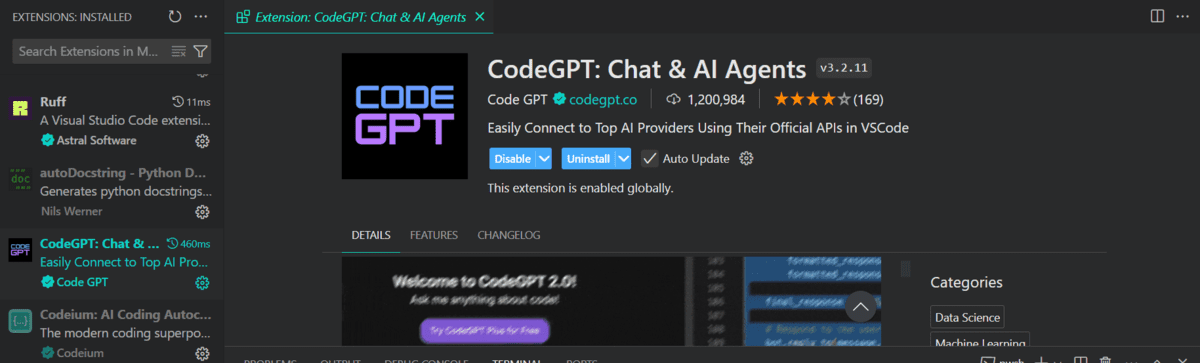

Using Groq in VSCode

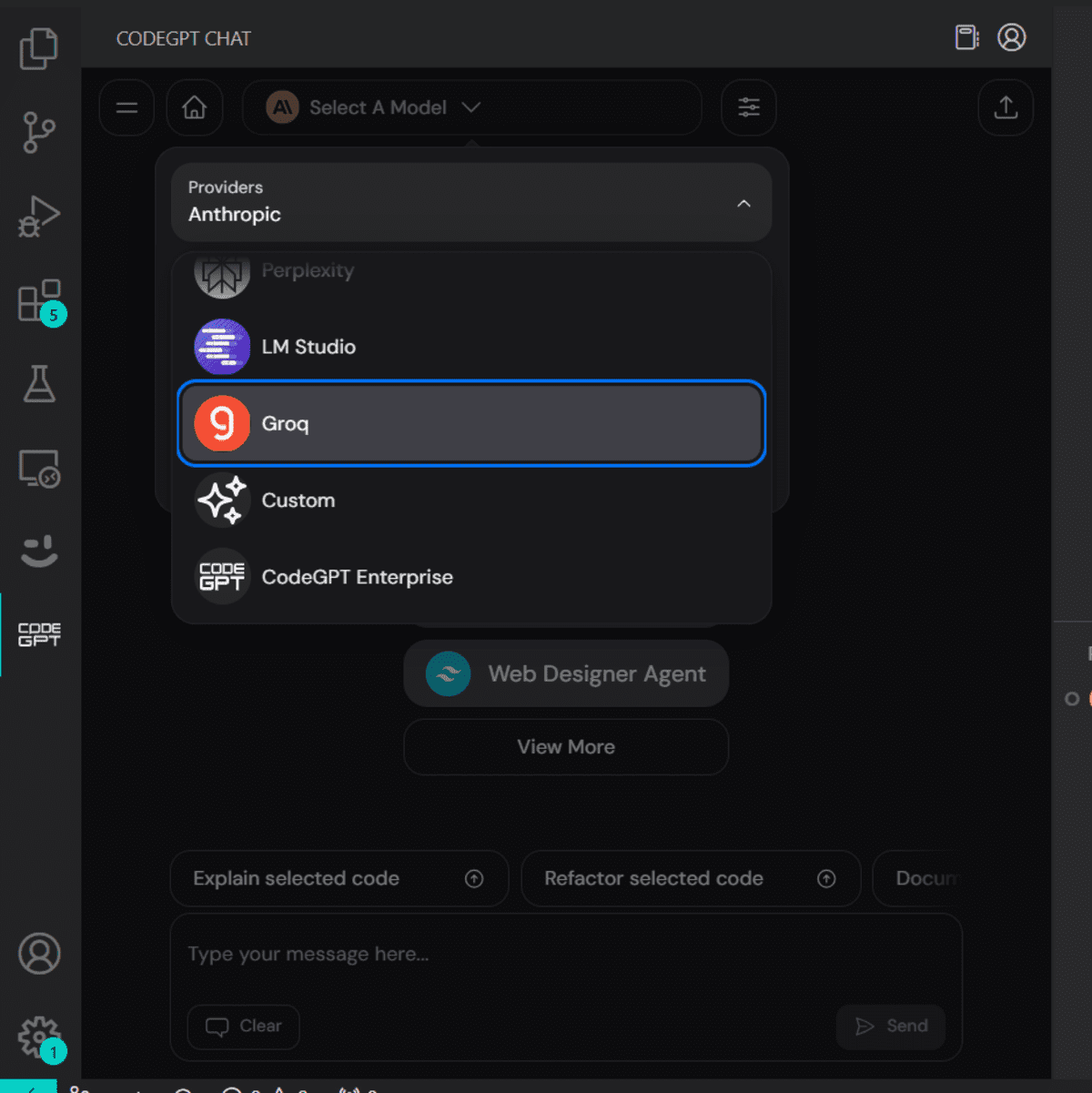

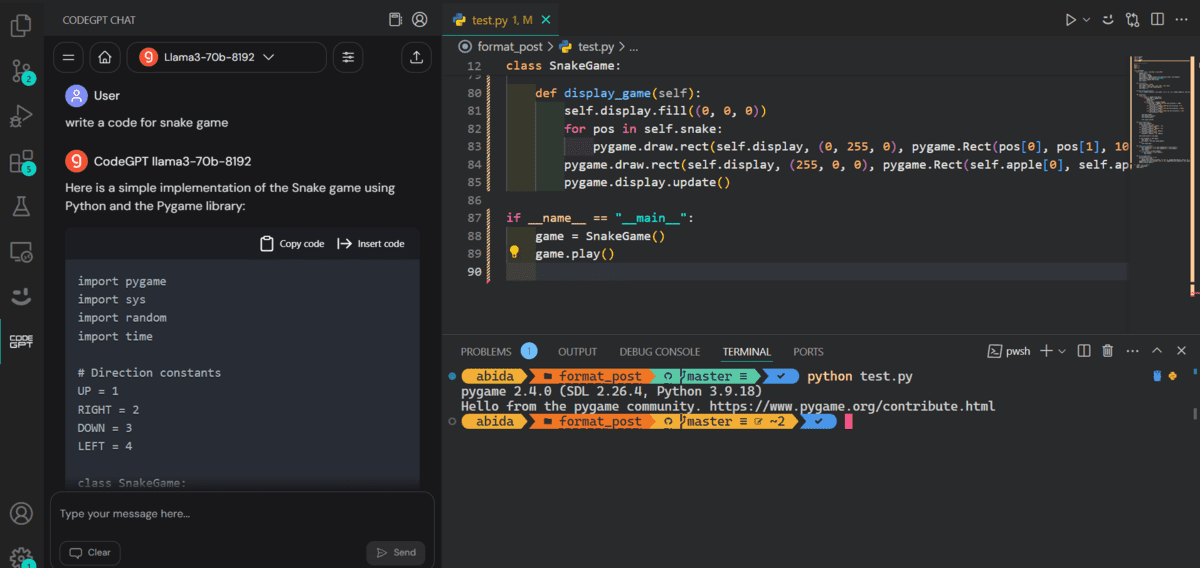

Next, we will paste the same API key into the CodeGPT VSCode extension to build our own free AI coding assistant.

Install the CodeGPT extension by searching for it in the Extensions tab.

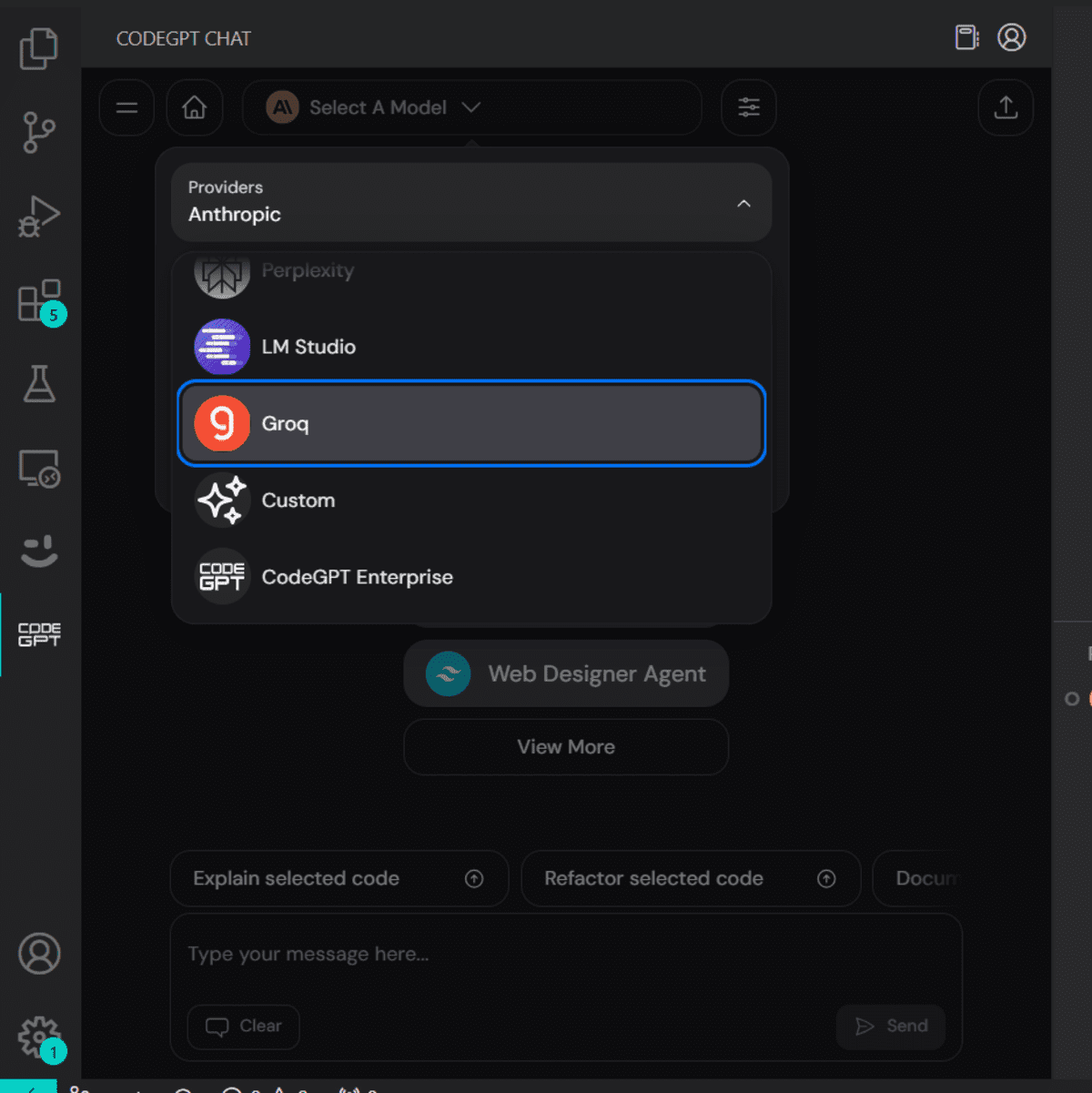

A CodeGPT tab will then appear, where you can select the model provider.

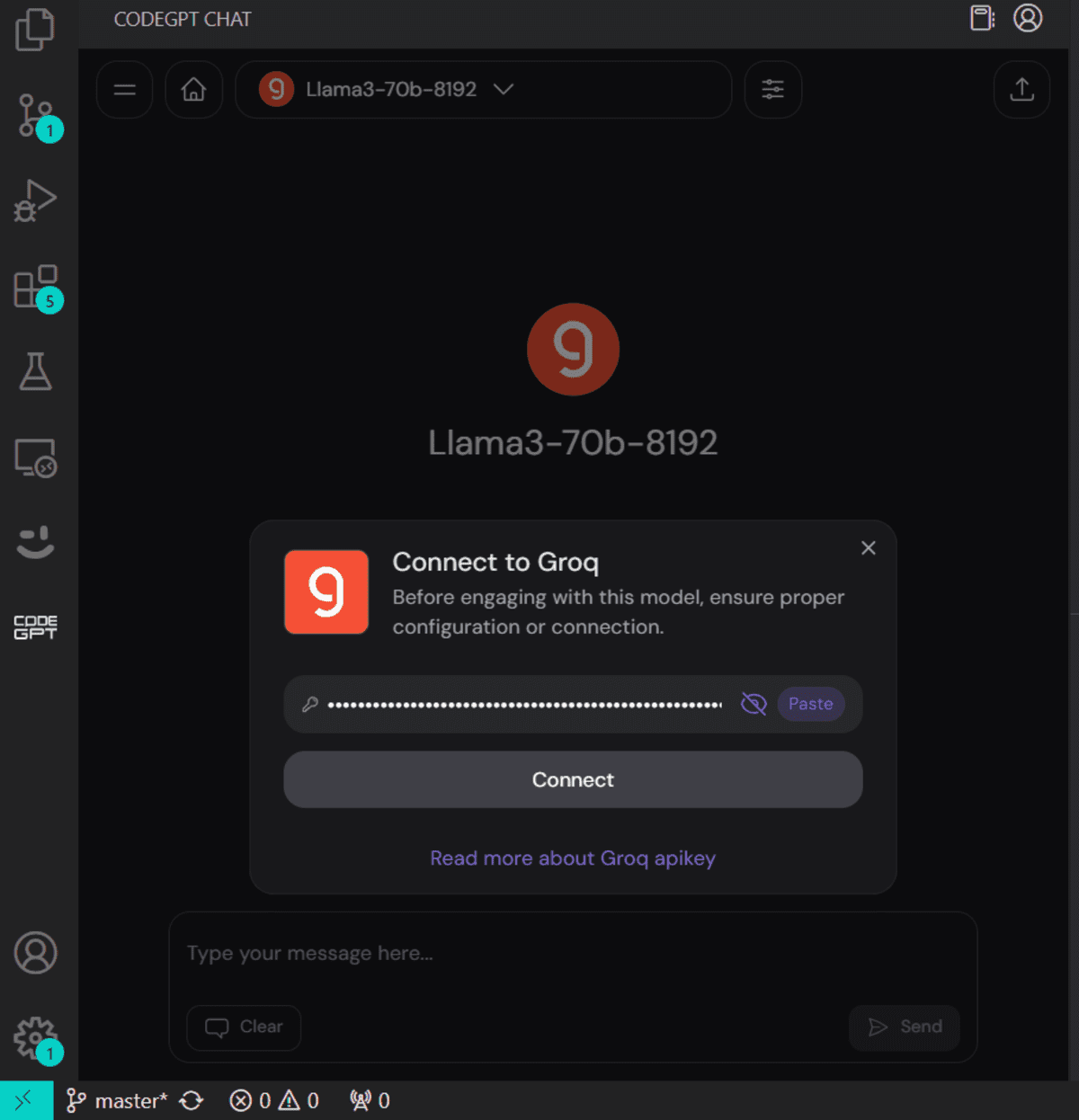

When you select Groq as the model provider, CodeGPT asks for an API key. Paste the same key and you are good to go. Alternatively, you can generate a separate API key just for CodeGPT.

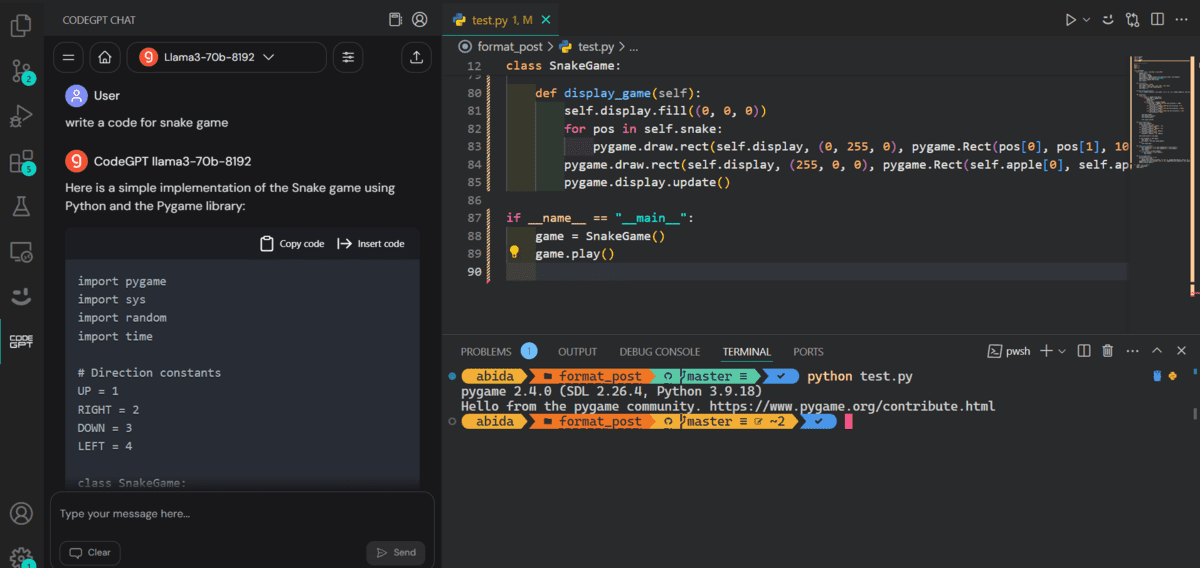

We will now ask it to write code for a snake game. It took 10 seconds to generate the code, and then we ran it.

Here is a demo of our snake game in action.

Learn about the top five AI coding assistants and become an AI-powered developer and data scientist. Remember, AI is here to assist us, not replace us, so be open to it and use it to improve your code.

Conclusion

In this tutorial, we learned about the Groq LPU Inference Engine and how to access it from the Jan AI Windows application. To top it off, we integrated it into our coding workflow with the CodeGPT VSCode extension, which generates responses in real time for a better development experience.

Now, most companies will have to develop their own inference engines to match Groq’s speed. Otherwise, Groq will take the crown in a few months.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.